Office Hours And Recent Results

In this project we’re working together to develop a more fundamental understanding of how deidentification algorithms behave on diverse human data. If you want to see how that’s going as the project progresses, here’s where to look. Below you’ll find our own research talks, presentations from invited subject matter experts, and summaries of what we’ve learned from all of our participants during office hours.

Contents:

Leaderboards

Research Talks and Recent Evaluation Results

- A sampling from the Data and Metrics Archive

- CRC Kick-off talk at Privacy-Preserving Artificial Intelligence workshop (PPAI-23)

Tutorials

Office Hours Discussion Summaries

Invited Subject Matter Expert Seminars

- TBA

Leaderboards

Research Talks and Recent Evaluation Results

A sampling from the Data and Metrics Archive

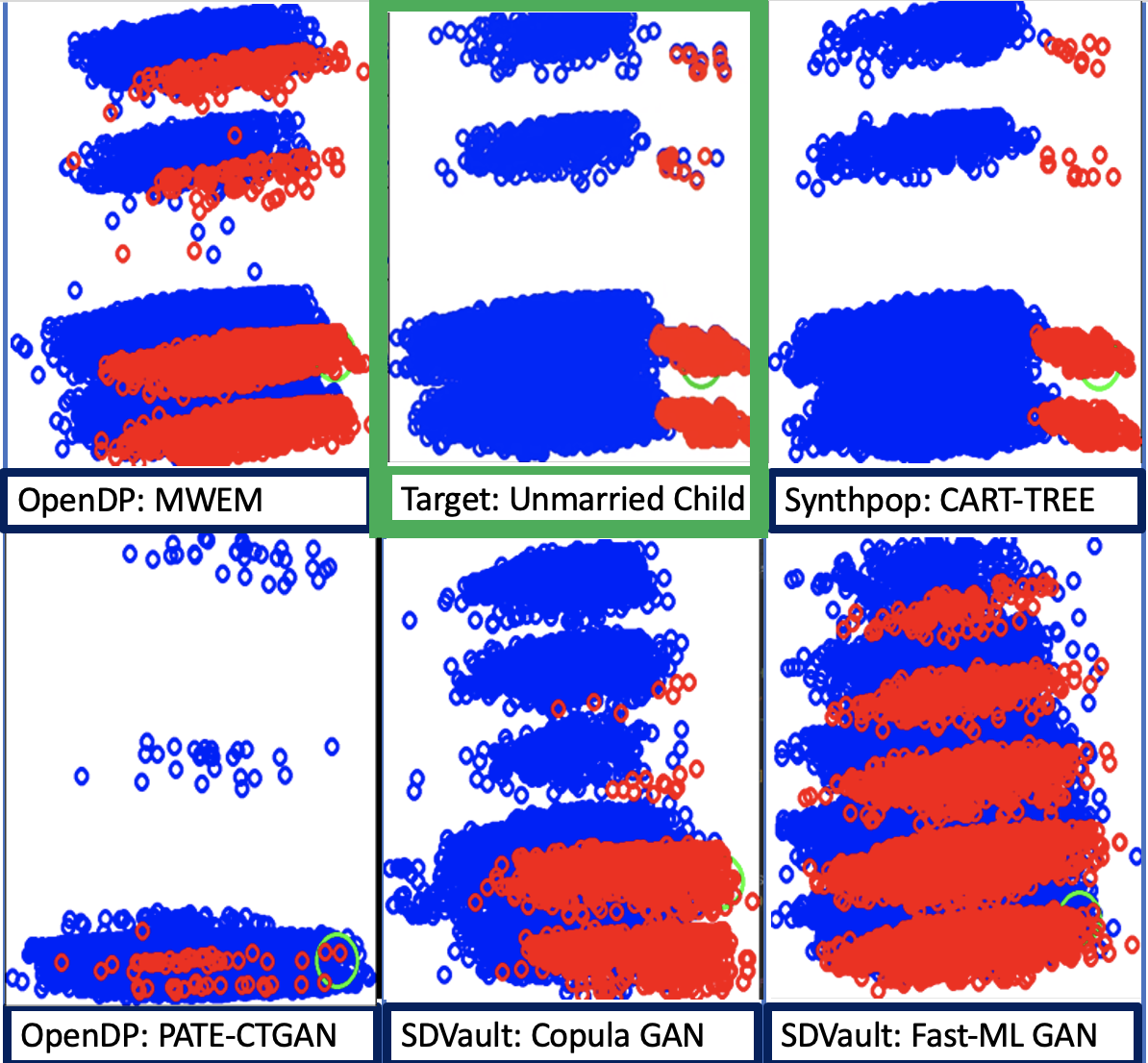

Below we provide a selection of deidentified data reports from version 1 of the Data and Metrics Bundle (which can be downloaded in its entirety here). The full archive contains 300 deidentified data samples from a variety of libraries, algorithms, parameter settings and feature sets.

Reports:

- DP Histogram (epsilon 10)

- SmartNoise PACSynth (epsilon 10)

- SmartNoise MST (epsilon 10)

- R Synthpop CART

- MostlyAI Synthetic Data Platform

- Synthetic Data Vault CTGAN

- SynthCity ADSGAN

Posted on: June 19, 2023

CRC Kick-off talk at Privacy-Preserving Artificial Intelligence workshop (PPAI-23)

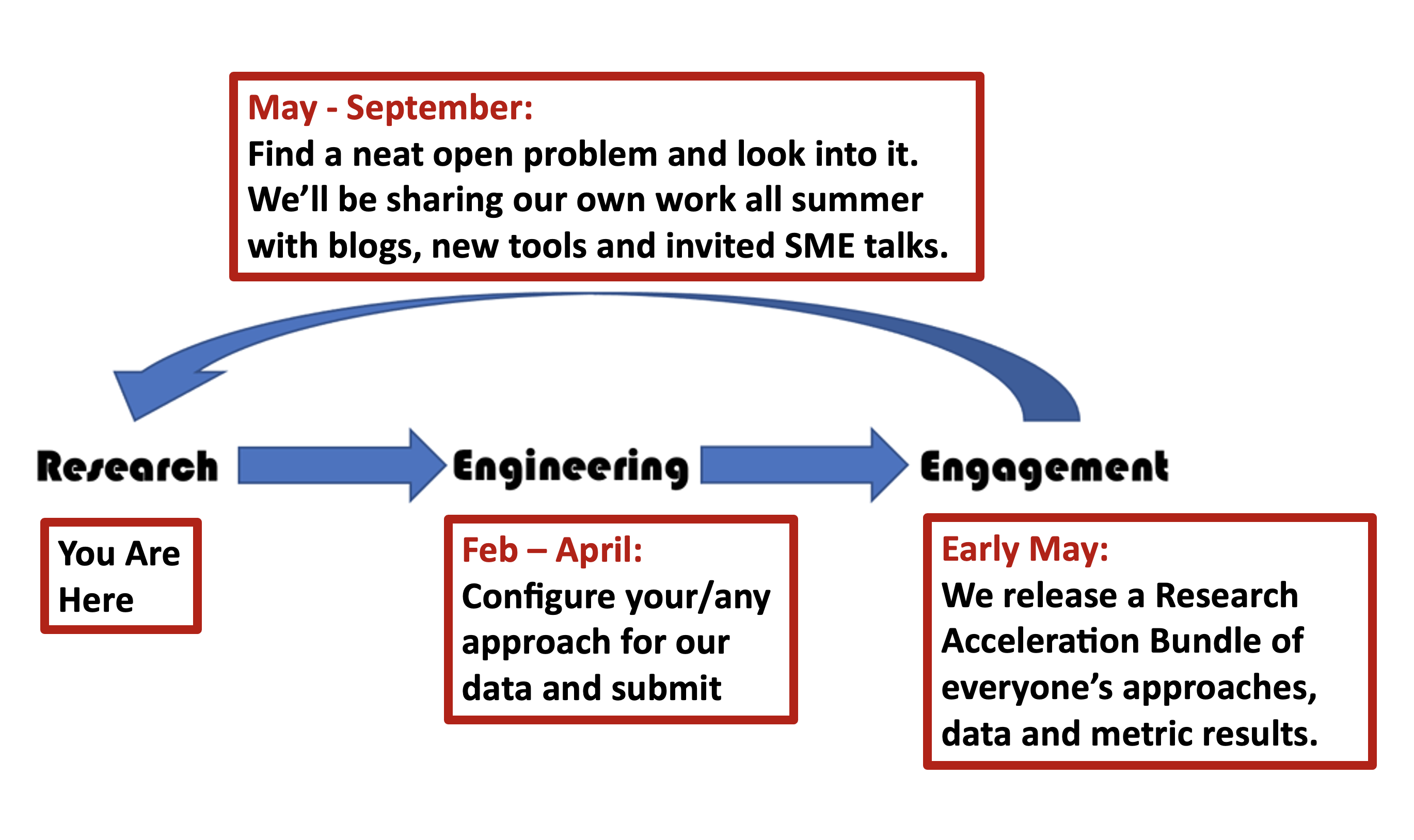

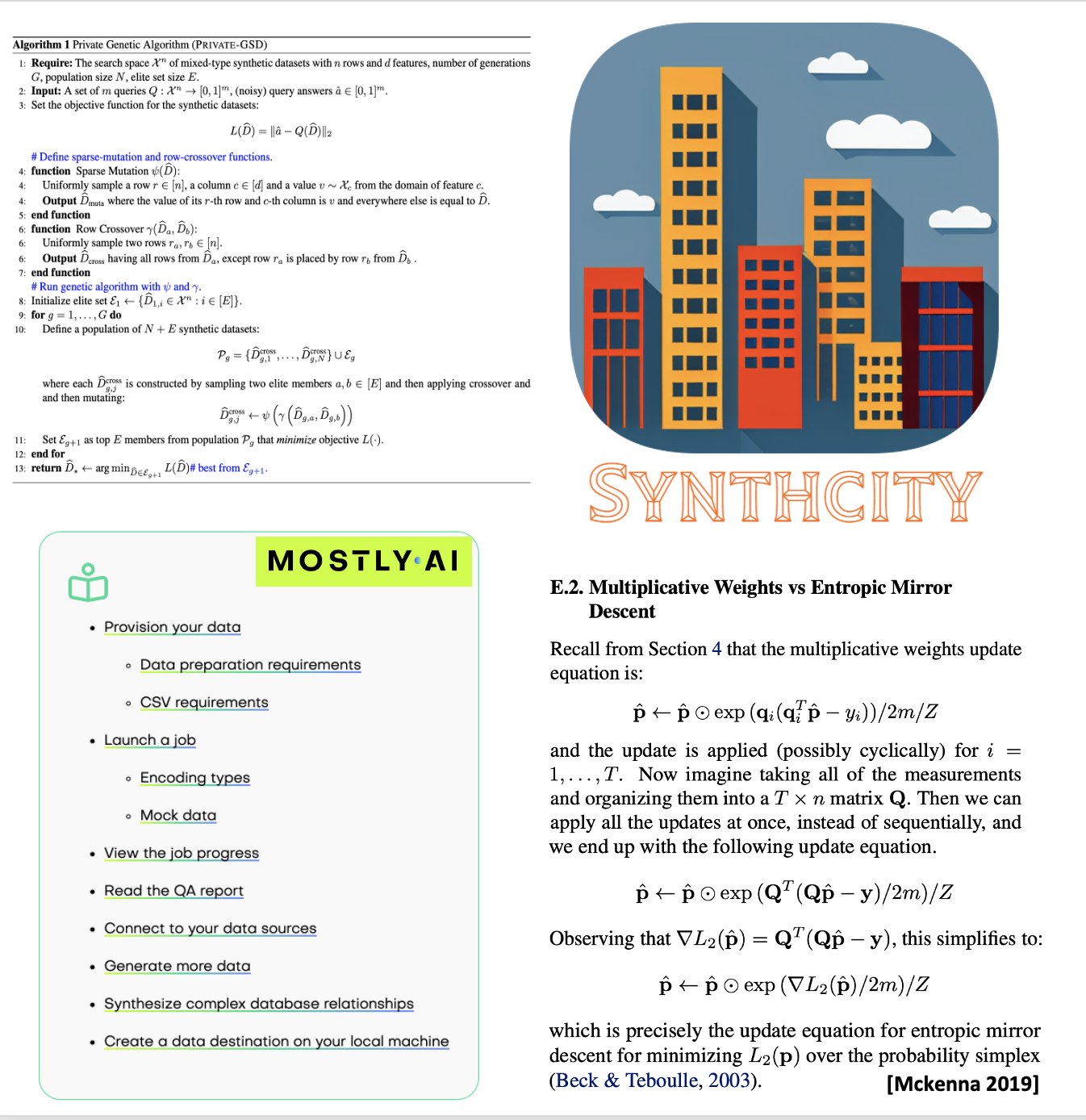

We launched the CRC program with comparative evaluation results on over a dozen techniques from libraries like OpenDP/SmartNoise, Synthetic Data Vault, R Synthpop, and Tumult Analytics. This deck includes an introduction to the “Research, Engineering, Engagement” cycle, our first round of demonstration evaluations (and early observations), and an illustrated walkthrough of our project resources.

Document Link

By: Christine Task and Karan Bhagat

Posted on: February 13, 2023

Tutorials

Website orientation and data submission tutorial

This tutorial offers a tour of the resources that are available on the project website. It then explains how you can use these resources to contribute to the first phase of the Collaborative Research Cycle by submitting samples of deidentified data from different privacy techniques. We demonstrate how the data submission process might go for a very cool privacy algorithm, exploring the impact of "parameter [x]".

Video Link

By: Christine Task

Posted on: March 7, 2023

Project overview

This is a re-recording of the same slides that were presented for the initial project kick-off at the PPAI-23 workshop. Feel free to read through the slides to follow along at home; the presentation recording includes additional (audio) information about metric results/analysis and project motivations.

Video Link

By: Christine Task

Posted on: March 7, 2023

Office Hours Discussion Summaries

Office hour on March 13, 2023

Office hours are a time to get together, review evaluations, and collaboratively think about the data/algorithms that have been submitted in the past two weeks. For our first office hours we looked at GAN algorithms from the SynthCity open-source library, MostlyAI's Synthetic Data Platform applied to all three benchmark data sets (MA, TX and National), and two fascinating differentially private marginal-based approaches contributed by academic researchers. Comparing SynthCity with MostlyAI shows the impact of automated neural network tuning on performance (but very diverse data still presents interesting challenges). Comparing MWEM+PGM with GeneticSD shows how pruning marginals can dramatically decrease noisiness but also potentially increase bias (impacting regression and correlations). Check out the video and the reports for more details.
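To make the noise side of that trade-off concrete, here is a minimal sketch. It is not MWEM+PGM or GeneticSD; it assumes a generic setup in which a fixed epsilon is split evenly across the measured marginals and each marginal is released with the Laplace mechanism. The function name and default sensitivity are hypothetical, chosen only for illustration. Under those assumptions, measuring fewer marginals leaves more budget per marginal and therefore less noise per count, while any structure in the dropped marginals goes unmeasured (the source of potential bias).

```python
def laplace_scale_per_marginal(epsilon: float, num_marginals: int,
                               sensitivity: float = 1.0) -> float:
    """Laplace noise scale per count when epsilon is split evenly.

    Assumption (illustrative only): sequential composition, so each of the
    num_marginals marginals receives epsilon / num_marginals, and the Laplace
    scale is sensitivity / (epsilon / num_marginals).
    """
    if epsilon <= 0 or num_marginals <= 0:
        raise ValueError("epsilon and num_marginals must be positive")
    # Algebraically identical to sensitivity / (epsilon / num_marginals).
    return sensitivity * num_marginals / epsilon


if __name__ == "__main__":
    # Hypothetical marginal counts: pruning from 50 down to 3 marginals.
    for m in (50, 10, 3):
        scale = laplace_scale_per_marginal(epsilon=1.0, num_marginals=m)
        print(f"{m} marginals: Laplace scale {scale:.1f} per count")
```

The expected absolute error of a Laplace-noised count equals its scale, so the printed values can be read directly as the typical per-count error under this simplified budget split.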

Video Link

Reports:

- SynthCity: DP-GAN (epsilon 1), PATE-GAN (epsilon 1), ADSGAN (lambda 10)

- MostlyAI: MA (least diverse), TX (moderately diverse), National (very diverse)

- LostInTheNoise: MWEM+PGM (epsilon 1)

- SynCity: GeneticSD (epsilon 10)

Conducted By: Christine Task

Posted on: March 14, 2023

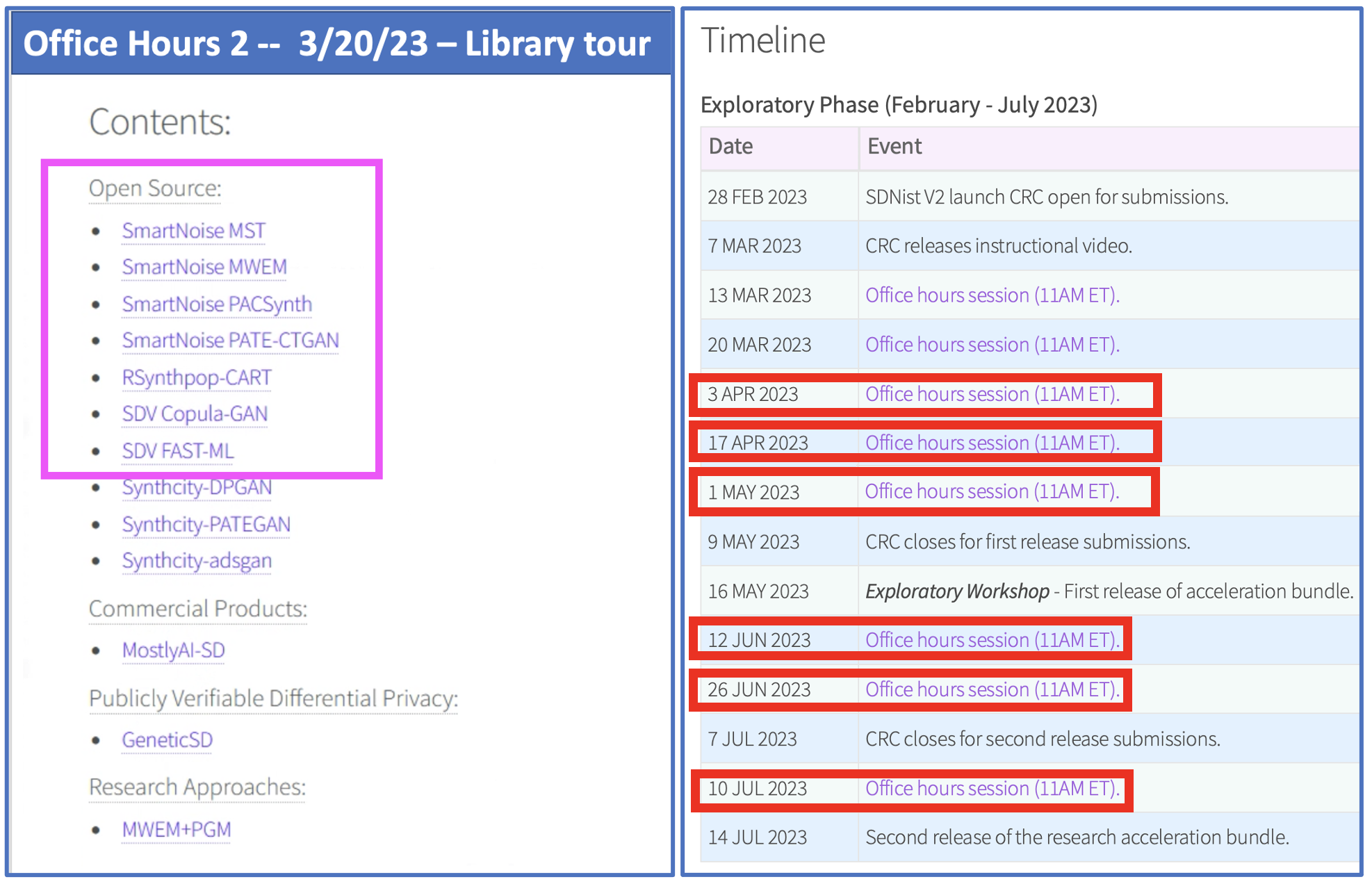

Office hour on March 20, 2023

For this week's office hours we started off by reviewing recent updates to our program schedule: we've added biweekly office hours and will be accepting data submissions through mid-July. Because our goal is to explore how data privacy algorithms behave on diverse data, our team leaderboard rankings are based on how much each team contributes to that exploration, that is, how many algorithms and privatized data sets you've submitted. In addition to contributing new research (always appreciated!), we have a lot of work left to do exploring existing mature techniques and libraries. So this week we offer a tour of the libraries already in our archive. You can help our project (and your own ranking on the leaderboard) by exploring these libraries using different feature sets, target data sets (MA, TX and National) and parameter configurations. Even if you're contributing your own new research too, this is a good way to get a broader picture of how data privacy operates; you may learn something useful comparing these techniques to your own.

Video Link

Privacy Library Tour:

- Synthcity: Location | Installation | Documentation

- SDV: Location | Installation | Documentation

- SmartNoise: Location | Installation | Documentation

- R Synthpop: Location | Installation | Documentation

- Tumult Analytics: Location | Installation | Documentation

Conducted By: Christine Task

Posted on: March 20, 2023

Office hour on April 3, 2023

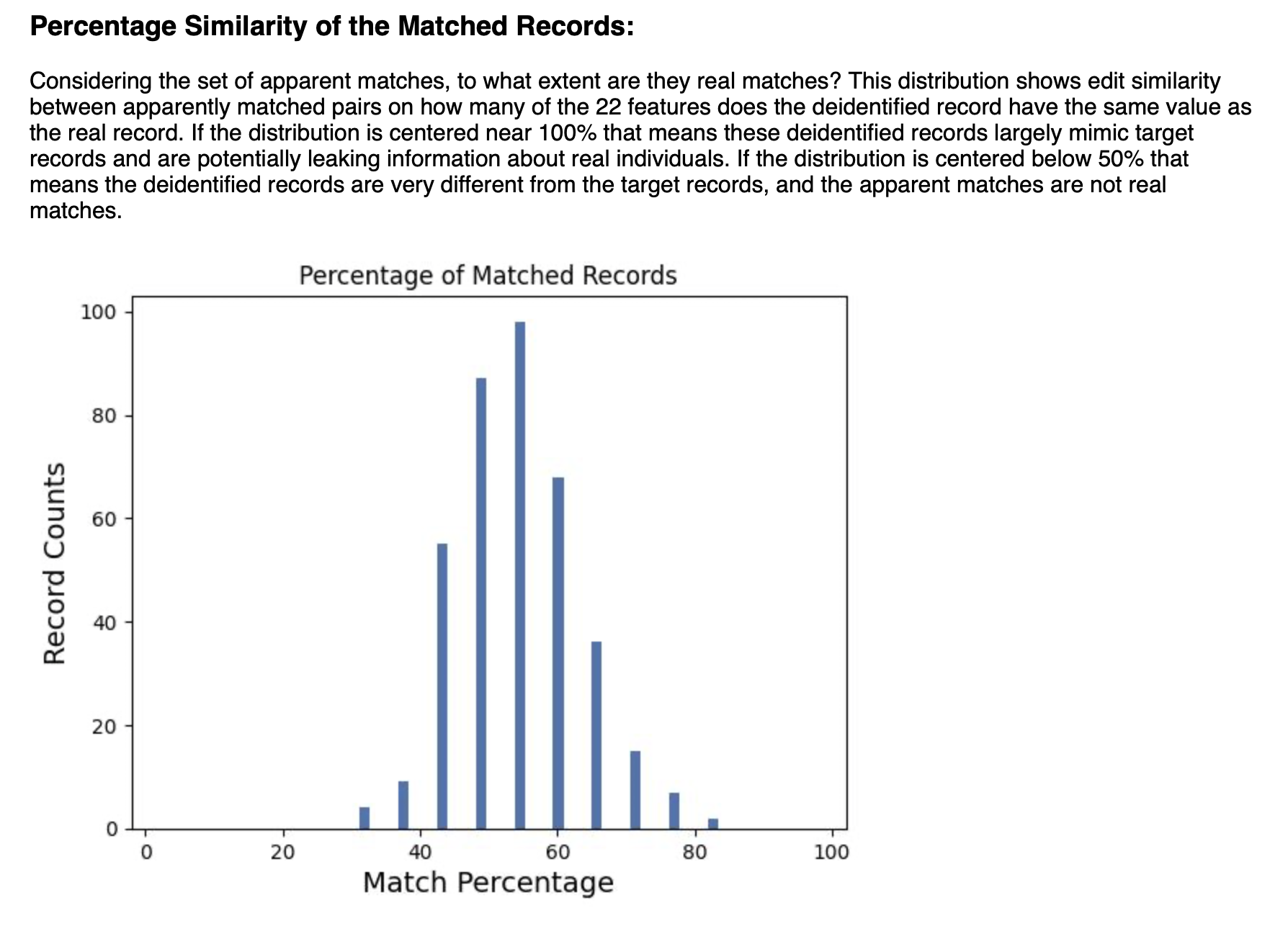

For our third office hours, we cover a few important topics. Because the SDNist evaluation library is applicable to any privacy approach, we're not limited to synthetic data privacy; we can explore traditional approaches as well. We provide a tour of the sdcmicro Statistical Disclosure Control library, and check out two of its approaches: feature-suppression-based k-anonymity and Post Randomization (PRAM). Having looked more at utility in previous office hours, we take a closer look at our own privacy metrics, the Apparent Match metric and the unique exact matches metric.

And finally, we take a look at two commercial synthetic data products: a differentially private synthetic data sample from Sarus and an updated synthetic data submission from MostlyAI.
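For readers new to these ideas, the sketch below illustrates two of the concepts from this session: the k in k-anonymity, and a unique-exact-match style privacy check. It is not the SDNist or sdcmicro implementation. The function names, the toy records, the feature names, and the choice to divide by the total number of target records are all assumptions made for illustration.

```python
from collections import Counter


def smallest_group_size(records, quasi_identifiers):
    """Return k: the size of the smallest group of records that share the
    same values on the given quasi-identifier features (0 if no records)."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values()) if groups else 0


def unique_exact_match_rate(target, deidentified, features):
    """Fraction of target records that are unique within the target data
    and also appear verbatim (on `features`) in the deidentified data.

    Assumption: the denominator is the total number of target records.
    """
    if not target:
        return 0.0

    def key(record):
        return tuple(record[f] for f in features)

    target_counts = Counter(key(r) for r in target)
    deid_keys = {key(r) for r in deidentified}
    matched = sum(1 for k, count in target_counts.items()
                  if count == 1 and k in deid_keys)
    return matched / len(target)


# Made-up toy data; feature names are hypothetical.
FEATURES = ["age", "sex", "region"]
target = [
    {"age": 34, "sex": "F", "region": "A"},
    {"age": 34, "sex": "F", "region": "A"},
    {"age": 71, "sex": "M", "region": "B"},
    {"age": 19, "sex": "F", "region": "C"},
]
deidentified = [
    {"age": 34, "sex": "F", "region": "A"},
    {"age": 71, "sex": "M", "region": "B"},
    {"age": 20, "sex": "F", "region": "C"},
]

if __name__ == "__main__":
    print("k of target data:", smallest_group_size(target, FEATURES))
    print("unique exact match rate:",
          unique_exact_match_rate(target, deidentified, FEATURES))
```

In the toy data, two target records are unique and one of them reappears unchanged in the deidentified data, so that record is the kind of case a unique-exact-match check is meant to flag.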

Video Link

Open-Source Privacy Library Tour:

- sdcmicro R: Location | Installation | Documentation | GUI App
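
As a rough illustration of the Post Randomization (PRAM) idea mentioned in this session, the sketch below randomly swaps categorical values. It is written in Python rather than R and does not reproduce the sdcmicro implementation or its parameters. The function name, the `keep_prob` parameter, and the uniform choice among the other categories are assumptions; PRAM in general is defined by a transition matrix, and this sketch uses the simplest one.

```python
import random


def pram(values, categories, keep_prob, seed=0):
    """Post-randomize a categorical column.

    Each value is kept with probability keep_prob; otherwise it is replaced
    by a different category chosen uniformly at random. This corresponds to
    a transition matrix with keep_prob on the diagonal (an assumption made
    for illustration).
    """
    if not 0.0 <= keep_prob <= 1.0:
        raise ValueError("keep_prob must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    randomized = []
    for value in values:
        if rng.random() < keep_prob:
            randomized.append(value)
        else:
            alternatives = [c for c in categories if c != value]
            # With a single category there is nothing to swap to.
            randomized.append(rng.choice(alternatives) if alternatives else value)
    return randomized


if __name__ == "__main__":
    # Hypothetical categorical column.
    column = ["renter", "owner", "owner", "renter", "other", "owner"]
    levels = ["renter", "owner", "other"]
    print(pram(column, levels, keep_prob=0.8))
```

A lower `keep_prob` perturbs more values, which weakens exact matches against the original records but also distorts the column's distribution.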