CRC Research Acceleration Bundle

The Collaborative Research Cycle (CRC) seeks to equip the research community with resources to explore, evaluate, and discuss deidentification approaches.

Contents:

- Benchmark Data
- Deidentified Data Archives
- Deidentification Algorithm Summary Table
- Meta-analysis Tutorial Notebooks

Benchmark Data

The Collaborative Research Cycle uses the NIST Excerpts Benchmark Data as the target data for this program. All deidentification techniques in our directory have been run on this input data, and the resulting examples of deidentified data are available in the archive below.

The ACS Data Excerpts include three benchmark datasets: the Massachusetts data is from north of Boston, the Texas data is from near Dallas, and the National data is a collection of communities from around the nation. The data is derived from the 2019 American Community Survey; the 24 features in the complete schema were chosen because they capture many of the complexities of real-world data while still being small and simple enough to make more formal analysis feasible.

The data folder includes lovely postcard documentation about the communities and a JSON data dictionary to make it easy to configure your privacy technique. The usage guidance section in the readme has helpful configuration hints (watch out for 'N').
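As an illustration of why the 'N' hint matters, here is a minimal pandas sketch. It assumes that 'N' marks a not-applicable entry in an otherwise numeric column; the readme's usage guidance is the authoritative source for the actual rule. The three sample rows are invented, and the column names are used only for illustration.

```python
import io
import pandas as pd

# Invented three-row excerpt; the real files carry 24 features.
# Assumption: 'N' marks "not applicable" in an otherwise numeric column.
sample_csv = io.StringIO(
    "AGEP,PINCP\n"
    "34,52000\n"
    "7,N\n"
    "61,18000\n"
)

# Read every column as text so 'N' is preserved instead of forcing a dtype guess.
df = pd.read_csv(sample_csv, dtype=str, keep_default_na=False)

# Keep an explicit flag for the 'N' rows, then convert the remaining values to numbers.
df["PINCP_is_N"] = df["PINCP"] == "N"
df["PINCP_num"] = pd.to_numeric(df["PINCP"].where(~df["PINCP_is_N"]), errors="coerce")

print(df)
```

Keeping the flag column separate from the numeric column lets a privacy technique treat "not applicable" as its own category rather than as a missing number.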

Additionally, data from 2018 has been provided as a control; this may be useful for configuring differentially private algorithms or calibrating privacy metrics. The 2018 data covers the same schema (features and geography) but does not share any individuals with the 2019 data.

Deidentified Data Archives

Download the Research Acceleration Bundle and explore! This archive of deidentified data samples and evaluation metric results provides a broad, representative sample of data deidentification as a whole.

The target data for this project are the ACS Data Excerpts, curated data drawn from the American Community Survey. The archive consists of deidentified versions of the Excerpts data as generated by a wide variety of deidentification algorithms, libraries, and privacy definitions. Check out our Algorithm Summary Table for a high-level glimpse of the current archive contents.

Deidentification Algorithm Summary Table

This table provides a very high-level summary of the deidentification algorithms in our archive. Unique Exact Match (UEM) is a simple privacy metric that counts the percentage of singleton records in the target that are also present in the deidentified data; these are uniquely identifiable individuals who leaked through the deidentification process. The Subsample Equivalent (SsE) utility metric uses an analogy between deidentification error and sampling error to communicate utility; a score of 5% indicates that the edit distance between the target and deidentified data distributions is similar to the sampling error induced by randomly discarding 95% of the data. Edit distance is based on the k-marginal metric for sparse distributions.
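The two metrics can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not the SDNist implementation: `k_marginal_distance` averages the total-variation distance over every k-feature marginal as a stand-in for the k-marginal edit distance, and `subsample_equivalent` picks, from a fixed list of candidate percentages, the subsample size whose sampling error is closest to the deidentification error. All function names, the candidate percentages, and the toy records are invented for this example.

```python
import random
from collections import Counter
from itertools import combinations


def unique_exact_match(target, deid):
    """Percent of singleton target records that also appear in the deid data."""
    counts = Counter(target)
    singletons = [rec for rec, n in counts.items() if n == 1]
    if not singletons:
        return 0.0
    deid_records = set(deid)
    leaked = sum(1 for rec in singletons if rec in deid_records)
    return 100.0 * leaked / len(singletons)


def k_marginal_distance(a, b, k=2):
    """Mean total-variation distance over every k-feature marginal (0 = identical)."""
    distances = []
    for cols in combinations(range(len(a[0])), k):
        pa = Counter(tuple(rec[c] for c in cols) for rec in a)
        pb = Counter(tuple(rec[c] for c in cols) for rec in b)
        l1 = sum(abs(pa[key] / len(a) - pb[key] / len(b)) for key in set(pa) | set(pb))
        distances.append(l1 / 2)
    return sum(distances) / len(distances)


def subsample_equivalent(target, deid, percents=(1, 5, 10, 20, 40, 60, 80), seed=0):
    """Percent of the target whose random-subsample error is closest to the deid error."""
    rng = random.Random(seed)
    deid_error = k_marginal_distance(target, deid)
    best_gap, best_percent = None, None
    for pct in percents:
        size = max(1, round(len(target) * pct / 100))
        sample_error = k_marginal_distance(target, rng.sample(target, size))
        gap = abs(sample_error - deid_error)
        if best_gap is None or gap < best_gap:
            best_gap, best_percent = gap, pct
    return best_percent


# Toy records: each tuple is one individual with three categorical features.
target = [("a", 1, "x"), ("a", 1, "x"), ("b", 2, "y"), ("c", 3, "z")]
deid = [("a", 1, "x"), ("b", 2, "y"), ("d", 4, "w")]

# Singletons in the target are ("b", 2, "y") and ("c", 3, "z"); only the first leaks.
print(unique_exact_match(target, deid))      # 50.0
print(k_marginal_distance(target, target))   # 0.0
print(subsample_equivalent(target, deid))
```

On real data the subsample comparison would normally be averaged over several random draws; a single seeded draw is used here only to keep the sketch short.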

Note that this isn't a leaderboard: you can select any column in the dropdown menu and reorder the table according to that column. Algorithms with high utility (high SsE) may have a lot of privacy leakage (high UEM), while algorithms with low privacy leakage (low UEM) may have poor utility (low SsE). Algorithms that have only been run on small subsets of the schema may perform differently on larger feature spaces (Avg. Feat. Space Size). And, in general, SsE and UEM are very simple, reductive metrics. If you're curious about a deidentification method, we recommend checking out its full evaluation results in the metareports archive.

| Library | Algorithm | Team | # Entries | # Feat. sets | Avg. Feat. Space Size | ε values | Utility: SsE (%) | Privacy Leak: UEM (%) |
|---|---|---|---|---|---|---|---|---|
| rsynthpop | catall | Rsynthpop-categorical | 2 | 1 | 2e+08 | 100.0 | 65.0 | 81.33 |
| rsynthpop | ipf_NonDP | Rsynthpop-categorical | 1 | 1 | 3e+08 | | 50.0 | 15.82 |
| rsynthpop | catall_NonDP | Rsynthpop-categorical | 1 | 1 | 2e+08 | | 50.0 | 63.37 |
| tumult | DPHist | CRC | 2 | 2 | 6e+07 | 10.0 | 45.5 | 99.91 |
| subsample | subsample_40pcnt | CRC | 15 | 5 | 4e+25 | | 40.67 | 39.93 |
| rsynthpop | cart | CRC | 12 | 4 | 3e+20 | | 40.0 | 16.14 |
| UTDallas-AIFairness | smote | UT Dallas DSPL | 9 | 1 | 2e+26 | | 40.0 | 17.51 |
| sdcmicro | pram | CRC | 12 | 3 | 1e+11 | | 38.33 | 56.27 |
| smartnoise-synth | aim | CRC | 16 | 6 | 4e+25 | 10.0 | 34.69 | 10.38 |
| Anonos Data Embassy SDK | Anonos Data Embassy SDK | Anonos | 3 | 1 | 2e+26 | | 30.0 | 0.01 |
| MostlyAI SD | MostlyAI SD | MOSTLY AI | 6 | 1 | 2e+26 | | 30.0 | 0.01 |
| aindo-synth | aindo-synth | Aindo | 3 | 1 | 2e+26 | | 30.0 | 0.01 |
| smartnoise-synth | aim | CRC | 16 | 6 | 4e+25 | 5.0 | 28.12 | 9.77 |
| rsynthpop | cart | CBS-NL | 3 | 1 | 2e+08 | | 21.67 | 28.6 |
| smartnoise-synth | mst | CRC | 16 | 6 | 2e+19 | 10.0 | 19.69 | 7.89 |
| rsynthpop | ipf | CRC | 3 | 1 | 2e+08 | 100.0 | 18.33 | 16.97 |
| Genetic SD | Genetic SD | DataEvolution | 10 | 2 | 9e+25 | 10.0 | 17.5 | 0.18 |
| rsynthpop | ipf | CRC | 3 | 1 | 2e+08 | 10.0 | 16.67 | 14.29 |
| smartnoise-synth | mst | CRC | 18 | 6 | 4e+25 | 5.0 | 14.44 | 7.42 |
| smartnoise-synth | aim | CRC | 16 | 6 | 4e+25 | 1.0 | 12.19 | 7.22 |
| ydata-sdk | YData Fabric Synthesizers | YData | 33 | 4 | 1e+26 | | 11.85 | 9.7 |
| LostInTheNoise | MWEM+PGM | LostInTheNoise | 1 | 1 | 5e+26 | 1.0 | 10.0 | 0.0 |
| smartnoise-synth | mst | CRC | 16 | 6 | 2e+19 | 1.0 | 10.0 | 5.81 |
| synthcity | bayesian_network | CRC | 12 | 4 | 6e+25 | | 7.17 | 17.86 |
| Genetic SD | Genetic SD | DataEvolution | 9 | 2 | 9e+25 | 1.0 | 5.56 | 0.04 |
| ydata-synthetic | ctgan | DCAI Community | 1 | 1 | 6e+14 | | 5.0 | 0.33 |
| subsample | subsample_5pcnt | CRC | 4 | 4 | 1e+26 | | 5.0 | 4.97 |
| rsynthpop | ipf | Rsynthpop-categorical | 1 | 1 | 2e+08 | 2.0 | 5.0 | 10.68 |
| Sarus SDG | Sarus SDG | Sarus | 1 | 1 | 2e+08 | 10.0 | 5.0 | 13.99 |
| synthcity | privbayes | CRC | 9 | 3 | 1e+11 | 1.0 | 4.89 | 5.17 |
| sdv | ctgan | CBS-NL | 6 | 1 | 2e+26 | | 4.33 | 0.0 |
| smartnoise-synth | mwem | CRC | 5 | 5 | 2e+11 | 10.0 | 4.2 | 3.52 |
| synthcity | privbayes | CRC | 9 | 3 | 1e+11 | 10.0 | 3.78 | 4.32 |
| smartnoise-synth | pacsynth | CRC | 9 | 3 | 1e+11 | 10.0 | 3.44 | 8.9 |
| sdv | tvae | CRC | 13 | 4 | 6e+25 | | 3.15 | 5.76 |
| sdv | fastml | CRC | 4 | 2 | 9e+24 | | 3.0 | 1.15 |
| synthcity | tvae | CRC | 12 | 4 | 6e+25 | | 3.0 | 4.69 |
| smartnoise-synth | patectgan | CRC | 12 | 4 | 2e+19 | 10.0 | 3.0 | 5.66 |
| sdcmicro | kanonymity | CRC | 21 | 3 | 1e+11 | | 2.67 | 23.41 |
| rsynthpop | ipf | CRC | 3 | 1 | 2e+08 | 2.0 | 2.33 | 3.05 |
| smartnoise-synth | patectgan | CRC | 18 | 6 | 4e+25 | 5.0 | 2.33 | 4.49 |
| smartnoise-synth | pacsynth | CRC | 15 | 5 | 2e+11 | 5.0 | 2.13 | 4.32 |
| synthcity | pategan | CRC | 12 | 4 | 6e+25 | 10.0 | 2.0 | 2.59 |
| synthcity | adsgan | CCAIM | 1 | 1 | 5e+26 | | 1.0 | 0.0 |
| sdv | copula-gan | CRC | 1 | 1 | 3e+24 | | 1.0 | 0.0 |
| sdv | ctgan | CRC | 6 | 1 | 5e+26 | | 1.0 | 0.0 |
| synthcity | dpgan | CCAIM | 1 | 1 | 5e+26 | 1.0 | 1.0 | 0.0 |
| sdv | gaussian-copula | Blizzard Wizard | 2 | 1 | 5e+11 | | 1.0 | 0.0 |
| synthcity | pategan | CCAIM | 1 | 1 | 5e+26 | 1.0 | 1.0 | 0.0 |
| rsynthpop | catall | Rsynthpop-categorical | 2 | 1 | 2e+08 | 1.0 | 1.0 | 0.01 |
| smartnoise-synth | patectgan | CRC | 11 | 4 | 2e+19 | 1.0 | 1.0 | 0.02 |
| smartnoise-synth | pacsynth | CRC | 4 | 2 | 1e+08 | 1.0 | 1.0 | 0.38 |
| sdv | gaussian-copula | CommunityData | 1 | 1 | 2e+08 | | 1.0 | 0.52 |
| subsample | subsample_1pcnt | CRC | 4 | 4 | 1e+26 | | 1.0 | 0.98 |
| synthcity | adsgan | CRC | 12 | 4 | 6e+25 | | 1.0 | 1.54 |
| rsynthpop | ipf | CRC | 1 | 1 | 2e+08 | 1.0 | 1.0 | 1.72 |
| synthcity | pategan | CRC | 12 | 4 | 6e+25 | 1.0 | 1.0 | 1.99 |
| rsynthpop | ipf | Rsynthpop-categorical | 1 | 1 | 2e+08 | 1.0 | 1.0 | 3.53 |
| rsynthpop | catall | Rsynthpop-categorical | 2 | 1 | 2e+08 | 10.0 | 1.0 | 60.39 |
| tumult | DPHist | CRC | 1 | 1 | 1e+06 | 1.0 | 1.0 | 74.47 |
| tumult | DPHist | CRC | 1 | 1 | 1e+06 | 2.0 | 1.0 | 88.39 |
| tumult | DPHist | CRC | 1 | 1 | 1e+06 | 4.0 | 1.0 | 98.05 |
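For readers who prefer to explore the summary table offline, the dropdown's re-sorting can be approximated with pandas. The sketch below copies a handful of rows from the table; the short column names (`epsilon`, `sse`, `uem`) and the filter thresholds are choices made for this example, not part of the bundle.

```python
import pandas as pd

# A handful of rows copied from the summary table; epsilon is None where the table has no value.
rows = [
    ("rsynthpop", "catall", 100.0, 65.0, 81.33),
    ("tumult", "DPHist", 10.0, 45.5, 99.91),
    ("subsample", "subsample_40pcnt", None, 40.67, 39.93),
    ("smartnoise-synth", "aim", 10.0, 34.69, 10.38),
    ("MostlyAI SD", "MostlyAI SD", None, 30.0, 0.01),
    ("Genetic SD", "Genetic SD", 10.0, 17.5, 0.18),
    ("sdcmicro", "kanonymity", None, 2.67, 23.41),
]
table = pd.DataFrame(rows, columns=["library", "algorithm", "epsilon", "sse", "uem"])

# Re-sort by any column, as the dropdown does; here, lowest privacy leak first.
by_uem = table.sort_values("uem", ascending=True)

# Rows that pair relatively high utility with low leakage (thresholds are arbitrary).
balanced = table[(table["sse"] >= 15) & (table["uem"] <= 1)]

print(by_uem[["algorithm", "sse", "uem"]])
print(balanced["algorithm"].tolist())
```

Sorting by different columns shows the trade-off described above: the row with the highest SsE in this excerpt also has one of the highest UEM values.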

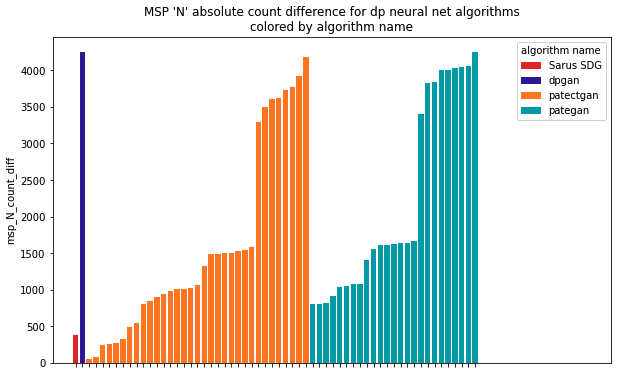

Tutorial notebooks

Version 1.1 of the CRC Research Acceleration Bundle includes a notebooks directory containing a complete set of tools that make the archive widely accessible for programmatic navigation and analysis.

Notebook Utility List (libs)

The notebooks folder includes a library of utilities to assist with navigating the deidentified data archive (Data and Metrics Bundle):

- index.csv file with metadata (library, algorithm type, privacy type, research paper DOI, parameter settings, etc.) on every technique and deidentified data sample in the archive.
- Utility to provide easy access to the index.csv file, including a help function with metadata definitions.
- Utility to assist navigation through metrics available in report.json files.
- Utility to assist navigation through the archive file hierarchy.
- Utility to easily generate bar charts and scatterplots with configurable colored highlighting by deid technique metadata.
- Utility to make human-readable display labels for deid samples.
- Utility to configure and display collections of metric visualizations from the SDNist reports.

For newcomers to python/pandas:

Welcome! We also teach everything you need to know about pandas dataframes:

- Reading data from csv file, creating new data frames

- Displaying excerpts of data frames

- Adding new columns, updating and operating on columns

- Filtering rows, iterating over rows

- Sorting rows based on selected columns
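The operations listed above look roughly like this in pandas. The sketch uses a tiny invented CSV held in memory rather than a file from the bundle, so the column names and values are illustrative only.

```python
import io
import pandas as pd

# Invented four-row CSV standing in for a data file on disk.
csv_text = io.StringIO("AGEP,SEX,PINCP\n34,1,52000\n7,2,0\n61,2,18000\n45,1,73000\n")

df = pd.read_csv(csv_text)                                  # reading data from a csv file
small = pd.DataFrame({"id": [1, 2], "label": ["a", "b"]})   # creating a new data frame

print(df.head(2))                                           # displaying an excerpt

df["is_adult"] = df["AGEP"] >= 18                           # adding a new column
df["PINCP"] = df["PINCP"] / 1000                            # updating a column (income in thousands)

adults = df[df["is_adult"]]                                 # filtering rows
for idx, row in adults.iterrows():                          # iterating over rows
    print(idx, row["AGEP"], row["PINCP"])

ranked = adults.sort_values("PINCP", ascending=False)       # sorting rows on a selected column
print(ranked)
```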

Introduction Tutorial:

We teach all the basics for performing meta-analysis on the deidentified data archive:

- Setup notebook.

- Load deid datasets index file (index.csv).

- Select specific deid datasets from the index dataframe.

- Working with the deidentified data csv files.

- Working with the target data csv files.

- Compare target and deid datasets.

- Use index.csv to highlight plots by algorithm properties.

- Access SDNist evaluation reports.

- Show relationship between two evaluation metrics.

- Identify specific data samples of interest.

- Show images from SDNist evaluation reports.

- Get evaluation metrics for specific samples of interest.
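A few of the steps above (loading the index, selecting samples, comparing target and deid data) can be sketched as follows. This is an assumption-laden illustration: the index columns, file names, and data values are hypothetical stand-ins, and the real index.csv and the notebook utilities may use different names.

```python
import pandas as pd

# Hypothetical stand-in for index.csv; the real column names may differ.
index = pd.DataFrame({
    "library": ["smartnoise-synth", "smartnoise-synth", "sdv", "subsample"],
    "algorithm": ["aim", "mst", "ctgan", "subsample_40pcnt"],
    "privacy_type": ["dp", "dp", "non_dp", "non_dp"],
    "epsilon": [10.0, 10.0, None, None],
    "data_path": ["aim_e10.csv", "mst_e10.csv", "ctgan.csv", "sub40.csv"],
})

# Select specific deid datasets from the index dataframe.
dp_runs = index[(index["privacy_type"] == "dp") & (index["epsilon"] == 10.0)]

# Toy target and deid samples in place of the csv files that data_path would point to.
target = pd.DataFrame({"SEX": [1, 2, 2, 1, 2, 2]})
deid = pd.DataFrame({"SEX": [1, 1, 2, 1, 2, 1]})

# Compare target and deid: the share of each SEX value in both datasets.
comparison = pd.DataFrame({
    "target": target["SEX"].value_counts(normalize=True),
    "deid": deid["SEX"].value_counts(normalize=True),
}).fillna(0.0)
comparison["abs_diff"] = (comparison["target"] - comparison["deid"]).abs()

print(dp_runs[["algorithm", "data_path"]])
print(comparison)
```

In the actual notebooks, each selected row would be used to read the corresponding deidentified csv file and its evaluation report rather than the toy frames shown here.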
