Temperature interpolation#

Classes used for interpolation#

Instead of performing extrapolation, we can also perform interpolation. Classes already exist for this, namely ExtrapWeightedModel, InterpModel, and MBARModel. The first implements extrapolation from two points weighted with a Minkowski-like distance, the second polynomial interpolation from any number of points, and the last uses reweighting with MBAR from multiple points.

Demonstrations of creating and using InterpModel and MBARModel classes are shown below.

The InterpModel will essentially just stitch together multiple ExtrapModel objects generated at multiple \(\beta\) values by factory_extrapmodel. The InterpModel class is actually based on a very general and helpful class called StateCollection, which just holds a list of ExtrapModel objects. InterpModel just uses this list of objects in a specific way to implement piecewise polynomial interpolation.

The functions below conveniently pull a specified number of \(\beta\) values out of a range and create ExtrapModel objects for each \(\beta\) value in a provided list. These will be very convenient as we generate data and models at different states.

%matplotlib inline

import cmomy

import matplotlib.pyplot as plt

import numpy as np

import xarray as xr

# Import idealgas module

import thermoextrap as xtrap

from thermoextrap import idealgas

# seeded random number generator

rng = cmomy.random.default_rng(seed=0)

def get_betas(nbeta=4, betamin=0.1, betamax=10.0):

dbeta = (betamax - betamin) / (nbeta - 1)

return np.array([betamin + dbeta * i for i in range(nbeta)])

def get_xems(betas, nconfig=100000, npart=1000, order=2, vol=1):

xems = []

for beta in betas:

xdata, udata = (

xr.DataArray(_, dims="rec")

for _ in idealgas.generate_data((nconfig, npart), beta=beta, vol=vol)

)

xem = xtrap.beta.factory_extrapmodel(

beta=beta,

data=xtrap.DataCentralMomentsVals.from_vals(

xv=xdata, uv=udata, central=True, order=order

),

)

xems.append(xem)

return xems

# Define a range of beta values to test predictions at

betas = np.arange(0.1, 10.0, 0.5)

# Grab betas from either end, as well as data and ExtrapModel objects at reference betas

beta_samp = get_betas(2)

xems = get_xems(beta_samp)

# Create models

xemi = xtrap.InterpModel(xems)

xmbar = xtrap.MBARModel(xems)

# Make rpedictions with each type of interpolative model

fig, ax = plt.subplots()

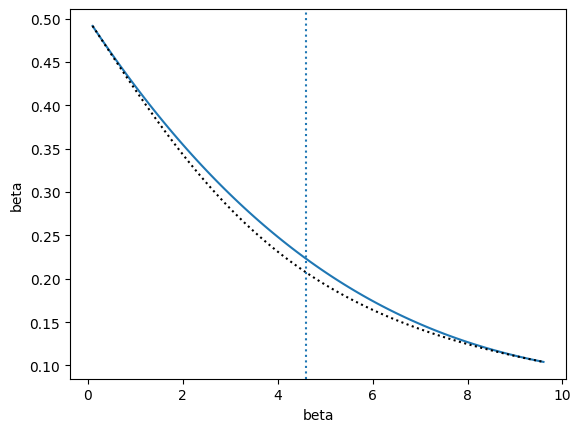

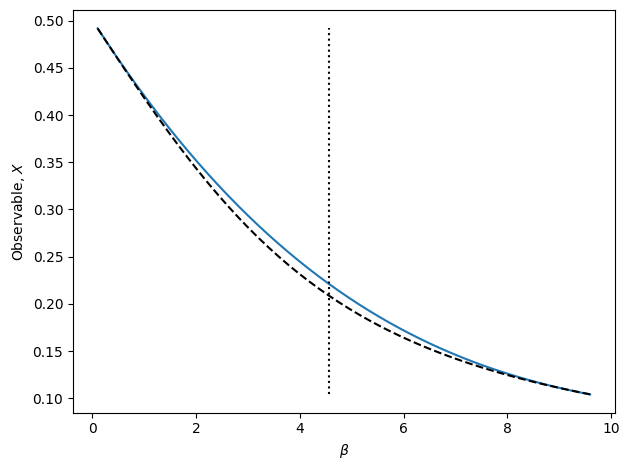

out = xemi.predict(betas, order=1)

err = xemi.resample(sampler={"nrep": 100}).predict(betas, order=2).std("rep")

plt.errorbar(betas, out, yerr=err, label="interp poly")

xmbar.predict(betas).plot(label="mbar")

# And plot the true values

plt.plot(betas, idealgas.x_ave(betas), "k--", label="True", zorder=3)

ax.set_xlabel(r"$\beta$")

ax.set_ylabel(r"$\langle x \rangle$")

ax.legend()

<matplotlib.legend.Legend at 0x142f326f0>

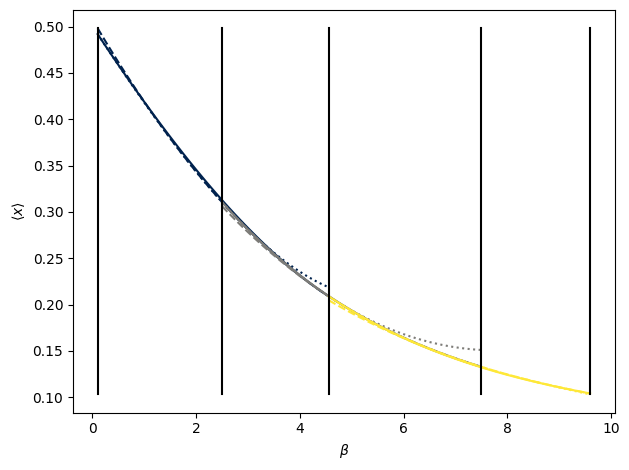

The above compares polynomial interpolation and MBAR (really just BAR because using only 2 points). Both methods are used to interpolate between the two extreme values of beta. In both cases, the same data from the two edge points is used, making use of only derivatives up to 1st order for interpolation. The error bars are one standard deviation from bootstrap resampling of the predictions of each model. True values are represented by the black dashed line.

We don’t really expect MBAR to do any better than perturbation because it is using the MBAR weights between states to reweight the perturbation theory estimates. As such, we can see that it is “sticky” in that it tries to keep using a single point until it suddenly jumps to the next one. This is a symptom of the fact that the perturbation theory estimates themselves plateau. Such plateaus represent poor overlap, resulting in large free energy differences and MBAR weights that essentially act as step functions (i.e. you just pick whichever point has lower free energy over the entire interval). Extrapolation is almost always better, but note that it’s predictions vary more with sampling, especially if you go to higher order.

# Adding an additional reference beta value

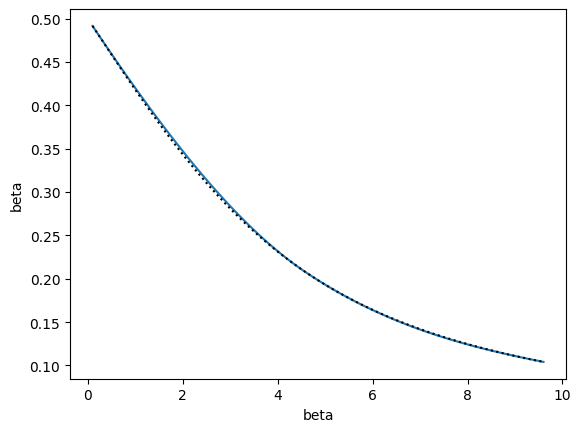

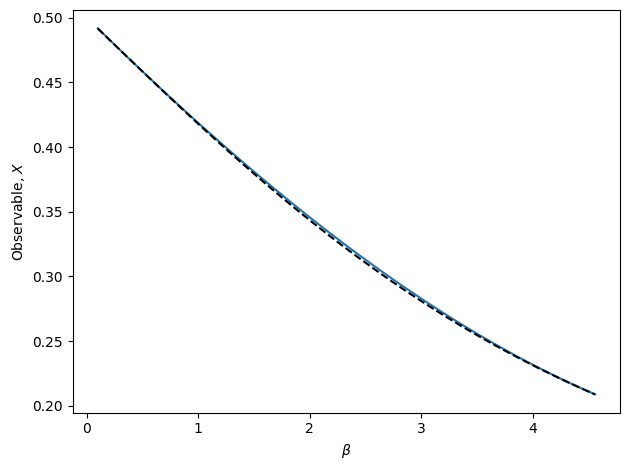

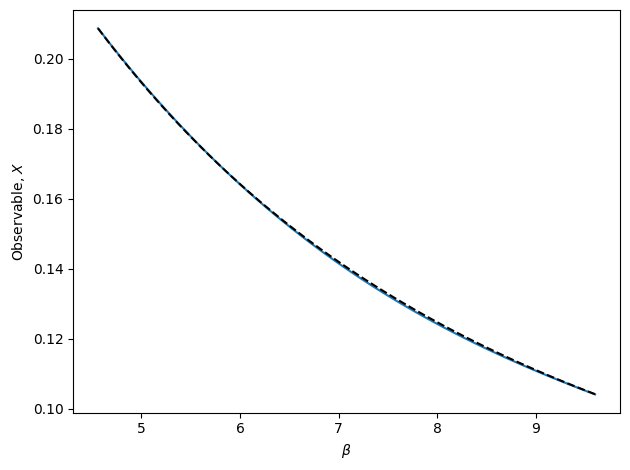

betas_samp = get_betas(3)

xems = get_xems(betas_samp)

xemi = xtrap.InterpModel(xems)

xemi.predict(betas, order=1).plot()

xemw = xtrap.ExtrapWeightedModel(xems)

xemw.predict(betas, order=1).plot()

plt.plot(betas, idealgas.x_ave(betas), "k--", label="True", zorder=3)

[<matplotlib.lines.Line2D at 0x142d39be0>]

Recursive interpolation#

Also included in the library is a function to perform recursive interpolation to achieve estimates over an interval to a specified error tolerance. The model class that does this is called RecursiveInterp in the recursive_interp module. One of the inputs to this class is an interpolation class object (trained or not), such as InterpModel. This function continues picking new points and dividing the original interval until all points satisfy the error tolerance, which is represented as a relative error. Details of the algorithm are provided below.

A key feature of RecursiveInterp is a method called get_data(). Simply put, this obtains data if provided a value of the variable over which we’re extrapolating. By default, this method is just set up to return data at a specified \(\beta\) for the 1D ideal gas model, so we don’t need to touch it. However, in the paper we modify this method to load in data from an MD simulation of water closest to the specified state point. If you want to generate new MD or MC data on the fly, you will need to create a new class that inherits from RecursiveInterp and just change the get_data() method to run the appropriate simulation and load in the data you need.

from thermoextrap import recursive_interp

# Generate coefficients

derivs = xtrap.beta.factory_derivatives()

# Create the recursive model

intPpiecewise = recursive_interp.RecursiveInterp(

xtrap.InterpModel, derivs, [betas[0], betas[-1]], max_order=1, tol=0.003

)

# Train it recursively until error tolerance reached

# Turn off verbose or doPlot if don't want to see progress of algorithm

# plotCompareFunc provides the true values to compare to in the figure

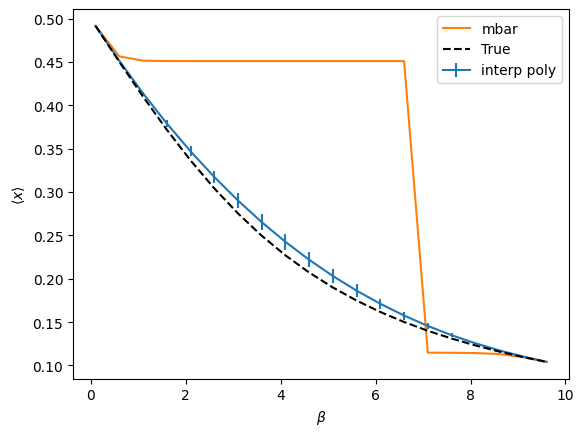

intPpiecewise.recursive_train(

betas[0], betas[-1], verbose=True, do_plot=True, plot_func=idealgas.x_ave

)

# Obtain predictions from our model, which will be based on piecewise interpolating polynomials

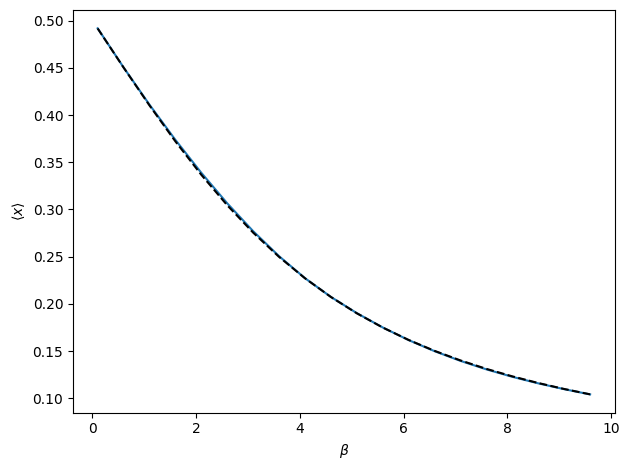

intPpredict = intPpiecewise.predict(betas)

# Plot to compare against true average versus beta for ideal gas model

plt.ion()

interpFig, interpAx = plt.subplots()

interpAx.plot(betas, intPpredict)

interpAx.plot(betas, idealgas.x_ave(betas), "k--", zorder=3)

interpAx.set_ylabel(r"$\langle x \rangle$")

interpAx.set_xlabel(r"$\beta$")

interpFig.tight_layout()

plt.show()

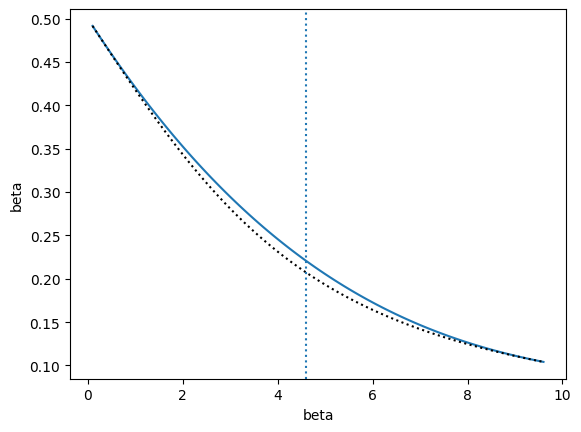

The above cell runs an automated algorithm for performing interpolation recursively. In the above example, polynomial interpolation is used. This is recommended, but the algorithm may also use weighted extrapolation or MBAR. In the figure, the true observable is shown as the dashed black line. The blue line is final result of the recursive algorithm. Note that there is no bootstrap method for RecursiveInterp since the algorithm guarantees the uncertainty to be within the tolerance.

A sketch of the recursive algorithm is as follows:

Perform interpolation between edge points

Only uses up to maximum desired order of derivative information. In the simple ideal gas model, it requires prohibitive amounts of sampling to get good accuracy of derivatives above order 2. If using polynomial interpolation, second derivatives will give us order 5 polynomials. In practice, this probably is not necessary, so settling for the much more accurate third order interpolating polynomials, which only make use of 1st derivatives.

Needs input data at each edge point or must modify the

RecursiveInterp.get_data()method in the RecursiveInterp class to read in data or perform simulations. As a default, this class just uses the toy ideal gas model to generate data.

Calculate bootstrapped uncertainty over whole interval

The region between the edge points is gridded up with the interpolation model used to predict the value at each point. This procedure is bootstrapped by resampling the data to get the standard deviation of each predicted value at each grid point. The absolute relative error is defined as \(\frac{\sigma_x}{|x|}\). The maximum relative error over all grid points and observable elements (if the observable is a vector) is found and compared to the desired tolerance.

Check if maximum uncertainty is within tolerance

If the maximum absolute relative error within the region is lower than the tolerance, then no new simulations are needed. If it is larger than the tolerance, a new state-point is selected where the absolute relative error is a maximum.

Add state point if necessary and recurse

If the tolerance is not met, a state point is added as described above. The algorithm returns to step one for each subinterval created, with new data only generated at the new point.

Though the algorithm has met the specified tolerance, you may still want to manually add more points. This is easy with the RecursiveInterp.sequential_train() method.

intPpiecewise.sequential_train([2.5, 7.5], verbose=True)

It is a good idea when using the recursive algorithm to visually check for consistency.

# Can also check for consistency of local curvature

# Not implemented as an "optimization" rule in the recursive interpolation procedure

# But do have function to do statistical and visual check

# Using the model we just trained above, so must run cell above before this one

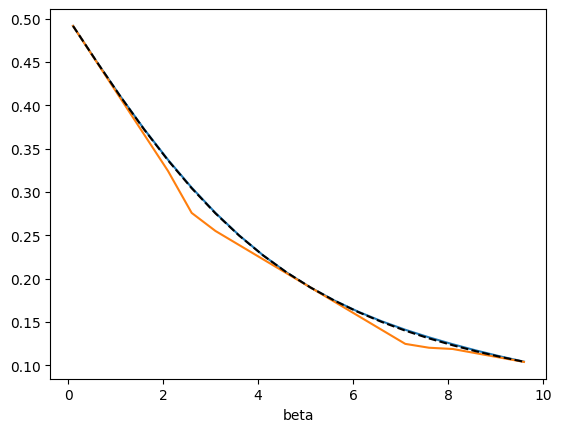

checkPvals = intPpiecewise.check_poly_consistency(do_plot=True)

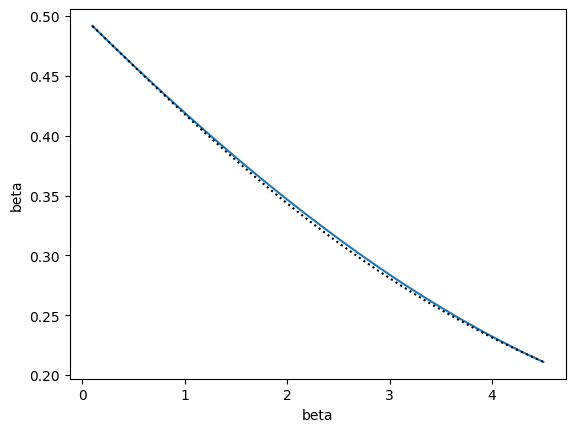

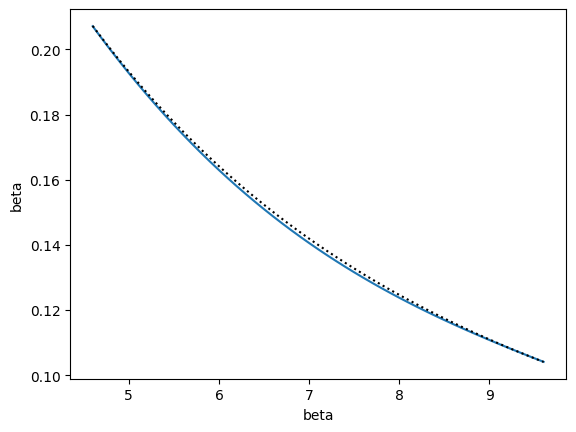

In the above plot, interpolating polynomials are shown for sliding windows of three state points used in the recursive interpolation model. Colors change for each window. Within a window (same color) the interpolating polynomial for the lower subregion is shown with a dotted line, that for the upper subregion with a dashed line, and the whole window region using the two outermost edge points with a solid line. Outside of the region they were fitted over, the polynomials show large deviations from the true values. For interpolation, however, the polynomials all overlap quite well. By construction, the piecewise function created by the recursive procedure is continuous in both its value and first derivative at all points in the entire interval. Using higher order derivative information would result in continuity in even higher derivatives, but likely even more divergent behavior outside of the interpolated range.

The point of the above consistency check is to see if the local polynomials agree within each sliding window. If they do, then that means the local curvature is the same over that region and is captured by the order of polynomial that is fit there. To this end, the polynomial coefficients are also bootstrapped and, assuming Normal distributions, p-values are computed for the null hypothesis that the coefficients for each set of sub-regions and the full region within a window are the same. Since we want the polynomials to be the same, we don’t want small p-values, so the test is of limited usefulness as larger p-values don’t necessarily imply greater similarity. A better statistical test is thus needed. However, the visualization provides a quick visual check/rule of thumb to gauge the consistency of the local curvature and thus convergence of the algorithm. This is similar to the idea of generating overlapping distributions for umbrella sampling.

Adaptive interpolation#

While named differently, this is essentially a different implementation of the algorithm described above in adaptive_interp. It is more modular, extensible, and has more flexible features, but has not been tested or used as extensively. Specifically, there is no longer a class to keep track of the interpolation (that gets provided as the factory_statecollection argument to each method called in adaptive_interp) and there is a customizable callback structure that handles tolerance checking, plotting, and outputting incremental results. Further, the thermoextrap.recursive_interp.RecursiveInterp.get_data() is replaced by passing a function factory_state as an argument to all of the methods in adaptive_interp. For an example factory_state method, see factory_state_idealgas(), where an example callback for plotting may also be found.

The major technical difference is that, instead of automatically creating a fine grid of new alpha values to select a new point based on maximum uncertainty, the grid is passed as an argument. The minimum and maximum values are treated as the initial state points to use. This gives the user finer control over how the search is performed. Another measure of control is provided by the alpha_tol argument, which specifies the minimum distance of a new point from previous alpha values.

Like with recursive_train() and sequential_train(), there are two training modes. The first is identical to the recursive algorithm described previously and is called train_recursive().

from thermoextrap import adaptive_interp

# Create a fine grid of points to select from (happens automatically without user control in RecursiveInterp class)

alphas = np.arange(betas[0], betas[-1] + 0.05, 0.1)

# Define keyword arguments to be passed to the factory_state method for creating new state information

# (formerly would be arguments to get_data)

state_kws = {"order": 1, "nrep": 100, "rep_dim": "rep"}

states_recurse, info_recurse = adaptive_interp.train_recursive(

alphas=alphas,

factory_state=adaptive_interp.factory_state_idealgas,

factory_statecollection=xtrap.InterpModel,

state_kws=state_kws,

predict_kws={"order": 1},

reduce_dim="rep",

alpha_tol=0.1,

tol=0.003,

callback=adaptive_interp.callback_plot_progress,

)

The other training option, train_iterative(), also adds states as long as the relative error is greater than tol, but selects new states based on a different rule. A new state is also inserted at an alpha value from alphas that is at least alpha_tol away from existing states and has a maximum uncertainty. The difference from the recursive strategy is that the point is chosen globally from all alphas rather than choosing a new point for each interval between current points.

Note that if you want similar behavior as above (and you probably do), then should pass a piecewise model, like InterpModelPiecewise. Otherwise, the InterpModel class will build a model using all points and derivatives to fit a single polynomial rather than a piecewise one. With the recursive strategy, piecewise polynomials are naturally used as a result of considering each interval separately.

smodel_iter, info_iter = adaptive_interp.train_iterative(

alphas=alphas,

factory_state=adaptive_interp.factory_state_idealgas,

# Note: for things to be similar between this and

# recursive with InterpModel, must use InterpModelPiecewise

factory_statecollection=xtrap.InterpModelPiecewise,

state_kws=state_kws,

predict_kws={"order": 1},

reduce_dim="rep",

alpha_tol=0.1,

tol=0.003,

callback=adaptive_interp.callback_plot_progress,

)