Temperature independent average observable#

We first extrapolate our ideal gas test system in temperature and compare to the exact result. We also compare results with finite numbers of samples to exact results for an infinite number of samples. This is possible because we can analytically calculate derivatives for the ideal gas model at any order rather than estimating them.

The first thing we want to do is define some parameters and generate data. We will define a reference inverse temperature, specific orders we want to extrapolate to, and create some reference data. The thermoextrap.idealgas.generate_data() function conveniently provides random samples of \(x\) and \(U\) data. This will be in the format of an array with nconfig elements, each being the average \(x\) value over \(N\) independent ideal gas particles. The relevant potential energy is an array of the same shape with a single entry for each configuration. More generally, the data that should be provided to the extrapolation/interpolation code can have any number of columns but should have a single row associated with each simulation snapshot and potential energy. This allows for simultaneous extrapolation of all points in RDFs or time correlation functions with the interparticle distance or time varying with column. Or for simultaneous extrapolation of multiple observables. Critical, however, is that the potential energy (or Hamiltonian) is that which is appropriate to the snapshot with the same index. So for dynamical quantities like time correlation functions, the appropriate Hamiltonian is that for the starting configuration. Another main point here is that the number of columns is arbitrary, with each extrapolated separately, but because of the way the code is vectorized you get much better efficiency by providing multiple columns rather than running the extrapolation multiple times for different observables.

For more information on the structure of data and other options for inputting data, please see the Data_Organization notebook.

%matplotlib inline

import cmomy

import matplotlib as mpl

import matplotlib.pyplot as plt

import numpy as np

# Import idealgas module

from thermoextrap import idealgas

# set random seed

rng = cmomy.random.default_rng(0)

# Define test betas and reference beta

betas = np.arange(0.1, 10.0, 0.5)

beta_ref = betas[11]

vol = 1.0

# Define orders to extrapolate to

orders = [1, 2, 4, 6]

order = orders[-1]

npart = 1000 # Number of particles (in single configuration)

nconfig = 100_000 # Number of configurations

# Generate all the data we could want

xdata, udata = idealgas.generate_data((nconfig, npart), beta_ref, vol)

All the code below relies on inputting xarray objects. This is easily applied.

import xarray as xr

xdata = xr.DataArray(xdata, dims=["rec"])

udata = xr.DataArray(udata, dims=["rec"])

We now want to create and train our extrapolation and perturbation models that we will compare. By default the ExtrapModel or other classes work with the simplest case of directly extrapolating an observable that is an average quantity and does not explicitly depend on temperature. Note that this covers quantities that include dependence on kinetic degrees of freedom, with the potential energy simply substituted for the full Hamiltonian (total energy). Or it works for extrapolating in temperature or pressure in the NPT ensemble - again you just have to insert the appropriate Hamiltonian instead of the potential energy. To be specific, that means that if we want to extrapolate with respect to \(\beta\) in the NPT ensemble, we need to provide \(U + pV\) instead of just \(U\). To extrapolate with respect to \(p\) in the NPT, we only provide \(\beta V\) at each snapshot. The math then works out the same way and all of this code can be re-used. We handle other cases, like explicit temperature dependence of the quantity to be averaged, in other notebooks.

import thermoextrap as xtrap

# First, create a model around the data - see "Data_Organization" notebook

data = xtrap.DataCentralMomentsVals.from_vals(

order=orders[-1], rec_dim="rec", xv=xdata, uv=udata, central=True

)

# Create extrapolation and perturbation models

xem = xtrap.beta.factory_extrapmodel(beta_ref, data)

xpm = xtrap.beta.factory_perturbmodel(beta_ref, uv=udata, xv=xdata)

Let’s test some predictions and look at the results. Notice that you can make predictions over scalars or arrays of values, but the extrapolation will only be 1D in the variable of interest (e.g. temperature, pressure, etc.). Extrapolations in multiple dimensions of state variables is not yet supported.

x = rng.random()

print(f"{x:.3f}")

0.045

# Make some predictions

print(

f"""

Extrapolation: {xem.predict(betas[0]).values:.3f}

Perturbation:, {xpm.predict(betas[0]).values:.3f}

"""

)

# By default, uses maximum order, which here is inaccurate due to limited sampling (see figure below)

# But can switch to any lower order if desired

# Remember, order doesn't matter for perturbation

print(

f"""

At lower order

--------------

Extrapolation: {xem.predict(betas[0], order=2).values:.3f}

Perturbation: {xpm.predict(betas[0]).values:.3f}

"""

)

# Can also make predictions for multiple values

print(

f"""

For multiple beta values

------------------------

Extrapolation: {xem.predict(betas[:4], order=2).values}

Perturbation: {xpm.predict(betas[:4]).values}

"""

)

Extrapolation: -23.750

Perturbation:, 0.199

At lower order

--------------

Extrapolation: 0.448

Perturbation: 0.199

For multiple beta values

------------------------

Extrapolation: [0.4484 0.4137 0.3811 0.3503]

Perturbation: [0.1986 0.1986 0.1986 0.1986]

All of the extrapolation/interpolation model classes come with a way to resample data efficiently to allow for bootstrapped uncertainty estimates. The thermoextrap.data.DataCentralMomentsVals.resample() function actually resamples the data and returns a new extrapolation/interpolation class object, meaning that the necessary coefficients are automatically recomputed. However, the coefficients are computed separately for the different bootstrapped samples, which appear as different entries in the “replication” or “rep” dimension. In what we show below, this makes it easy to create bootstrap resampled data, then compute statistics on the coefficients or the predictions using built in statistical functions in xarray (e.g., xarray.DataArray.mean() and xarray.DataArray.std()).

# Create a bootstrapped sample

boot_xem = xem.resample({"nrep": 100})

# Predict coefficients - these are derivatives divided by appropriate factorial, so Taylor series coefficients

boot_xem.coefs(order=2)

<xarray.DataArray (order: 3, rep: 100)> Size: 2kB

array([[ 0.1749, 0.1748, 0.1749, 0.1748, 0.1748, 0.1748, 0.1749,

0.1749, 0.1749, 0.1748, 0.1749, 0.1748, 0.1748, 0.1749,

0.1748, 0.1749, 0.1749, 0.1749, 0.1749, 0.1749, 0.1748,

0.1749, 0.1749, 0.1749, 0.1748, 0.1748, 0.1749, 0.1748,

0.1748, 0.1748, 0.1748, 0.1748, 0.1749, 0.1748, 0.1748,

0.1749, 0.1749, 0.1748, 0.1748, 0.1749, 0.1749, 0.1748,

0.1749, 0.1748, 0.1748, 0.1749, 0.1749, 0.1748, 0.1748,

0.1749, 0.1749, 0.1749, 0.1749, 0.1749, 0.1748, 0.1748,

0.1749, 0.1749, 0.1748, 0.1749, 0.1749, 0.1748, 0.1748,

0.1748, 0.1748, 0.1749, 0.1749, 0.1749, 0.1749, 0.1748,

0.1749, 0.1749, 0.1748, 0.1748, 0.1749, 0.1749, 0.1748,

0.1748, 0.1748, 0.1748, 0.1748, 0.1748, 0.1748, 0.1748,

0.1748, 0.1748, 0.1748, 0.1749, 0.1748, 0.1748, 0.1749,

0.1748, 0.1748, 0.1749, 0.1749, 0.1748, 0.1749, 0.1748,

0.1749, 0.1748],

[-0.0283, -0.0282, -0.0285, -0.0283, -0.0283, -0.0282, -0.0283,

-0.0282, -0.028 , -0.0281, -0.0282, -0.028 , -0.028 , -0.0281,

-0.0284, -0.0281, -0.0281, -0.0284, -0.0284, -0.0284, -0.0281,

-0.0282, -0.0282, -0.0282, -0.0283, -0.0284, -0.0281, -0.0282,

-0.0282, -0.0282, -0.0283, -0.0284, -0.0283, -0.0282, -0.028 ,

...

-0.0282, -0.0284, -0.0282, -0.0283, -0.0283, -0.0284, -0.0281,

-0.0283, -0.0284, -0.0282, -0.0282, -0.0281, -0.028 , -0.0282,

-0.0283, -0.0284, -0.0286, -0.0284, -0.0283, -0.0284, -0.0285,

-0.0282, -0.028 , -0.0282, -0.0283, -0.0283, -0.0283, -0.0279,

-0.0282, -0.0282],

[ 0.0039, 0.0035, 0.0045, 0.004 , 0.004 , 0.0035, 0.0038,

0.0049, 0.0032, 0.0036, 0.0034, 0.0041, 0.0044, 0.0039,

0.0041, 0.0046, 0.0041, 0.0035, 0.0035, 0.0035, 0.0043,

0.004 , 0.0033, 0.0048, 0.0038, 0.0038, 0.0034, 0.0041,

0.0035, 0.0042, 0.005 , 0.0038, 0.0054, 0.0037, 0.0048,

0.0039, 0.0039, 0.0047, 0.0048, 0.0043, 0.004 , 0.0041,

0.0041, 0.0032, 0.0041, 0.0047, 0.0044, 0.0044, 0.0037,

0.0042, 0.0038, 0.0049, 0.0046, 0.0026, 0.0045, 0.0038,

0.003 , 0.0045, 0.0034, 0.0048, 0.004 , 0.0049, 0.0027,

0.0031, 0.0041, 0.0045, 0.0039, 0.0024, 0.0037, 0.0043,

0.0037, 0.0042, 0.0051, 0.0027, 0.004 , 0.0037, 0.0047,

0.0036, 0.0053, 0.0046, 0.0046, 0.0039, 0.0033, 0.004 ,

0.0038, 0.0045, 0.0034, 0.0044, 0.0051, 0.004 , 0.0041,

0.0049, 0.004 , 0.0042, 0.0042, 0.0045, 0.004 , 0.0038,

0.0043, 0.0043]])

Dimensions without coordinates: order, rep# Make predictions

boot_xem.predict(betas[:4], order=2)

<xarray.DataArray (beta: 4, rep: 100)> Size: 3kB

array([[0.4493, 0.4352, 0.4688, 0.4502, 0.4498, 0.4348, 0.4462, 0.479 ,

0.4265, 0.439 , 0.432 , 0.454 , 0.4629, 0.449 , 0.4553, 0.4682,

0.4535, 0.4369, 0.436 , 0.4376, 0.459 , 0.4509, 0.4312, 0.4752,

0.4438, 0.4473, 0.4336, 0.4542, 0.4375, 0.4569, 0.4828, 0.4449,

0.4927, 0.442 , 0.4728, 0.4487, 0.447 , 0.4724, 0.4741, 0.4612,

0.4509, 0.4549, 0.4543, 0.4262, 0.4525, 0.4733, 0.4642, 0.4617,

0.4421, 0.4574, 0.4469, 0.4785, 0.4709, 0.4086, 0.4672, 0.4435,

0.4214, 0.4644, 0.434 , 0.4761, 0.4524, 0.4804, 0.4124, 0.4243,

0.4547, 0.4657, 0.4483, 0.4023, 0.4426, 0.4629, 0.4414, 0.4581,

0.4833, 0.4119, 0.4506, 0.4435, 0.4723, 0.4388, 0.4927, 0.4696,

0.468 , 0.4471, 0.4289, 0.4512, 0.4441, 0.4671, 0.4355, 0.4654,

0.4848, 0.4518, 0.4542, 0.4766, 0.4504, 0.4585, 0.4571, 0.4674,

0.4505, 0.4427, 0.4601, 0.4613],

[0.4145, 0.4029, 0.4307, 0.4152, 0.4149, 0.4025, 0.412 , 0.439 ,

0.3956, 0.4059, 0.4001, 0.4183, 0.4257, 0.4142, 0.4196, 0.4301,

0.4179, 0.4044, 0.4036, 0.4049, 0.4224, 0.4158, 0.3995, 0.4359,

0.4099, 0.4129, 0.4014, 0.4186, 0.4047, 0.4207, 0.4422, 0.4109,

0.4504, 0.4085, 0.4338, 0.414 , 0.4126, 0.4334, 0.435 , 0.4244,

0.4159, 0.4192, 0.4187, 0.3955, 0.4171, 0.4343, 0.4269, 0.4247,

0.4086, 0.4213, 0.4125, 0.4386, 0.4324, 0.3809, 0.4293, 0.4097,

...

0.377 , 0.3872, 0.3801, 0.4012, 0.3962, 0.3545, 0.3937, 0.3778,

0.363 , 0.3917, 0.3715, 0.3997, 0.3839, 0.4026, 0.3569, 0.3649,

0.3854, 0.3927, 0.381 , 0.3501, 0.3771, 0.391 , 0.3764, 0.3877,

0.4044, 0.3567, 0.3826, 0.3779, 0.397 , 0.3747, 0.4108, 0.3952,

0.3941, 0.3801, 0.3678, 0.3829, 0.3782, 0.3937, 0.3727, 0.3926,

0.4055, 0.3835, 0.3851, 0.3999, 0.3823, 0.3878, 0.3869, 0.3938,

0.3825, 0.377 , 0.3889, 0.3897],

[0.3509, 0.3433, 0.3614, 0.3513, 0.3511, 0.3431, 0.3493, 0.3665,

0.3386, 0.3452, 0.3416, 0.353 , 0.3578, 0.3505, 0.3542, 0.3607,

0.3529, 0.3445, 0.3439, 0.3448, 0.3558, 0.3516, 0.3412, 0.3644,

0.3479, 0.3499, 0.3423, 0.3534, 0.3445, 0.3547, 0.3686, 0.3487,

0.3738, 0.3469, 0.3629, 0.3505, 0.3495, 0.3627, 0.3639, 0.3572,

0.3518, 0.354 , 0.3536, 0.3387, 0.3523, 0.3634, 0.3589, 0.3572,

0.3472, 0.3552, 0.3496, 0.3662, 0.3623, 0.3293, 0.3604, 0.3477,

0.3361, 0.3587, 0.3428, 0.3651, 0.3527, 0.3675, 0.3312, 0.3375,

0.3539, 0.3596, 0.3502, 0.3258, 0.3471, 0.3583, 0.3467, 0.3557,

0.3687, 0.3311, 0.3516, 0.3479, 0.3628, 0.3453, 0.3739, 0.3615,

0.3606, 0.3495, 0.3397, 0.3518, 0.3481, 0.3604, 0.3438, 0.3595,

0.3697, 0.3524, 0.3536, 0.3652, 0.3512, 0.3557, 0.355 , 0.3604,

0.3515, 0.347 , 0.3565, 0.3571]])

Coordinates:

* beta (beta) float64 32B 0.1 0.6 1.1 1.6

dalpha (beta) float64 32B -5.5 -5.0 -4.5 -4.0

beta0 float64 8B 5.6

Dimensions without coordinates: rep# Make predictions and compute statistics over bootstrapped resamples

# This should closely match the predictions without bootstrapping!

boot_xem.predict(betas[:4], order=2).mean("rep")

<xarray.DataArray (beta: 4)> Size: 32B

array([0.4527, 0.4173, 0.384 , 0.3526])

Coordinates:

* beta (beta) float64 32B 0.1 0.6 1.1 1.6

dalpha (beta) float64 32B -5.5 -5.0 -4.5 -4.0

beta0 float64 8B 5.6# And standard deviations

# And remember, you can do the same thing with coefficients (which includes the bootstrapped data itself)

boot_xem.predict(betas[:4], order=2).std("rep")

<xarray.DataArray (beta: 4)> Size: 32B

array([0.0178, 0.0147, 0.0119, 0.0094])

Coordinates:

* beta (beta) float64 32B 0.1 0.6 1.1 1.6

dalpha (beta) float64 32B -5.5 -5.0 -4.5 -4.0

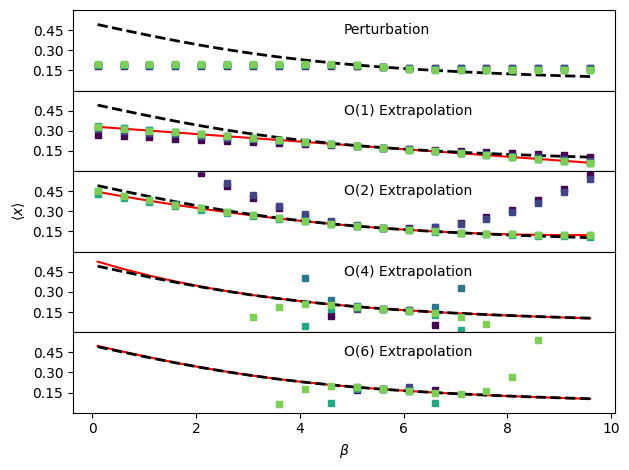

beta0 float64 8B 5.6To demonstrate how easy it is to try extrapolations at different orders and compare to our analytical test system, we will loop over different numbers of samples (in sets randomly drawn from the data we generated earlier) and extrapolation orders and compare the performance of extrapolation and perturbation to the analytical result.

fig, ax = plt.subplots(len(orders) + 1, sharex=True, sharey=True)

nsampvals = np.array((10.0 * np.ones(5)) ** np.arange(1, 6), dtype=int)

nsampcolors = plt.cm.viridis(np.arange(0.0, 1.0, float(1.0 / len(nsampvals))))

# First plot the analytical result

for a in ax:

a.plot(betas, idealgas.x_ave(betas, vol), "k--", linewidth=2.0)

# Next look at extrapolation with an infinite number of samples

# This is possible in the ideal gas model in both temperature and volume

for j, o in enumerate(orders):

trueExtrap, trueDerivs = idealgas.x_beta_extrap(o, beta_ref, betas, vol)

ax[j + 1].plot(betas, trueExtrap, "r-", zorder=0)

if j == len(orders) - 1:

print(f"True extrapolation coefficients: {trueDerivs}")

for i, n in enumerate(nsampvals):

thisinds = rng.choice(len(xdata), size=n, replace=False)

# Get parameters for extrapolation model with this data by training it - the parameters are the derivatives

xem = xtrap.beta.factory_extrapmodel(

beta=beta_ref,

data=xtrap.DataCentralMomentsVals.from_vals(

uv=udata[thisinds], xv=xdata[thisinds], central=True, order=order

),

)

out = xem.predict(betas, cumsum=True)

print(

f"\t With N_configs = {n:6}: {xem.derivs(norm=False).values}"

) # Have to flatten because observable is 1-D

for j, o in enumerate(orders):

out.sel(order=o).plot(

ax=ax[j + 1],

marker="s",

ls="None",

markersize=4,

color=nsampcolors[i],

label=f"N={n}",

)

# And do the same thing for perturbation, but here the parameters are just the data

xpm = xtrap.beta.factory_perturbmodel(beta_ref, udata[thisinds], xdata[thisinds])

xpm.predict(betas).plot(ax=ax[0], marker="s", ls="None", ms=4, color=nsampcolors[i])

ax[2].set_ylabel(r"$\langle x \rangle$")

ax[-1].set_xlabel(r"$\beta$")

ax[0].annotate("Perturbation", xy=(0.5, 0.7), xycoords="axes fraction")

for j, o in enumerate(orders):

ax[j + 1].annotate(f"O({o}) Extrapolation", xy=(0.5, 0.7), xycoords="axes fraction")

ax[-1].set_ylim((0.0, 0.6))

ax[-1].yaxis.set_major_locator(mpl.ticker.MaxNLocator(nbins=4, prune="both"))

fig.tight_layout()

fig.subplots_adjust(hspace=0.0)

for a in ax:

a.set_title("")

plt.show()

True extrapolation coefficients: [ 0.1749 -0.0282 0.0076 -0.0023 0.0004 0.0003 -0.0004]

With N_configs = 10: [ 1.7514e-01 -1.6838e-02 5.7698e-02 -9.1330e-02 -2.8572e+00 5.4054e+01

-2.4043e+02]

With N_configs = 100: [ 1.7533e-01 -2.4669e-02 5.8247e-02 1.8898e-01 -6.3072e+00 3.1714e+01

1.0259e+03]

With N_configs = 1000: [ 1.7476e-01 -2.8602e-02 8.0187e-03 7.5768e-03 8.5724e-01 2.5574e+01

-3.4636e+02]

With N_configs = 10000: [ 1.7487e-01 -2.8687e-02 6.2837e-03 4.7795e-02 -7.1003e-01 2.6718e+00

-5.6026e+01]

With N_configs = 100000: [ 0.1748 -0.0282 0.0078 -0.0049 -0.1045 0.4246 -0.0662]

In each of the figures, the true behavior is shown as a black dashed line, the analytical result (infinite sampling) for each order of extrapolation is shown as a red solid line and the results with 10, 100, 1000, … 100000 randomly sampled configurations are shown with purple (fewer samples) to green (more samples) circles. Perturbation works close to the reference temperature, but saturates far away. With more samples, it works further out, but the increases in accuracy are marginal. First order extrapolation quickly converges to the infinite sampling limit while second order takes more sampling to do so. The actual coefficients at each order of extrapolation are printed below. Since perturbation essentially refuses to provide an estimate past a certain distance in \(\beta\), it is not reliable some distance out. Both first and second order extrapolation essentially get lucky here and remain highly accurate over very large temperature ranges. For higher order extrapolation, the analytical, infinite sampling result matches very closely with the true temperature dependence of \(\langle x \rangle\). However, the finite-sampling results are in practice very poor due to difficulties in accurately estimating the higher-order moments of the potential energy distribution. The higher orders are actually quite accurate (if you try zooming in) close to the point we’re extrapolating from, but the error grows very quickly as we move further away.