Property regression example#

low level interface#

To show how the components of NFFLr work together, let’s train a formation energy model using the dft_3d dataset.

We can use the periodic_radius_graph transform to configure the AtomsDataset to automatically transform atomic configurations into DGLGraphs.

import nfflr

transform = nfflr.nn.PeriodicRadiusGraph(cutoff=5.0)

dataset = nfflr.AtomsDataset(

"dft_3d",

target="formation_energy_peratom",

transform=transform,

)

dataset[0]

/opt/hostedtoolcache/Python/3.10.13/x64/lib/python3.10/site-packages/tqdm/auto.py:21: TqdmWarning: IProgress not found. Please update jupyter and ipywidgets. See https://ipywidgets.readthedocs.io/en/stable/user_install.html

from .autonotebook import tqdm as notebook_tqdm

2024-03-08 20:07:11,073 INFO util.py:154 -- Missing packages: ['ipywidgets']. Run `pip install -U ipywidgets`, then restart the notebook server for rich notebook output.

2024-03-08 20:07:11,224 INFO util.py:154 -- Missing packages: ['ipywidgets']. Run `pip install -U ipywidgets`, then restart the notebook server for rich notebook output.

dataset_name='dft_3d'

Obtaining 3D dataset 76k ...

Reference:https://www.nature.com/articles/s41524-020-00440-1

Other versions:https://doi.org/10.6084/m9.figshare.6815699

0%| | 0.00/40.8M [00:00<?, ?iB/s]

0%| | 52.2k/40.8M [00:00<01:45, 385kiB/s]

1%| | 209k/40.8M [00:00<00:49, 818kiB/s]

2%|▏ | 940k/40.8M [00:00<00:14, 2.83MiB/s]

9%|▉ | 3.81M/40.8M [00:00<00:03, 9.76MiB/s]

20%|██ | 8.37M/40.8M [00:00<00:01, 17.9MiB/s]

33%|███▎ | 13.7M/40.8M [00:00<00:00, 27.3MiB/s]

41%|████ | 16.6M/40.8M [00:00<00:00, 27.1MiB/s]

54%|█████▍ | 22.1M/40.8M [00:01<00:00, 33.1MiB/s]

64%|██████▎ | 26.0M/40.8M [00:01<00:00, 34.6MiB/s]

74%|███████▍ | 30.3M/40.8M [00:01<00:00, 35.8MiB/s]

84%|████████▍ | 34.4M/40.8M [00:01<00:00, 37.3MiB/s]

95%|█████████▌| 38.8M/40.8M [00:01<00:00, 38.0MiB/s]

100%|██████████| 40.8M/40.8M [00:01<00:00, 27.3MiB/s]

Loading the zipfile...

Loading completed.

(Graph(num_nodes=8, num_edges=288,

ndata_schemes={'coord': Scheme(shape=(3,), dtype=torch.float32), 'atomic_number': Scheme(shape=(), dtype=torch.int32)}

edata_schemes={'r': Scheme(shape=(3,), dtype=torch.float32)}),

tensor(-0.4276))

AtomsDataset can also load structures from the directory format that ALIGNN uses; the directory should contain a collection of POSCAR, CIF, or XYZ files and a mapping from file names to prediction targets in the file id_prop.csv.

For example, loading the small set of POSCAR files distributed with nfflr (and alignn):

import inspect

from pathlib import Path

nfflr_root = Path(inspect.getfile(nfflr)).parent

dataset = nfflr.AtomsDataset(

nfflr_root / "examples/sample_data",

target="target",

transform=transform,

)

dataset[0]

dataset_name=PosixPath('/home/runner/work/nfflr/nfflr/nfflr/examples/sample_data')

(Graph(num_nodes=8, num_edges=288,

ndata_schemes={'coord': Scheme(shape=(3,), dtype=torch.float32), 'atomic_number': Scheme(shape=(), dtype=torch.int32)}

edata_schemes={'r': Scheme(shape=(3,), dtype=torch.float32)}),

tensor(0.))

Set up a medium-sized ALIGNN model:

cfg = nfflr.models.ALIGNNConfig(

transform=transform,

alignn_layers=2,

gcn_layers=2,

norm="layernorm",

atom_features="embedding"

)

model = nfflr.models.ALIGNN(cfg)

atoms, target = dataset[0]

model(atoms)

tensor(0.2389, grad_fn=<SqueezeBackward0>)

AtomsDataset is meant to work nicely with standard pytorch DataLoaders.

Because of the rich structure of the common input formats for atomistic ML, often a custom collation function is needed to properly auto-batch samples - AtomsDataset tries to automatically select an appropriate collate_fn for tasks that it knows about, which can be accessed by AtomsDataset.collate.

import numpy as np

import torch

from torch import nn

from torch.utils.data import DataLoader, SubsetRandomSampler

batchsize = 2

train_loader = DataLoader(

dataset,

batch_size=batchsize,

collate_fn=dataset.collate,

sampler=SubsetRandomSampler(dataset.split["train"]),

drop_last=True

)

next(iter(train_loader))

(Graph(num_nodes=100, num_edges=2876,

ndata_schemes={'coord': Scheme(shape=(3,), dtype=torch.float32), 'atomic_number': Scheme(shape=(), dtype=torch.int32)}

edata_schemes={'r': Scheme(shape=(3,), dtype=torch.float32)}),

tensor([0.9240, 4.0720]))

Now we can set up a PyTorch optimizer and objective function and optimize the model parameters with an explicit training loop. See the [PyTorch quickstart tutorial for more context)[https://pytorch.org/tutorials/beginner/basics/quickstart_tutorial.html].

from tqdm import tqdm

criterion = nn.MSELoss()

optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3, weight_decay=0.1)

training_loss = []

for epoch in range(5):

for step, (g, y) in enumerate(tqdm(train_loader)):

pred = model(g)

loss = criterion(pred, y)

loss.backward()

optimizer.step()

optimizer.zero_grad()

training_loss.append(loss.item())

0%| | 0/19 [00:00<?, ?it/s]

11%|█ | 2/19 [00:00<00:01, 16.69it/s]

21%|██ | 4/19 [00:01<00:08, 1.85it/s]

26%|██▋ | 5/19 [00:02<00:07, 1.81it/s]

32%|███▏ | 6/19 [00:02<00:05, 2.23it/s]

37%|███▋ | 7/19 [00:03<00:05, 2.38it/s]

42%|████▏ | 8/19 [00:03<00:03, 2.91it/s]

47%|████▋ | 9/19 [00:03<00:03, 3.05it/s]

53%|█████▎ | 10/19 [00:04<00:04, 2.05it/s]

58%|█████▊ | 11/19 [00:04<00:03, 2.27it/s]

63%|██████▎ | 12/19 [00:04<00:02, 2.61it/s]

68%|██████▊ | 13/19 [00:05<00:02, 2.75it/s]

74%|███████▎ | 14/19 [00:06<00:02, 1.78it/s]

79%|███████▉ | 15/19 [00:06<00:01, 2.23it/s]

84%|████████▍ | 16/19 [00:06<00:01, 2.81it/s]

89%|████████▉ | 17/19 [00:06<00:00, 3.31it/s]

95%|█████████▍| 18/19 [00:07<00:00, 3.48it/s]

100%|██████████| 19/19 [00:07<00:00, 2.69it/s]

0%| | 0/19 [00:00<?, ?it/s]

5%|▌ | 1/19 [00:00<00:06, 2.76it/s]

11%|█ | 2/19 [00:00<00:03, 4.49it/s]

16%|█▌ | 3/19 [00:00<00:04, 3.52it/s]

21%|██ | 4/19 [00:00<00:03, 4.48it/s]

26%|██▋ | 5/19 [00:01<00:02, 4.73it/s]

32%|███▏ | 6/19 [00:01<00:03, 3.26it/s]

37%|███▋ | 7/19 [00:02<00:06, 1.99it/s]

42%|████▏ | 8/19 [00:03<00:06, 1.83it/s]

53%|█████▎ | 10/19 [00:04<00:04, 1.81it/s]

58%|█████▊ | 11/19 [00:04<00:03, 2.13it/s]

63%|██████▎ | 12/19 [00:04<00:02, 2.62it/s]

68%|██████▊ | 13/19 [00:04<00:02, 2.76it/s]

74%|███████▎ | 14/19 [00:05<00:01, 2.71it/s]

79%|███████▉ | 15/19 [00:05<00:01, 3.17it/s]

84%|████████▍ | 16/19 [00:05<00:00, 3.53it/s]

89%|████████▉ | 17/19 [00:05<00:00, 3.89it/s]

95%|█████████▍| 18/19 [00:06<00:00, 2.25it/s]

100%|██████████| 19/19 [00:07<00:00, 2.42it/s]

100%|██████████| 19/19 [00:07<00:00, 2.64it/s]

0%| | 0/19 [00:00<?, ?it/s]

5%|▌ | 1/19 [00:00<00:10, 1.77it/s]

11%|█ | 2/19 [00:00<00:05, 2.86it/s]

16%|█▌ | 3/19 [00:01<00:05, 3.06it/s]

21%|██ | 4/19 [00:01<00:03, 3.83it/s]

26%|██▋ | 5/19 [00:01<00:02, 4.82it/s]

32%|███▏ | 6/19 [00:01<00:04, 3.05it/s]

42%|████▏ | 8/19 [00:02<00:02, 4.75it/s]

47%|████▋ | 9/19 [00:02<00:01, 5.17it/s]

53%|█████▎ | 10/19 [00:03<00:03, 2.32it/s]

58%|█████▊ | 11/19 [00:03<00:02, 2.72it/s]

63%|██████▎ | 12/19 [00:03<00:02, 3.04it/s]

68%|██████▊ | 13/19 [00:04<00:02, 2.01it/s]

74%|███████▎ | 14/19 [00:04<00:01, 2.60it/s]

84%|████████▍ | 16/19 [00:05<00:00, 3.38it/s]

89%|████████▉ | 17/19 [00:05<00:00, 2.41it/s]

95%|█████████▍| 18/19 [00:06<00:00, 1.95it/s]

100%|██████████| 19/19 [00:07<00:00, 1.94it/s]

100%|██████████| 19/19 [00:07<00:00, 2.63it/s]

0%| | 0/19 [00:00<?, ?it/s]

5%|▌ | 1/19 [00:00<00:02, 7.07it/s]

11%|█ | 2/19 [00:00<00:03, 5.65it/s]

16%|█▌ | 3/19 [00:01<00:09, 1.74it/s]

26%|██▋ | 5/19 [00:01<00:04, 2.96it/s]

32%|███▏ | 6/19 [00:02<00:04, 3.05it/s]

37%|███▋ | 7/19 [00:02<00:03, 3.73it/s]

42%|████▏ | 8/19 [00:02<00:03, 3.18it/s]

47%|████▋ | 9/19 [00:02<00:02, 3.54it/s]

58%|█████▊ | 11/19 [00:03<00:02, 3.76it/s]

63%|██████▎ | 12/19 [00:03<00:01, 3.62it/s]

68%|██████▊ | 13/19 [00:03<00:01, 4.31it/s]

74%|███████▎ | 14/19 [00:04<00:01, 3.15it/s]

79%|███████▉ | 15/19 [00:04<00:01, 2.39it/s]

84%|████████▍ | 16/19 [00:05<00:01, 1.82it/s]

89%|████████▉ | 17/19 [00:06<00:01, 1.68it/s]

95%|█████████▍| 18/19 [00:06<00:00, 1.94it/s]

100%|██████████| 19/19 [00:07<00:00, 2.30it/s]

100%|██████████| 19/19 [00:07<00:00, 2.70it/s]

0%| | 0/19 [00:00<?, ?it/s]

5%|▌ | 1/19 [00:00<00:13, 1.30it/s]

11%|█ | 2/19 [00:01<00:12, 1.37it/s]

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

Cell In[5], line 10

8 pred = model(g)

9 loss = criterion(pred, y)

---> 10 loss.backward()

11 optimizer.step()

12 optimizer.zero_grad()

File /opt/hostedtoolcache/Python/3.10.13/x64/lib/python3.10/site-packages/torch/_tensor.py:522, in Tensor.backward(self, gradient, retain_graph, create_graph, inputs)

512 if has_torch_function_unary(self):

513 return handle_torch_function(

514 Tensor.backward,

515 (self,),

(...)

520 inputs=inputs,

521 )

--> 522 torch.autograd.backward(

523 self, gradient, retain_graph, create_graph, inputs=inputs

524 )

File /opt/hostedtoolcache/Python/3.10.13/x64/lib/python3.10/site-packages/torch/autograd/__init__.py:266, in backward(tensors, grad_tensors, retain_graph, create_graph, grad_variables, inputs)

261 retain_graph = create_graph

263 # The reason we repeat the same comment below is that

264 # some Python versions print out the first line of a multi-line function

265 # calls in the traceback and some print out the last line

--> 266 Variable._execution_engine.run_backward( # Calls into the C++ engine to run the backward pass

267 tensors,

268 grad_tensors_,

269 retain_graph,

270 create_graph,

271 inputs,

272 allow_unreachable=True,

273 accumulate_grad=True,

274 )

File /opt/hostedtoolcache/Python/3.10.13/x64/lib/python3.10/site-packages/torch/autograd/function.py:277, in BackwardCFunction.apply(self, *args)

276 class BackwardCFunction(_C._FunctionBase, FunctionCtx, _HookMixin):

--> 277 def apply(self, *args):

278 # _forward_cls is defined by derived class

279 # The user should define either backward or vjp but never both.

280 backward_fn = self._forward_cls.backward # type: ignore[attr-defined]

281 vjp_fn = self._forward_cls.vjp # type: ignore[attr-defined]

KeyboardInterrupt:

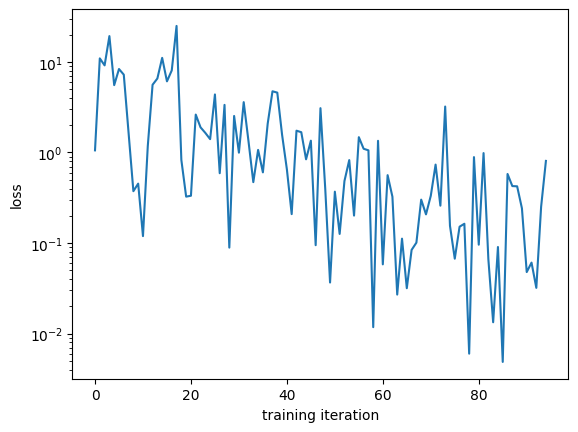

import matplotlib.pyplot as plt

%matplotlib inline

plt.plot(training_loss)

plt.xlabel("training iteration")

plt.ylabel("loss");

plt.semilogy();

using the ignite-based NFFLr trainer#

import tempfile

from nfflr import train

rank = 0

training_config = {

"dataset": dataset,

"model": model,

"optimizer": optimizer,

"criterion": criterion,

"random_seed": 42,

"batch_size": 2,

"learning_rate": 1e-3,

"weight_decay": 0.1,

"epochs": 5,

"num_workers": 0,

"progress": True,

"output_dir": tempfile.TemporaryDirectory().name

}

train.run_train(rank, training_config)

2024-01-24 14:46:26,979 ignite.distributed.auto.auto_dataloader INFO: Use data loader kwargs for dataset '<nfflr.data.dataset.':

{'collate_fn': <function AtomsDataset.collate_default at 0x29a06a560>, 'batch_size': 2, 'sampler': <torch.utils.data.sampler.SubsetRandomSampler object at 0x107b71510>, 'drop_last': True, 'num_workers': 0, 'pin_memory': False}

2024-01-24 14:46:26,979 ignite.distributed.auto.auto_dataloader INFO: Use data loader kwargs for dataset '<nfflr.data.dataset.':

{'collate_fn': <function AtomsDataset.collate_default at 0x29a06a560>, 'batch_size': 2, 'sampler': <torch.utils.data.sampler.SubsetRandomSampler object at 0x30ec1da80>, 'drop_last': True, 'num_workers': 0, 'pin_memory': False}

/Users/bld/.pyenv/versions/3.10.9/envs/nfflr/lib/python3.10/site-packages/dgl/backend/pytorch/tensor.py:445: UserWarning: TypedStorage is deprecated. It will be removed in the future and UntypedStorage will be the only storage class. This should only matter to you if you are using storages directly. To access UntypedStorage directly, use tensor.untyped_storage() instead of tensor.storage()

assert input.numel() == input.storage().size(), (

starting training loop

train results - Epoch: 1 Avg loss: 0.01

val results - Epoch: 1 Avg loss: 3.60

train results - Epoch: 2 Avg loss: 0.45

val results - Epoch: 2 Avg loss: 4.95

train results - Epoch: 3 Avg loss: 0.05

val results - Epoch: 3 Avg loss: 1.20

train results - Epoch: 4 Avg loss: 0.02

val results - Epoch: 4 Avg loss: 3.59

train results - Epoch: 5 Avg loss: 0.00

val results - Epoch: 5 Avg loss: 3.85

3.854463577270508