Tue, 26 Aug 2025 08:51:12 -0500

ABSTRACT

This guideline focuses on the authentication of subjects who interact with government information systems over networks to establish that a given claimant is a subscriber who has been previously authenticated. The result of the authentication process may be used locally by the system performing the authentication or asserted elsewhere in a federated identity system. This document defines technical requirements for each of the three authentication assurance levels. The guidelines are not intended to constrain the development or use of standards outside of this purpose. This publication supersedes NIST Special Publication (SP) 800-63B.

Keywords

authentication; authentication assurance; credential service provider; digital authentication; passwords.

Preface

This publication and its companion volumes — [SP800-63], [SP800-63A], and [SP800-63C] — provide technical guidelines for organizations to implement digital identity services.

This document, SP 800-63B, provides requirements to credential service providers (CSPs) for remote user authentication at each of three authentication assurance levels (AALs).

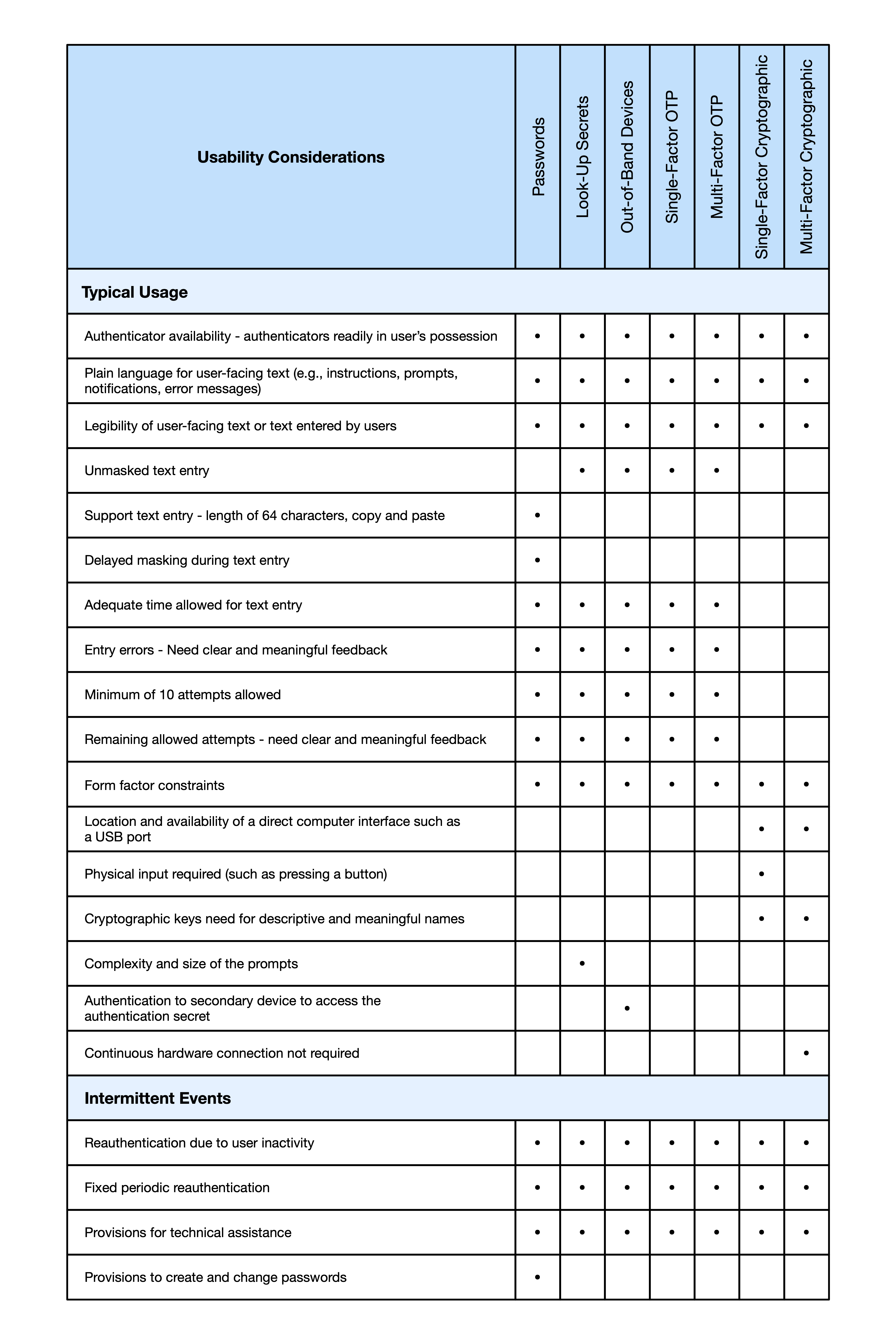

Introduction

This section is informative.

Authentication is the process of determining the validity of one or more authenticators used to claim a digital identity by establishing that a subject attempting to access a digital service is in control of the secrets used to authenticate. If return visits are applicable to a service, successful authentication provides reasonable risk-based assurance that the subject accessing the service today is the same as the one who previously accessed the service. One-time services (i.e., the subscriber will only ever access the service once) do not necessarily require persistent digital authentication nor the issuance of authenticators.

The authentication of claimants is central to the process of associating a subscriber with their online activity as recorded in their subscriber account, which is maintained by a credential service provider (CSP). Authentication is performed by verifying that the claimant controls one or more authenticators (called tokens in some earlier editions of SP 800-63) associated with a given subscriber account. The authentication process is conducted by a verifier, which is a role of the CSP or — in federated authentication — of an identity provider (IdP). Upon successful authentication, the verifier asserts the identifier for the subscriber to the relying party (RP). Optionally, the verifier may assert additional attributes to the RP.

This guideline provides recommendations on types of authentication processes, including choices of authenticators, that may be used at various authentication assurance levels (AALs). It also provides recommendations on events that may occur during the lifetime of authenticators, including initial issuance, maintenance, and invalidation in the event of loss or theft of the authenticator.

This guideline applies to the digital authentication of subjects to systems over a network. It also requires that verifiers and RPs participating in authentication protocols be authenticated to claimants to assure the identity of the services with which they are authenticating. It does not address the authentication of a person for physical access (e.g., to a building).

This guideline recognizes that subscribers are responsible for protecting their authentication secrets and not disclosing them to others (e.g., credential sharing). The protections at the various AALs are intended to protect against credential theft and are not intended to protect against willful disclosure of credential secrets by a subscriber. In most cases, there are very few technical controls that can detect and prevent such willful collusion and sharing.

AALs categorize the strength of an authentication transaction. Stronger authentication (i.e., a higher AAL) requires malicious actors to have better capabilities and to expend greater resources to successfully subvert the authentication process. Authentication at higher AALs can effectively reduce the risk of attacks. A high-level summary of the technical requirements for each of the AALs is provided below; see Sec. 2 and Sec. 3 of this document for specific normative requirements.

Authentication Assurance Level 1: AAL1 provides basic confidence that the claimant controls an authenticator bound to the subscriber account being authenticated. AAL1 requires only single-factor authentication using a wide range of available authentication technologies. However, it is recommended that applications assessed at AAL1 offer multi-factor authentication options. Successful authentication requires the claimant to prove possession and control of the authenticator.

Authentication Assurance Level 2: AAL2 provides high confidence that the claimant controls one or more authenticators bound to the subscriber account being authenticated. Proof of the possession and control of two distinct authentication factors is required. Applications assessed at AAL2 must offer a phishing-resistant authentication (see Sec. 3.2.5) option.

Authentication Assurance Level 3: AAL3 provides very high confidence that the claimant controls one or more authenticators bound to the subscriber account being authenticated. Authentication at AAL3 is based on the proof of possession of a key through the use of a public-key cryptographic protocol. AAL3 authentication requires a phishing-resistant authenticator (see Sec. 3.2.5) with a non-exportable authentication key (see Sec. 3.2.13). To authenticate at AAL3, claimants are required to prove possession and control of two distinct authentication factors.

When a session has been authenticated at a given AAL and a higher AAL is required, an authentication process may also provide step-up authentication to raise the session’s AAL.

Notations

This guideline uses the following typographical conventions in text:

- Specific terms in CAPITALS represent normative requirements. When these same terms are not in CAPITALS, the term does not represent a normative requirement.

- The terms “SHALL” and “SHALL NOT” indicate requirements to be followed strictly in order to conform to the publication and from which no deviation is permitted.

- The terms “SHOULD” and “SHOULD NOT” indicate that among several possibilities, one is recommended as particularly suitable without mentioning or excluding others, that a certain course of action is preferred but not necessarily required, or that (in the negative form) a certain possibility or course of action is discouraged but not prohibited.

- The terms “MAY” and “NEED NOT” indicate a course of action permissible within the limits of the publication.

- The terms “CAN” and “CANNOT” indicate a possibility and capability — whether material, physical, or causal — or, in the negative, the absence of that possibility or capability.

Document Structure

This document is organized as follows. Each section is labeled as either normative (i.e., mandatory for compliance) or informative (i.e., not mandatory).

- Section 1 introduces the document. This section is informative.

- Section 2 describes requirements for AALs. This section is normative.

- Section 3 describes authenticator and verifier requirements. This section is normative.

- Section 4 describes requirements for authenticator event management. This section is normative.

- Section 5 describes requirements for session management. This section is normative.

- Section 6 provides security considerations. This section is informative.

- Section 7 provides privacy considerations. This section is informative.

- Section 8 provides customer experience considerations. This section is informative.

- The References section lists publications that are cited in this document. This section is informative.

- Appendix A discusses the strength of passwords. This appendix is informative.

- Appendix B discusses syncable authenticators. This appendix is normative.

- Appendix C contains a selected list of abbreviations used in this document. This appendix is informative.

- Appendix D contains a glossary of selected terms used in this document. This appendix is informative.

- Appendix E contains a summarized list of changes in this document’s history. This appendix is informative.

Authentication Assurance Levels

This section is normative.

To satisfy the requirements of a given AAL and be recognized as a subscriber, a claimant SHALL authenticate to an RP (or IdP, as described in [SP800-63C]) with a process whose strength is equal to or greater than the requirements at that level. The authentication process results in an identifier that uniquely identifies the subscriber each time they authenticate to that RP. The identifier MAY be pseudonymous. Other attributes that identify the subscriber as a unique subject MAY also be provided. Detailed normative requirements for authenticators and verifiers at each AAL are provided in Sec. 3. See [SP800-63] Sec. 3 for details on how to choose the most appropriate AAL.

Personal information collected during and after identity proofing (see [SP800-63A]) MAY be made available to the subscriber by the digital identity service through the subscriber account. The release or online availability of any personal information by federal agencies requires multi-factor authentication in accordance with [EO13681]. Therefore, federal agencies SHALL select a minimum of AAL2 when personal information is made available online.

At all AALs, indicators of potential fraud, including applicable indicators described in Sec. 5.3, MAY be used to lower the risk of misauthentication. For example, authentication from an unexpected geolocation or IP address block (e.g., a cloud service) might prompt the use of additional risk-based controls. CSPs or verifiers SHALL assess their use of indicators of potential fraud for efficacy and to identify and mitigate potential negative impacts on their user populations. CSPs or verifiers SHALL include fraud indicators in the authentication privacy risk assessment. The use of potential fraud indicators prior to or during the authentication process does not impact or change the AAL of a transaction or substitute for an authentication factor.

Throughout this document, [FIPS140] requirements are satisfied by security technologies, products, and services that utilize implementations of cryptography validated by the Cryptographic Module Validation Program [CMVP]. FIPS 140 requirements at a given AAL are often different for authenticators and verifiers, with more stringent requirements generally applying to verifiers. This is in recognition of the practical limitations on the certification of authenticators as well as the broader scope that is often associated with a security breach at a verifier.

Authentication Assurance Level 1

AAL1 provides basic confidence that the claimant controls an authenticator that is bound to the subscriber account. AAL1 requires either single-factor or multi-factor authentication using a wide range of available authentication technologies. Verifiers SHOULD make multi-factor authentication options available at AAL1 and encourage their use. Successful authentication requires that the claimant prove possession and control of the authenticator through a secure authentication protocol.

Permitted Authenticator Types

AAL1 authentication SHALL use any of the following authenticator types, which are further defined in Sec. 3:

- Password (Sec. 3.1.1): A memorizable secret typically chosen by the subscriber

- Look-up secret (Sec. 3.1.2): A secret determined by the claimant by looking up a prompted value in a list held by the subscriber

- Out-of-band device (Sec. 3.1.3): A secret sent or received through a separate communication channel with the subscriber

- Single-factor one-time password (OTP) (Sec. 3.1.4): A one-time secret obtained from a device or application held by the subscriber

- Multi-factor OTP (Sec. 3.1.5): A one-time secret obtained from a device or application held by the subscriber that requires activation by a second authentication factor

- Single-factor cryptographic authentication (Sec. 3.1.6): Proof of possession and control via an authentication protocol of a cryptographic key held by the subscriber

- Multi-factor cryptographic authentication (Sec. 3.1.7): Proof of possession and control via an authentication protocol of a cryptographic key held by the subscriber that requires activation by a second authentication factor

Authenticator and Verifier Requirements

Authenticators used at AAL1 SHALL use approved cryptography. In other words, they must use approved algorithms, but the implementation need not be validated under [FIPS140].

Communication between the claimant and verifier SHALL occur via one or more authenticated protected channels.

Cryptography used by verifiers operated by or on behalf of federal agencies at AAL1 SHALL be validated to meet the requirements of [FIPS140] Level 1.

Reauthentication

These guidelines provide for two types of timeouts, which are further described in Sec. 5.2:

- An overall timeout limits the duration of an authenticated session to a specified period following authentication or a previous reauthentication.

- An inactivity timeout terminates a session that has not had activity from the subscriber for a specified period.

Periodic reauthentication of subscriber sessions SHALL be performed, as described in Sec. 5.2. A definite reauthentication overall timeout SHALL be established, which SHOULD be no more than 30 days at AAL1. An inactivity timeout MAY be applied but is not required at AAL1.

Authentication Assurance Level 2

AAL2 provides high confidence that the claimant controls one or more authenticators that are bound to the subscriber account. Proof of possession and control of two distinct authentication factors through the use of secure authentication protocols is required. Approved cryptographic techniques are required.

Permitted Authenticator Types

At AAL2, authentication SHALL use either a multi-factor authenticator or a combination of two separate authentication factors. A multi-factor authenticator requires two factors to execute a single authentication event, such as a cryptographically secure device with an integrated biometric sensor that is required to activate the device. Authenticator requirements are specified in Sec. 3.

When a multi-factor authenticator is used, any of the following MAY be used:

- Multi-factor out-of-band authenticator (Sec. 3.1.3.4)

- Multi-factor OTP (Sec. 3.1.5)

- Multi-factor cryptographic authentication (Sec. 3.1.7)

When a combination of two single-factor authenticators is used, the combination SHALL include one physical authenticator (i.e., “something you have”) from the following list in conjunction with either a password (Sec. 3.1.1) or a biometric comparison:

- Look-up secret (Sec. 3.1.2)

- Out-of-band device (Sec. 3.1.3)

- Single-factor OTP (Sec. 3.1.4)

- Single-factor cryptographic authentication (Sec. 3.1.6)

A biometric characteristic is not recognized as an authenticator by itself. Section 3.2.3 requires a physical authenticator to be authenticated along with a biometric comparison. The physical authenticator then serves as “something you have,” while the biometric match serves as “something you are.” When a biometric comparison is used as an activation factor for a multi-factor authenticator, the authenticator itself serves as the physical authenticator. As noted in that section, local verification of biometric factors (i.e., the use of a multi-factor authenticator with a biometric comparison as an activation factor) is preferred over central biometric factor comparison.

Authenticator and Verifier Requirements

Authenticators used at AAL2 SHALL use approved cryptography. Cryptographic authenticators procured by federal agencies SHALL be validated to meet the requirements of [FIPS140] Level 1. At least one authenticator used at AAL2 SHALL be replay-resistant, as described in Sec. 3.2.7. Authentication at AAL2 SHOULD demonstrate authentication intent from at least one authenticator, as discussed in Sec. 3.2.8.

Communication between the claimant and verifier SHALL occur via one or more authenticated protected channels.

Cryptography used by verifiers operated by or on behalf of federal agencies at AAL2 SHALL be validated to meet the requirements of [FIPS140] Level 1 unless otherwise specified.

Verifiers SHALL offer at least one phishing-resistant authentication option at AAL2, as described in Sec. 3.2.5. Federal agencies SHALL require their staff, contractors, and partners to use phishing-resistant authentication to access federal information systems. In all cases, verifiers SHOULD encourage the use of phishing-resistant authentication at AAL2 whenever practical since phishing is a significant threat vector [IC3].

Reauthentication

Periodic reauthentication of subscriber sessions SHALL be performed, as described in Sec. 5.2. A definite reauthentication overall timeout SHALL be established, which SHOULD be no more than 24 hours at AAL2. The inactivity timeout SHOULD be no more than 1 hour. When the inactivity timeout has occurred but the overall timeout has not yet occurred, the verifier MAY allow the subscriber to reauthenticate using only a successful password or biometric comparison in conjunction with the session secret, as described in Sec. 5.1.

Authentication Assurance Level 3

AAL3 provides very high confidence that the claimant controls authenticators that are bound to the subscriber account. Authentication at AAL3 is based on the proof of possession of a key through the use of a cryptographic protocol along with either an activation factor or a password. AAL3 authentication requires the use of a cryptographic authenticator with a non-exportable private key that provides phishing resistance. Approved cryptographic techniques are required.

Permitted Authenticator Types

AAL3 authentication SHALL require one of the following authenticator combinations:

- Multi-factor cryptographic authentication (Sec. 3.1.7)

- Single-factor cryptographic authentication (Sec. 3.1.6) used in conjunction with either a password (Sec. 3.1.1) or a biometric comparison

A biometric characteristic is not recognized as an authenticator by itself. Section 3.2.3 requires a physical authenticator to be authenticated along with the biometric comparison. The physical authenticator then serves as “something you have,” while the biometric match serves as “something you are.” When a biometric comparison is used as an activation factor for a multi-factor authenticator, the authenticator itself serves as the physical authenticator. As noted in that section, local verification of biometric factors (i.e., the use of a multi-factor authenticator with a biometric comparison as an activation factor) is preferred over central biometric factor comparison.

Authenticator and Verifier Requirements

Authenticators used at AAL3 SHALL use approved cryptography. Communication between the claimant and verifier SHALL occur via one or more authenticated protected channels. The cryptographic authenticator used at AAL3 SHALL have a non-exportable private key and SHALL provide phishing resistance, as described in Sec. 3.2.5. The cryptographic authentication protocol SHALL be replay-resistant, as described in Sec. 3.2.7. All authentication and reauthentication processes at AAL3 SHALL demonstrate authentication intent from at least one authenticator, as described in Sec. 3.2.8. Cryptographic authenticators used at AAL3 SHALL use public-key cryptography to protect the authentication secrets from compromise of the verifier.

Single-factor and multi-factor authenticators used at AAL3 SHALL be validated to meet the requirements of [FIPS140] Level 1 or higher overall. As described in Sec. 3.2.12, cryptographic authenticators used at AAL3 are required to provide a hardware-protected, isolated environment to prevent authentication keys from being leaked or extracted. Since syncable authenticators (described in Appendix B) require the private key to be exportable, syncable authenticators SHALL NOT be used at AAL3.

Cryptography used by verifiers at AAL3 SHALL be validated at [FIPS140] Level 1 or higher.

Hardware-based authenticators and verifiers at AAL3 SHOULD resist relevant side-channel (e.g., timing and power-consumption analysis) attacks.

Reauthentication

Periodic reauthentication of subscriber sessions SHALL be performed, as described in Sec. 5.2. At AAL3, the overall timeout for reauthentication SHALL be no more than 12 hours. The inactivity timeout SHOULD be no more than 15 minutes. Unlike AAL2, AAL3 reauthentication requirements are the same as for initial authentication at AAL3.
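The overall and inactivity timeouts stated for each AAL in this section can be sketched as a small policy table plus a check. This is a minimal illustration, not a normative implementation: the names `TimeoutPolicy`, `POLICY_BY_AAL`, and `timeout_reached` are hypothetical, the values are the upper bounds quoted above (a deployment may choose shorter ones), and the optional AAL1 inactivity timeout is assumed to be unused.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class TimeoutPolicy:
    overall: timedelta               # maximum session age since (re)authentication
    inactivity: Optional[timedelta]  # None means no inactivity timeout is applied


# Upper bounds quoted in this section; a deployment may choose shorter values.
POLICY_BY_AAL = {
    1: TimeoutPolicy(overall=timedelta(days=30), inactivity=None),
    2: TimeoutPolicy(overall=timedelta(hours=24), inactivity=timedelta(hours=1)),
    3: TimeoutPolicy(overall=timedelta(hours=12), inactivity=timedelta(minutes=15)),
}


def timeout_reached(aal: int, authenticated_at: datetime,
                    last_activity: datetime, now: datetime) -> str:
    """Return "overall", "inactivity", or "none" for a session at the given AAL."""
    policy = POLICY_BY_AAL[aal]
    if now - authenticated_at >= policy.overall:
        return "overall"
    if policy.inactivity is not None and now - last_activity >= policy.inactivity:
        return "inactivity"
    return "none"
```

How a caller reacts to each result depends on the AAL. At AAL2, an "inactivity" result may be resolved with a password or biometric comparison together with the session secret, whereas at AAL3 any reauthentication follows the same requirements as initial authentication.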

General Requirements

The following requirements apply to authentication at all AALs.

Security Controls

The verifier SHALL employ appropriately tailored security controls from the moderate baseline security controls defined in [SP800-53] or an equivalent federal (e.g., [FEDRAMP]) or industry standard that the organization has chosen for the information systems, applications, and online services that these guidelines are used to protect.

Records Retention Policy

The verifier SHALL comply with its respective records retention policies in accordance with applicable laws, regulations, and policies, including any National Archives and Records Administration (NARA) records retention schedules that may apply. If the verifier opts to retain records in the absence of mandatory requirements, the verifier or the CSP or IdP of which it is a part SHALL conduct a risk management process [NISTRMF], including assessments of privacy and security risks, to determine how long records should be retained and SHALL inform the subscriber of that retention policy.

Privacy Requirements

The verifier SHALL employ appropriately tailored privacy controls defined in [SP800-53] or an equivalent industry standard.

If CSPs or IdPs process attributes for purposes other than identity services (i.e., identity proofing, authentication, or attribute assertions), related fraud mitigation, or compliance with laws or legal processes, they SHALL implement measures to maintain predictability and manageability commensurate with the privacy risks that arise from the additional processing. Examples of such measures include providing clear notice, obtaining subscriber consent, and enabling the selective use or disclosure of attributes. When CSPs or IdPs use consent measures, they SHALL NOT make consent for the additional processing a condition of the identity service.

Regardless of whether the CSP or IdP is an agency or private-sector provider, the following requirements apply to federal agencies that offer or use the authentication service:

- The agency SHALL consult with its Senior Agency Official for Privacy (SAOP) and conduct an analysis to determine whether the collection of personal information to issue or maintain authenticators triggers the requirements of the Privacy Act of 1974 [PrivacyAct] (see Sec. 7.4).

- The agency SHALL publish a System of Records Notice (SORN) to cover such collections, as applicable.

- The agency SHALL consult with its SAOP and conduct an analysis to determine whether the collection of personal information to issue or maintain authenticators triggers the requirements of the E-Government Act of 2002 [E-Gov].

- The agency SHALL publish a Privacy Impact Assessment (PIA) to cover such collection, as applicable.

Redress Requirements

The verifier and associated CSP or IdP SHALL provide mechanisms for the redress of subscriber complaints and problems that arise from subscriber authentication processes, as described in Sec. 5.6 of [SP800-63]. These mechanisms SHALL be easy for subscribers to find and use. The CSP or IdP SHALL assess the mechanisms for efficacy in resolving complaints or problems.

Summary of Requirements

Table 1 provides a non-normative summary of the requirements for each of the AALs.

Table 1. Summary of requirements by AAL

| Requirement | AAL1 | AAL2 | AAL3 |

|---|---|---|---|

| Permitted Authenticator Types | Any AAL2 or AAL3 authenticator type; Password; Look-up secret; Out-of-band; SF OTP; SF cryptographic | MF cryptographic; MF out-of-band; MF OTP; Password or biometric comparison plus: SF cryptographic, Look-up secret, Out-of-band, or SF OTP | MF cryptographic; SF cryptographic plus: Password or Biometric comparison |

| FIPS 140 Validation (Government Verifiers and Authenticators) | Verifiers: Level 1 | Verifiers: Level 1; Authenticators: Level 1 overall | Verifiers: Level 1; Authenticators: Level 1 overall |

| Reauthentication (recommended) | 30 days overall | 24 hours overall; 1 hour inactivity; Single factor required | 12 hours overall; 15 minutes inactivity |

| Phishing Resistance | Not required | Recommended; Must be available | Required |

| Replay Resistance | Not required | Required | Required |

| Authentication Intent | Not required | Recommended | Required |

| Key Exportability | Permitted | Permitted | Prohibited |
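The permitted authenticator types in Table 1 can be read as a rule over the set of authenticators presented in one authentication event. The sketch below is illustrative only: the enum `Auth` and the function `permitted_types_ok` are hypothetical names, and the check covers type combinations alone. It does not evaluate phishing resistance, replay resistance, authentication intent, key exportability, or FIPS 140 validation, all of which also apply.

```python
from enum import Enum


class Auth(Enum):
    """Authenticator types named in Sec. 2 (enum names are illustrative)."""
    PASSWORD = "password"
    LOOKUP_SECRET = "look-up secret"
    OUT_OF_BAND = "out-of-band device"
    SF_OTP = "single-factor OTP"
    SF_CRYPTO = "single-factor cryptographic"
    MF_OUT_OF_BAND = "multi-factor out-of-band"
    MF_OTP = "multi-factor OTP"
    MF_CRYPTO = "multi-factor cryptographic"
    BIOMETRIC = "biometric comparison"  # not an authenticator by itself


MULTI_FACTOR = {Auth.MF_OUT_OF_BAND, Auth.MF_OTP, Auth.MF_CRYPTO}
PHYSICAL_SINGLE_FACTOR = {Auth.LOOKUP_SECRET, Auth.OUT_OF_BAND,
                          Auth.SF_OTP, Auth.SF_CRYPTO}
SECOND_FACTOR = {Auth.PASSWORD, Auth.BIOMETRIC}


def permitted_types_ok(used: set[Auth], aal: int) -> bool:
    """Check only the type-combination rule for the requested AAL."""
    has_second = bool(used & SECOND_FACTOR)
    if aal == 1:
        # Any listed authenticator type; a biometric alone does not count.
        return bool(used - {Auth.BIOMETRIC})
    if aal == 2:
        return bool(used & MULTI_FACTOR) or (
            bool(used & PHYSICAL_SINGLE_FACTOR) and has_second)
    if aal == 3:
        return Auth.MF_CRYPTO in used or (Auth.SF_CRYPTO in used and has_second)
    raise ValueError("aal must be 1, 2, or 3")
```

For example, a password combined with a single-factor OTP satisfies the AAL2 type rule but not the AAL3 rule, which requires a cryptographic authenticator.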

Authenticator and Verifier Requirements

This section is normative.

This section provides detailed requirements that are specific to each type of authenticator. With the exception of the reauthentication requirements specified in Sec. 2 and the requirement for phishing resistance at AAL3 described in Sec. 3.2.5, the technical requirements for each authenticator type are the same, regardless of the AAL at which the authenticator is used.

In federated applications described in [SP800-63C], the authentication functions of an IdP correspond closely with those of a CSP or verifier. In the discussion below, the requirements associated with a CSP also apply to an IdP.

In many circumstances, subscribers need to share devices that are used in authentication processes, such as a family phone that receives OTPs. In public-facing applications, CSPs SHOULD NOT prevent a device from being registered as an authenticator by multiple subscribers. However, they MAY establish restrictions to prevent large-scale fraud or misuse (e.g., limiting the total number of subscriber accounts a single device can be registered with).

Authentication, authenticator binding (discussed in Sec. 4.1), and session management (discussed in Sec. 5) are based on proof of possession of one or more types of secrets, as shown in Table 2.

Table 2. Summary of secrets (non-normative)

| Type of Secret | Purpose | Reference Section |

|---|---|---|

| Password | A subscriber-chosen secret used as an authentication factor | 3.1.1 |

| Look-up secret | A secret issued by a verifier and used only once to prove possession of the secret | 3.1.2 |

| Out-of-band secret | A short-lived secret generated by a verifier and independently sent to a subscriber’s device to verify its possession | 3.1.3 |

| One-time password (OTP) | A secret generated by an authenticator and used only once to prove possession of the authenticator | 3.1.4, 3.1.5 |

| Activation secret | A password that is used locally as an activation factor for a multi-factor authenticator | 3.2.10 |

| Long-term authenticator secret | A secret embedded in a physical authenticator to allow it to function for authentication | 4.1 |

| Recovery code | A secret issued to the subscriber to allow them to recover an account at which they are no longer able to authenticate | 4.2 |

| Session secret | A secret issued by the verifier at authentication and used to establish the continuity of authenticated sessions | 5.1 |

Requirements by Authenticator Type

The following requirements apply to specific authenticator types.

Passwords

A password (sometimes referred to as a passphrase or, if numeric, a personal identification number [PIN]) is a secret value intended to be chosen and either memorized or recorded by the subscriber. Passwords must be of sufficient effective strength and secrecy that it would be impractical for an attacker to guess or otherwise discover the correct secret value. A password is “something you know.”

The requirements in this section apply to centrally verified passwords that are used as independent authentication factors and sent over an authenticated protected channel to the verifier. Passwords used locally as an activation factor for a multi-factor authenticator (e.g., an unlock PIN) are referred to as activation secrets and discussed in Sec. 3.2.10. In contrast to centrally verified passwords, activation secrets (similar to the unlock passwords or PINs on many devices) are not sent to the verifier and are instead used locally to gain access to the authentication secret.

Passwords are not phishing-resistant.

Password Authenticators

Passwords SHALL either be chosen by the subscriber or assigned randomly by the CSP.

If the CSP disallows a chosen password because it is on a blocklist of commonly used, expected, or compromised values (see Sec. 3.1.1.2), the subscriber SHALL be required to choose a different password. Other composition requirements for passwords SHALL NOT be imposed. A rationale for this is presented in Appendix A, Strength of Passwords.

Password Verifiers

The following requirements apply to passwords.

- Verifiers and CSPs SHALL require passwords that are used as a single-factor authentication mechanism to be a minimum of 15 characters in length. Verifiers and CSPs MAY allow passwords that are only used as part of multi-factor authentication processes to be shorter but SHALL require them to be a minimum of eight characters in length.

- Verifiers and CSPs SHOULD permit a maximum password length of at least 64 characters.

- Verifiers and CSPs SHOULD accept all printing ASCII [RFC20] characters and the space character in passwords.

- Verifiers and CSPs SHOULD accept Unicode [ISO/ISC 10646] characters in passwords. Each Unicode code point SHALL be counted as a single character when evaluating password length.

- Verifiers and CSPs SHALL NOT impose other composition rules (e.g., requiring mixtures of different character types) for passwords.

- Verifiers and CSPs SHALL NOT require subscribers to change passwords periodically. However, verifiers SHALL force a change if there is evidence that the authenticator has been compromised.

- Verifiers and CSPs SHALL NOT permit the subscriber to store a hint (e.g., a reminder of how the password was created) that is accessible to an unauthenticated claimant.

- Verifiers and CSPs SHALL NOT prompt subscribers to use knowledge-based authentication (KBA) (e.g., “What was the name of your first pet?”) or security questions when choosing passwords.

- Verifiers SHALL request the password to be provided in full (not a subset of it) and SHALL verify the entire submitted password (e.g., not truncate it).
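The length requirements in the list above can be illustrated with a short check. This is a sketch under stated assumptions: `length_problem` and the constant names are hypothetical, and `MAX_LEN` is set to 64 only as an example of the smallest maximum the text recommends permitting. No composition rules are applied, and Python's `len()` on a `str` counts Unicode code points, which matches the counting rule above.

```python
from typing import Optional

MIN_LEN_SINGLE_FACTOR = 15  # password is the only authentication factor
MIN_LEN_MULTI_FACTOR = 8    # password is used only as part of multi-factor authentication
MAX_LEN = 64                # assumed cap; the text recommends permitting at least 64


def length_problem(password: str, single_factor: bool) -> Optional[str]:
    """Return a reason for rejection based on length, or None if acceptable."""
    length = len(password)  # one Unicode code point counts as one character
    minimum = MIN_LEN_SINGLE_FACTOR if single_factor else MIN_LEN_MULTI_FACTOR
    if length < minimum:
        return f"password must be at least {minimum} characters"
    if length > MAX_LEN:
        return f"password must be at most {MAX_LEN} characters"
    return None  # deliberately no checks for character classes
```

An all-digit password such as `12345678` passes when used as part of multi-factor authentication, because only length is evaluated here; blocklist screening is a separate step.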

If Unicode characters are accepted in passwords, the verifier SHOULD apply the normalization process for stabilized strings using the Normalization Form Canonical Composition (NFC) normalization defined in Sec. 12.1 of Unicode Normalization Forms [UAX15]. This process is applied before hashing the byte string that represents the password. Subscribers choosing passwords that contain Unicode characters SHOULD be advised that some endpoints may represent some characters differently, which would affect their ability to authenticate successfully.
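A minimal sketch of normalizing before hashing follows, using Python's standard `unicodedata` module. Several choices here are assumptions rather than requirements stated above: the UTF-8 encoding, PBKDF2 with SHA-256 as the hashing scheme, the iteration count, and the function names. The rejection of unassigned code points is likewise an assumption about what the stabilized-strings process requires and should be confirmed against [UAX15].

```python
import hashlib
import unicodedata


def normalized_password_bytes(password: str) -> bytes:
    """Apply NFC and return the byte string that will be hashed."""
    for ch in password:
        # Assumption: stabilized-string processing rejects unassigned code points.
        if unicodedata.category(ch) == "Cn":
            raise ValueError("password contains an unassigned code point")
    return unicodedata.normalize("NFC", password).encode("utf-8")


def hash_password(password: str, salt: bytes, iterations: int = 600_000) -> bytes:
    """Hash the normalized password (PBKDF2 is an illustrative choice)."""
    return hashlib.pbkdf2_hmac(
        "sha256", normalized_password_bytes(password), salt, iterations)
```

With this in place, a password typed as the precomposed character U+00E9 and the same password typed as `e` followed by the combining accent U+0301 produce the same hash, which is the interoperability problem the normalization step is meant to avoid.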

When processing a request to establish or change a password, verifiers SHALL compare the prospective secret against a blocklist that contains known commonly used, expected, or compromised passwords. The entire password SHALL be subject to comparison, not substrings or words that might be contained therein. For example, the list may include:

- Passwords obtained from previous breach corpuses

- Dictionary words

- Context-specific words, such as the name of the service, the username, and derivatives thereof

If the chosen password is found on the blocklist, the CSP SHALL require the subscriber to select a different secret and SHALL provide the reason for rejection. Since the blocklist is used to defend against brute-force attacks and unsuccessful attempts are rate-limited, the blocklist SHOULD be of sufficient size to prevent subscribers from choosing passwords that attackers are likely to guess before reaching the attempt limit.

Excessively large blocklists are of little incremental security benefit because the blocklist is used to defend against online attacks, which are already limited by the throttling requirements described in Sec. 3.2.2.

Verifiers SHALL offer guidance to the subscriber to help the subscriber choose a strong password. This is particularly important following the rejection of a password on the blocklist as it discourages trivial modifications of listed weak passwords [Blocklists].

Verifiers SHALL implement a rate-limiting mechanism that effectively limits the number of failed authentication attempts that can be made on the subscriber account, as described in Sec. 3.2.2.

Verifiers SHALL allow the use of password managers and autofill functionality. Verifiers SHOULD permit claimants to use the “paste” function when entering a password to facilitate password manager use when password autofill APIs are unavailable. Password managers have been shown to increase the likelihood that subscribers will choose stronger passwords, particularly if the password managers include password generators [Managers].

To help the claimant successfully enter a password, the verifier SHOULD offer an option to display the password — rather than a series of dots or asterisks — while it is entered and until it is submitted to the verifier. This allows the claimant to confirm their entry if they are in a location where their screen is unlikely to be observed. The verifier MAY also permit the claimant’s device to display individual entered characters for a short time after each character is typed to verify the correct entry. This is common on mobile devices.

Verifiers MAY make limited allowances for mistyping (e.g., removing leading and trailing whitespace characters before verification, allowing the verification of passwords with differing cases for the leading character) if the password remains at least the required minimum length after such processing and the complexity of the resulting password is not significantly reduced.

Verifiers and CSPs SHALL use approved encryption and an authenticated protected channel when requesting passwords.

Verifiers SHALL store passwords in a form that is resistant to offline attacks. Passwords SHALL be salted and hashed using a suitable password hashing scheme. Password hashing schemes take a password, a salt, and a cost factor as inputs and generate a password hash. Their purpose is to make each password guess more expensive for an attacker who has obtained a hashed password file, thereby making the cost of a guessing attack high or prohibitive. The chosen cost factor SHOULD be as high as practical without negatively impacting verifier performance. It SHOULD be increased over time to account for increases in computing performance. An approved password hashing scheme published in the latest revision of [SP800-132] or updated NIST guidelines on password hashing schemes SHOULD be used. The chosen output length of the password verifier, excluding the salt and versioning information, SHOULD be the same as the length of the underlying password hashing scheme output.

The salt SHALL be at least 32 bits in length and chosen to minimize salt value collisions among stored hashes (i.e., to prevent multiple subscriber accounts from having the same hashed password). Both the salt value and the resulting hash SHALL be stored for each password. A reference to the password hashing scheme used, including the cost factor, SHOULD be stored for each password to allow migration to new algorithms and work factors.
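As a rough illustration of these storage requirements, the sketch below uses PBKDF2 from the Python standard library. The record layout, the scheme label, and the iteration count are assumptions chosen for the example; the scheme and cost factor for a real system should come from [SP800-132] or updated NIST guidance.

```python
import hashlib
import hmac
import secrets

SCHEME = "pbkdf2-hmac-sha256"  # hypothetical label stored with each record
ITERATIONS = 600_000           # illustrative cost factor; tune for the deployment


def hash_password(password_bytes: bytes, iterations: int = ITERATIONS) -> dict:
    """Return a record holding the scheme, cost factor, salt, and hash."""
    salt = secrets.token_bytes(16)  # 128 bits, well above the 32-bit minimum
    digest = hashlib.pbkdf2_hmac("sha256", password_bytes, salt, iterations)
    return {"scheme": SCHEME, "iterations": iterations, "salt": salt, "hash": digest}


def verify_password(password_bytes: bytes, record: dict) -> bool:
    """Recompute the hash with the stored salt and cost factor, then compare."""
    digest = hashlib.pbkdf2_hmac(
        "sha256", password_bytes, record["salt"], record["iterations"]
    )
    return hmac.compare_digest(digest, record["hash"])


record = hash_password(b"correct horse battery staple", iterations=1_000)
print(verify_password(b"correct horse battery staple", record))
print(verify_password(b"wrong guess", record))
```

Storing the scheme and iteration count alongside each hash lets the verifier raise the cost factor later and rehash a password the next time it is presented.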

In addition, verifiers SHOULD perform an additional iteration of a keyed hashing or encryption operation using a secret key known only to the verifier. If used, this key value SHALL be generated by an approved random bit generator, as described in Sec. 3.2.12. The secret key value SHALL be stored separately from the hashed passwords. It SHOULD be stored and used within a hardware-protected area, such as a hardware security module (HSM), a trusted execution environment (TEE), or a trusted platform module (TPM). With this additional iteration, brute-force attacks on the hashed passwords are impractical as long as the secret key value remains secret.
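The additional keyed iteration might look like the following sketch, which applies HMAC-SHA-256 to an already salted and hashed password. The function name is hypothetical, and the in-memory key is only a stand-in: in practice the key would be generated by an approved random bit generator and held in hardware-protected storage, separate from the password database.

```python
import hashlib
import hmac
import secrets


def apply_verifier_key(password_hash: bytes, verifier_key: bytes) -> bytes:
    """Run one keyed-hash iteration over the output of the password hashing scheme."""
    return hmac.new(verifier_key, password_hash, hashlib.sha256).digest()


# Stand-in for a key that would normally never leave an HSM or similar device.
verifier_key = secrets.token_bytes(32)

# Placeholder for the salted password hash produced by the hashing scheme.
salted_hash = hashlib.pbkdf2_hmac("sha256", b"example password", b"\x00" * 16, 1_000)

stored_value = apply_verifier_key(salted_hash, verifier_key)
print(len(stored_value))  # 32 bytes; this is the value kept in the database
```

An attacker who obtains only the database cannot test guesses offline because each guess also requires the verifier's key.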

Look-Up Secrets

A look-up secret authenticator is a physical or electronic record that stores a set of secrets shared between the claimant and the CSP. The claimant uses the authenticator to look up the appropriate secrets needed to respond to a prompt from the verifier. For example, the verifier could ask a claimant to provide a specific subset of the numeric or character strings printed on a card in table format. A typical application of look-up secrets is for one-time saved recovery codes (see Sec. 4.2.1.1) that the subscriber stores for use if another authenticator is lost or malfunctions. A look-up secret is “something you have.”

Look-up secrets are not phishing-resistant.

Look-Up Secret Authenticators

CSPs that create look-up secret authenticators SHALL use an approved random bit generator, as described in Sec. 3.2.12, to generate the list of secrets and SHALL deliver the authenticator list securely to the subscriber (e.g., in an in-person session, via an online session, through the postal mail to a contact address). If delivered via an online session, the session SHALL be authenticated by the subscriber at AAL2 or higher and SHALL deliver the secrets through an authenticated protected channel and in accordance with the post-enrollment binding requirements in Sec. 4.1.2. Look-up secrets SHALL be at least six decimal digits (or equivalent) in length. Additional requirements described in Sec. 3.1.2.2 may also apply, depending on their length.
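A minimal sketch of generating a list of look-up secrets follows. It relies on Python's `secrets` module, which draws from the operating system's random source; whether that source counts as an approved random bit generator depends on the platform and is an assumption here. The function name and default sizes are also illustrative.

```python
import secrets


def generate_lookup_secrets(count: int = 10, digits: int = 8) -> list:
    """Generate `count` distinct decimal secrets of `digits` digits each."""
    if digits < 6:
        raise ValueError("look-up secrets need at least six decimal digits")
    if count > 10 ** digits:
        raise ValueError("cannot generate that many distinct secrets")
    codes = set()
    while len(codes) < count:
        # randbelow() gives a uniform value; zero-pad to a fixed width.
        codes.add(f"{secrets.randbelow(10 ** digits):0{digits}d}")
    return list(codes)


for code in generate_lookup_secrets(count=5, digits=8):
    print(code)
```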

Look-Up Secret Verifiers

Verifiers of look-up secrets SHALL prompt the claimant for a secret from their authenticator. A secret from a look-up secret authenticator SHALL be used successfully only once. If the look-up secret is derived from a grid card, each grid cell SHOULD be used only once, which limits the number of authentications that can be accomplished using look-up secrets. A very long list of secrets is potentially required.

Verifiers SHALL store look-up secrets in a form that is resistant to offline attacks. All look-up secrets SHALL be stored in a hashed form using an approved hashing function.

Look-up secrets SHALL be at least six decimal digits (or equivalent) in length, as specified in Sec. 3.1.2.1. Look-up secrets that are shorter than specified lengths have additional verification requirements as follows:

- Look-up secrets that are shorter than the minimum security strength specified in the latest revision of [SP800-131A] (i.e., 112 bits as of the date of this publication) SHALL be stored in a salted and hashed form using a suitable password hashing scheme, as described in Sec. 3.1.1.2. The salt value SHALL be at least 32 bits in length and arbitrarily chosen to minimize salt value collisions among stored hashes. Both the salt value and the resulting hash SHALL be stored for each look-up secret.

- The verifier SHALL implement a rate-limiting mechanism that effectively limits the number of failed authentication attempts that can be made on the subscriber account, as described in Sec. 3.2.2.

The verifier SHALL use approved encryption and an authenticated protected channel when requesting look-up secrets.
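The storage and single-use rules for short look-up secrets can be sketched as follows. The class name, the use of PBKDF2, and the iteration count are assumptions for illustration, and rate limiting is deliberately left out to keep the example short.

```python
import hashlib
import hmac
import secrets


class LookupSecretStore:
    """Holds salted hashes of look-up secrets and allows each to be used once."""

    def __init__(self, secrets_list, iterations: int = 100_000):
        self._iterations = iterations
        self._records = []
        for secret in secrets_list:
            salt = secrets.token_bytes(16)  # a separate salt per secret
            self._records.append((salt, self._hash(secret, salt)))

    def _hash(self, secret: str, salt: bytes) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", secret.encode(), salt, self._iterations)

    def redeem(self, candidate: str) -> bool:
        """Return True and consume the secret if the candidate matches an unused one."""
        for index, (salt, digest) in enumerate(self._records):
            if hmac.compare_digest(self._hash(candidate, salt), digest):
                del self._records[index]  # a secret is accepted only once
                return True
        return False


store = LookupSecretStore(["12345678", "87654321"], iterations=1_000)
print(store.redeem("12345678"))  # first use succeeds
print(store.redeem("12345678"))  # reuse is refused
```

Because every stored secret has its own salt, the candidate must be rehashed for each remaining record, which is acceptable for the short lists typical of recovery codes.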

Out-of-Band Devices

An out-of-band authenticator is a physical device that is uniquely addressable and can communicate securely with the verifier over an independent communications channel, which is referred to as the secondary channel. The device is possessed and controlled by the claimant and supports private communication over this secondary channel, which is separate from the primary channel for authentication. An out-of-band authenticator is “something you have.” Examples of out-of-band devices include smartphones equipped with applications that allow the verifier to independently communicate with the subscriber and devices that receive text messages or audio calls from the verifier.

Out-of-band authentication uses a short-term secret generated by the verifier. The secret securely binds the authentication operation on the primary and secondary channels and establishes the claimant’s control of the out-of-band device.

Out-of-band authentication is not phishing-resistant.

The out-of-band authenticator can operate in one of the following ways:

- The claimant transfers a secret received by the out-of-band device via the secondary channel to the verifier using the primary channel. For example, the claimant may receive the secret (typically a 6-digit code) on their mobile device and type it into their authentication session. This method is shown in Fig. 1.

Fig. 1. Transfer of secret to primary device

- The claimant transfers a secret received via the primary channel to the out-of-band device for transmission to the verifier via the secondary channel. For example, the claimant may view the secret on their authentication session and either type it into an application on their mobile device or use a technology such as a barcode or QR code to effect the transfer. This method is shown in Fig. 2.

Fig. 2. Transfer of secret to out-of-band device

Note: A third method of out-of-band authentication compares the secrets received from the primary and secondary channels and requests approval on the secondary channel. This method is no longer considered acceptable because it increases the likelihood that the subscriber would approve an authentication request without actually comparing the secrets as required. This has been observed with “authentication fatigue” attacks in which an attacker generates many out-of-band authentication requests to the subscriber, who might approve one to eliminate the annoyance. For this reason, these guidelines require the transfer of the secret between the out-of-band device and the primary channel to increase assurance that the subject is actively participating in the session with the verifier. Presenting the claimant with a list of secrets to compare is not sufficient to meet this requirement, as the claimant may reasonably guess the secret due to the limited size of the list that can be presented.

Out-of-Band Authenticators

The out-of-band authenticator SHALL establish a separate channel with the verifier to retrieve the out-of-band secret or authentication request. This channel is considered to be out-of-band with respect to the primary communication channel (even if it terminates on the same device), provided that the device does not leak information from one channel to the other without the claimant’s participation.

The out-of-band device SHOULD be uniquely addressable by the verifier. Communication over the secondary channel SHALL use approved encryption unless sent via the public switched telephone network (PSTN). For additional authenticator requirements that are specific to using the PSTN for out-of-band authentication, see Sec. 3.1.3.3.

Email SHALL NOT be used for out-of-band authentication because it may be vulnerable to:

- Access using only a password

- Interception in transit or at intermediate mail servers

- Rerouting attacks, such as those caused by Domain Name System (DNS) spoofing

Confirmation codes that are sent to validate email addresses or are issued as recovery codes (see Sec. 4.2.1.2) are not authentication processes and are not affected by the above prohibition.

The out-of-band authenticator SHALL uniquely authenticate itself in one of the following ways when communicating with the verifier:

- Using approved cryptography, establish a mutually authenticated protected channel (e.g., client-authenticated transport layer security (TLS) [RFC8446]) with the verifier. Communication between the out-of-band authenticator and the verifier MAY use a trusted intermediary service to which each authenticates. The key used to establish the channel SHALL be provisioned in a mutually authenticated session during authenticator binding, as described in Sec. 4.1.

- Authenticate to a public mobile telephone network using a SIM card or equivalent secret that uniquely identifies the subscriber. This method SHALL only be used if a secret is sent from the verifier to the out-of-band device via the PSTN (i.e., SMS or voice) or an encrypted instant messaging service.

- Use a wired connection to the PSTN that the verifier can call and dictate the out-of-band secret. For the purposes of this definition, “wired connection” includes services such as cable providers that offer PSTN services through other wired media and fiber via analog telephone adapters.

For single-factor out-of-band authenticators, if a secret is sent by the verifier to the out-of-band device, the device SHOULD NOT display the authentication secret while it is locked by the owner. Rather, the device SHOULD require the presentation and verification of a PIN, passcode, or biometric characteristic to view the secret. However, authenticators SHOULD indicate the receipt of an authentication secret on a locked device.

If the out-of-band authenticator requests approval over the secondary communication channel rather than by presenting a secret that the claimant transfers to the primary communication channel, it SHALL accept a transfer of the secret from the primary channel and send it to the verifier over the secondary channel to associate the approval with the authentication transaction. The claimant MAY perform the transfer manually or with the assistance of a representation, such as a barcode or quick response (QR) code.

Out-of-Band Verifiers

The verifier waits for an authenticated protected channel to be established with the out-of-band authenticator and verifies its identifying key. The verifier SHALL NOT store the identifying key itself but SHALL use a verification method (e.g., an approved hash function or proof of possession of the identifying key) to uniquely identify the authenticator. Once authenticated, the verifier transmits the authentication secret to the authenticator. The connection with the out-of-band authenticator MAY be either manually initiated or prompted by a mechanism, such as a push notification.

Depending on the type of out-of-band authenticator, one of the following SHALL take place:

- Transfer of the secret from the secondary to the primary channel. As shown in Fig. 1, the verifier MAY signal the device that contains the subscriber’s authenticator to indicate a readiness to authenticate. It SHALL then transmit a random secret to the out-of-band authenticator and wait for the secret to be returned via the primary communication channel.

- Transfer of the secret from the primary to the secondary channel. As shown in Fig. 2, the verifier SHALL transmit a random authentication secret to the claimant via the primary channel. It SHALL then wait for the secret to be returned via the secondary channel from the claimant’s out-of-band authenticator. The verifier MAY additionally display an address, such as a phone number or VoIP address, for the claimant to use in addressing its response to the verifier.

In all cases, the authentication SHALL be considered invalid unless completed within 10 minutes. Verifiers SHALL accept a given authentication secret as valid only once during the validity period to provide replay resistance, as described in Sec. 3.2.7.

The verifier SHALL generate random authentication secrets that are at least six decimal digits (or equivalent) in length using an approved random bit generator as described in Sec. 3.2.12. If the authentication secret is less than 64 bits long, the verifier SHALL implement a rate-limiting mechanism that effectively limits the total number of consecutive failed authentication attempts that can be made on the subscriber account as described in Sec. 3.2.2. Generating a new authentication secret SHALL NOT reset the failed authentication count.
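The verifier-side rules on secret length, the 10-minute validity period, single use, and the failed-attempt count can be sketched together. The class name, the limit of five failures, and resetting the counter after a successful authentication are assumptions of this example; timestamps are passed in explicitly so that the behavior is easy to follow.

```python
import hmac
import secrets

VALIDITY_SECONDS = 10 * 60  # authentication is invalid after 10 minutes


class OutOfBandVerifier:
    """Tracks one subscriber account's out-of-band secret and failure count."""

    def __init__(self, max_failures: int = 5):
        self.max_failures = max_failures  # hypothetical throttling limit
        self.failed_attempts = 0
        self._secret = None
        self._issued_at = 0.0

    def new_secret(self, now: float) -> str:
        """Issue a fresh six-digit secret WITHOUT resetting the failure count."""
        self._secret = f"{secrets.randbelow(10 ** 6):06d}"
        self._issued_at = now
        return self._secret

    def verify(self, submitted: str, now: float) -> bool:
        if self.failed_attempts >= self.max_failures:
            return False  # throttled
        expired = self._secret is None or now - self._issued_at > VALIDITY_SECONDS
        if not expired and hmac.compare_digest(submitted.encode(), self._secret.encode()):
            self._secret = None       # accept a given secret only once
            self.failed_attempts = 0  # assumption: reset after success
            return True
        self.failed_attempts += 1
        return False


verifier = OutOfBandVerifier()
code = verifier.new_secret(now=0.0)
print(verifier.verify(code, now=120.0))  # within the validity period
print(verifier.verify(code, now=121.0))  # replay of the same secret is refused
```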

Out-of-band verifiers that send a push notification to a subscriber device SHOULD implement a reasonable limit on the rate or total number of push notifications that will be sent since the last successful authentication.

For additional verification requirements that are specific to the PSTN, see Sec. 3.1.3.3.

Authentication Using the Public Switched Telephone Network

Use of the PSTN for out-of-band verification is restricted as described in this section and SHALL satisfy the requirements of Sec. 3.2.9. Setting or changing the pre-registered telephone number is considered to be the binding of a new authenticator and SHALL only occur as described in Sec. 4.1.2.

Some subscribers may be unable to use PSTN to deliver out-of-band authentication secrets in areas with limited telephone coverage, particularly without mobile phone service. Accordingly, verifiers SHALL ensure that alternative authenticator types are available to all subscribers and SHOULD remind subscribers of this limitation of PSTN out-of-band authenticators before binding one or more devices controlled by the subscriber.

Verifiers SHOULD consider risk indicators (e.g., device swap, SIM change, number porting, other abnormal behavior) before using the PSTN to deliver an out-of-band authentication secret.

Consistent with the discussion of restricted authenticators in Sec. 3.2.9, NIST may adjust the restricted status of out-of-band authentication using the PSTN based on the evolution of the threat landscape and the technical operation of the PSTN.

Multi-Factor Out-of-Band Authenticators

Multi-factor out-of-band authenticators operate similarly to single-factor out-of-band authenticators (see Sec. 3.1.3.1). However, they require the presentation and verification of an activation factor (i.e., a password or a biometric characteristic) before allowing the claimant to complete the authentication transaction (i.e., before accessing or entering the authentication secret as appropriate for the authentication flow being used). Each use of the authenticator SHALL require the presentation of the activation factor.

Authenticator activation secrets SHALL meet the requirements of Sec. 3.2.10. A biometric activation factor SHALL meet the requirements of Sec. 3.2.3, including limits on the number of consecutive authentication failures. The password or biometric sample used for activation and any biometric data derived from the biometric sample (e.g., a fingerprint image and feature locations produced by a fingerprint feature extractor) SHALL be erased immediately after an authentication operation.

Single-Factor OTP

A single-factor OTP authenticator generates one-time passwords (OTPs). This category includes hardware devices and software-based OTP generators that are installed on devices such as mobile phones. These authenticators have an embedded secret that is used as the seed for generating OTPs and do not require activation through a second factor. The OTP is displayed on the authenticator and manually input for transmission to the verifier, thereby proving possession and control of the authenticator. A single-factor OTP authenticator is “something you have.”

Single-factor OTPs are similar to look-up secret authenticators except that the secrets are cryptographically and independently generated by the authenticator and the verifier and compared by the verifier. The secret is computed based on a nonce that may be time-based or from a counter on the authenticator and verifier.

OTP authentication is not phishing-resistant. [FIPS140] validation of OTP authenticators and verifiers is not required.

Single-Factor OTP Authenticators

Single-factor OTP authenticators and verifiers contain two persistent values: 1) a symmetric key that persists for the authenticator’s lifetime and 2) a nonce that is either changed each time the authenticator is used or is based on a real-time clock.

The secret key and its algorithm SHALL provide at least the minimum security strength specified in the latest revision of [SP800-131A] (i.e., 112 bits as of the date of this publication). The nonce SHALL be of sufficient length to ensure that it is unique for each operation of the authenticator over its lifetime. If a subscriber needs to change the device on which a software-based OTP authenticator resides, they SHOULD bind the authenticator application on the new device to their subscriber account, as described in Sec. 4.1.2, and invalidate the authenticator application that will no longer be used. Alternatively, the subscriber MAY export the secret key and store it in a sync fabric that meets the requirements in Appendix B.2 and then retrieve the key with their new device.

The authenticator output is obtained using an approved block cipher or hash function to securely combine the key and nonce. In coordination with the verifier, the authenticator MAY truncate its output to as few as six decimal digits (or an equivalent representation).

If the nonce used to generate the authenticator output is based on a real-time clock, the nonce SHALL be changed at least once every two minutes.
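One widely used way to combine a key and a nonce and truncate the result to six decimal digits is the HOTP construction of RFC 4226, with RFC 6238 (TOTP) deriving the nonce from the clock. The sketch below follows those RFCs as an illustration only; the guideline does not mandate them, and whether HMAC-SHA-1 is an acceptable choice for a given deployment is for the implementer to confirm. The 30-second time step is an assumption that stays within the two-minute limit.

```python
import hashlib
import hmac
import struct


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based OTP: HMAC over the nonce, then dynamic truncation."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                      # low nibble selects a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, unix_time: float, step_seconds: int = 30, digits: int = 6) -> str:
    """Time-based OTP: the nonce is the number of elapsed time steps."""
    return hotp(key, int(unix_time) // step_seconds, digits)


key = b"12345678901234567890"  # 160-bit test key from RFC 4226, not for real use
print(hotp(key, 0))    # RFC 4226 test vector: 755224
print(totp(key, 59))   # time step 1, same value as hotp(key, 1): 287082
```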

Single-Factor OTP Verifiers

Single-factor OTP verifiers effectively duplicate the process of generating the OTP used by the authenticator. As such, the symmetric keys used by authenticators are also present in the verifier and SHALL be strongly protected against unauthorized disclosure by access controls that limit access to the keys to only those software components that require access.

When binding a single-factor OTP authenticator to a subscriber account, the verifier or associated CSP SHALL use approved cryptography for key establishment to generate and exchange keys or to obtain the secrets required to duplicate the authenticator output.

The verifier SHALL use approved encryption and an authenticated protected channel when collecting the OTP. Verifiers SHALL accept a given OTP only once while it is valid to provide replay resistance, as described in Sec. 3.2.7. If a claimant’s authentication is denied due to the duplicate use of an OTP, verifiers MAY warn the claimant that an attacker might have been able to authenticate in advance. Verifiers MAY also warn a subscriber in an existing session of the attempted duplicate use of an OTP.

Time-based OTPs [TOTP] SHALL have a defined lifetime that is determined by the expected clock drift in either direction of the authenticator over its lifetime plus an allowance for network delay and claimant entry of the OTP.

The verifier SHOULD implement or, if the authenticator output is less than 64 bits in length, SHALL implement a rate-limiting mechanism that effectively limits the number of failed authentication attempts that can be made on the subscriber account, as described in Sec. 3.2.2.
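The verifier-side handling of clock drift and replay resistance for time-based OTPs can be sketched as below. The class name, the 30-second step, and the one-step drift allowance are assumptions. The OTP computation is injected as a function so that the sketch stays independent of any particular algorithm, and rate limiting is omitted for brevity.

```python
import hmac
from typing import Callable


class TimeBasedOtpVerifier:
    """Accepts an OTP within a drift window and never accepts the same step twice."""

    def __init__(self, otp_for_step: Callable[[int], str],
                 step_seconds: int = 30, drift_steps: int = 1):
        self._otp_for_step = otp_for_step  # recomputes the OTP for a time step
        self._step_seconds = step_seconds
        self._drift_steps = drift_steps    # allowance for clock drift and entry delay
        self._last_accepted_step = -1

    def verify(self, submitted: str, unix_time: float) -> bool:
        current = int(unix_time) // self._step_seconds
        for step in range(current - self._drift_steps, current + self._drift_steps + 1):
            if step <= self._last_accepted_step:
                continue  # replay resistance: this step (or a later one) was already used
            expected = self._otp_for_step(step)
            if hmac.compare_digest(submitted.encode(), expected.encode()):
                self._last_accepted_step = step
                return True
        return False


# Toy generator for demonstration only; a real verifier would compute the OTP
# from the symmetric key shared with the authenticator.
verifier = TimeBasedOtpVerifier(lambda step: f"{step % 10 ** 6:06d}")
print(verifier.verify("000002", unix_time=60))  # accepted
print(verifier.verify("000002", unix_time=60))  # duplicate use is refused
```

Tracking the last accepted time step, rather than a list of used OTP values, also refuses OTPs from earlier steps once a later one has been accepted.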

Multi-Factor OTPs

A multi-factor OTP authenticator generates one-time passwords for authentication following the input of an activation factor. This includes hardware devices and software-based OTP generators that are installed on mobile phones and similar devices. The second authentication factor may be provided through an integral entry pad, an integral biometric (e.g., fingerprint) reader, or a direct computer interface (e.g., universal serial bus [USB] port). The OTP is displayed on the authenticator and manually input for transmission to the verifier. The multi-factor OTP authenticator is “something you have” activated by either “something you know” or “something you are.”

OTP authentication is not phishing-resistant. [FIPS140] validation of OTP authenticators and verifiers is not required.

Multi-Factor OTP Authenticators

Multi-factor OTP authenticators operate similarly to single-factor OTP authenticators (see Sec. 3.1.4.1), except they require the presentation and verification of an activation factor (i.e., a password or a biometric characteristic) to obtain the OTP from the authenticator. Each use of the authenticator SHALL require the input of the activation factor.

In addition to activation information, multi-factor OTP authenticators and verifiers contain two persistent values: 1) a symmetric key that persists for the authenticator’s lifetime and 2) a nonce that is either changed each time the authenticator is used or based on a real-time clock.

The secret key and its algorithm SHALL provide at least the minimum security strength specified in the latest revision of [SP800-131A] (i.e., 112 bits as of the date of this publication). The nonce SHALL be of sufficient length to ensure that it is unique for each operation of the authenticator over its lifetime. If a subscriber needs to change the device on which a software-based OTP authenticator resides, they SHOULD bind the authenticator application on the new device to their subscriber account, as described in Sec. 4.1.2, and invalidate the authenticator application that will no longer be used. Alternatively, the subscriber MAY export the secret key and store it in a sync fabric that meets the requirements in Appendix B.2 and retrieve the key with their new device.

The authenticator output is obtained using an approved block cipher or hash function to securely combine the key and nonce. In coordination with the verifier, the authenticator MAY truncate its output to as few as six decimal digits (or an equivalent representation).

If the nonce used to generate the authenticator output is based on a real-time clock, the nonce SHALL be changed at least once every two minutes.

Authenticator activation secrets SHALL meet the requirements of Sec. 3.2.10. A biometric activation factor SHALL meet the requirements of Sec. 3.2.3, including limits on the number of consecutive authentication failures. The unencrypted key and activation secret or biometric sample and any biometric data derived from the biometric sample (e.g., a fingerprint image and feature locations produced by a fingerprint feature extractor) SHALL be erased immediately after an OTP has been generated.

Multi-Factor OTP Verifiers

Multi-factor OTP verifiers effectively duplicate the process of generating the OTP used by the authenticator without requiring a second authentication factor. As such, the symmetric keys used by authenticators are also present in the verifier and SHALL be strongly protected against unauthorized disclosure by access controls that limit access to the keys to only those software components that require access.

When binding a multi-factor OTP authenticator to a subscriber account, the verifier or associated CSP SHALL use approved cryptography for key establishment to generate and exchange keys or to obtain the secrets required to duplicate the authenticator output.

The verifier SHALL use approved encryption and an authenticated protected channel when collecting the OTP. Verifiers SHALL accept a given OTP only once while it is valid to provide replay resistance, as described in Sec. 3.2.7. If a claimant’s authentication is denied due to the duplicate use of an OTP, verifiers MAY warn the claimant that an attacker might have been able to authenticate in advance. Verifiers MAY also warn a subscriber in an existing session of the attempted duplicate use of an OTP.

Time-based OTPs [TOTP] SHALL have a defined lifetime that is determined by the expected clock drift in either direction of the authenticator over its lifetime plus an allowance for network delay and claimant entry of the OTP.

The verifier SHALL implement a rate-limiting mechanism that effectively limits the number of consecutive failed authentication attempts that can be made on the subscriber account, as described in Sec. 3.2.2.

Single-Factor Cryptographic Authentication

Single-factor cryptographic authentication is accomplished by proving the possession and control of a cryptographic key via an authentication protocol. Depending on the strength of authentication required, the authentication key may be stored in a manner that is accessible to the endpoint associated with the authenticator or in a separate, directly connected processor or device. The authenticator output is highly dependent on the specific cryptographic protocol used but is generally some type of signed message. A single-factor cryptographic authenticator is “something you have.” Single-factor cryptographic authenticators used at AAL3 SHALL use public-key cryptography to protect the authentication secrets from compromise of the verifier.

Cryptographic authentication is phishing-resistant if it meets the additional requirements in Sec. 3.2.5.

Single-Factor Cryptographic Authenticators

Single-factor cryptographic authenticators encapsulate one or more authentication keys. Authentication keys are described as either exportable (see Sec. 3.2.13) or non-exportable. Exportable authentication keys (usable at AAL2 or below) SHOULD be stored in appropriate storage that is available to the authenticator (e.g., keychain storage). If they are accessible to the endpoint being authenticated, exportable authentication keys SHALL be strongly protected against unauthorized disclosure with access controls that limit access to the key to only those software components that require access. Non-exportable authentication keys (usable at AAL3 or below) SHALL be stored in an isolated execution environment that is protected by hardware or in a separate processor with a controlled interface to the central processing unit of the user endpoint.

Some cryptographic authenticators, referred to as syncable authenticators, can manage their authentication keys using a sync fabric (e.g., a cloud provider). Additional requirements for using syncable authenticators are in Appendix B.

External (i.e., non-embedded) cryptographic authenticators SHALL meet the requirements for connected authenticators in Sec. 3.2.11.

As required by Sec. 2.3.2, single-factor cryptographic authenticators that are being used at AAL3 must meet the authentication intent requirements of Sec. 3.2.8.

Single-Factor Cryptographic Verifiers

Single-factor cryptographic verifiers generate a challenge nonce, send it to the corresponding authenticator, and use the authenticator output to verify possession of the authenticator. The authenticator output is highly dependent on the specific cryptographic authenticator and protocol used but is generally some type of signed message.

The verifier has either a symmetric key or an asymmetric public key that corresponds to each authenticator. While both types of keys SHALL be protected against modification, symmetric keys SHALL additionally be protected against unauthorized disclosure by access controls that limit access to the key to only those software components that require access.

The authentication key and its algorithm SHALL provide at least the minimum security strength specified in the latest revision of [SP800-131A] (i.e., 112 bits as of the date of this publication). The challenge nonce SHALL be at least 64 bits in length and SHALL either be unique over the authenticator’s lifetime or statistically unique (i.e., generated using an approved random bit generator, as described in Sec. 3.2.12). The verification operation SHALL use approved cryptography.
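The challenge-response exchange can be illustrated with a minimal sketch. To stay within the Python standard library, it uses a symmetric key with HMAC-SHA-256 rather than a signature; this is an assumption of the example, and a symmetric key would not satisfy the public-key requirement that applies at AAL3. All function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def new_challenge(num_bytes: int = 16) -> bytes:
    """Generate a statistically unique challenge nonce of at least 64 bits."""
    if num_bytes * 8 < 64:
        raise ValueError("challenge nonce must be at least 64 bits")
    return secrets.token_bytes(num_bytes)


def authenticator_response(key: bytes, challenge: bytes) -> bytes:
    """Authenticator side: prove possession of the key by MACing the challenge."""
    return hmac.new(key, challenge, hashlib.sha256).digest()


def verify_response(key: bytes, challenge: bytes, response: bytes) -> bool:
    """Verifier side: recompute the expected output and compare in constant time."""
    expected = hmac.new(key, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)


shared_key = secrets.token_bytes(32)  # 256-bit key, above the 112-bit minimum
challenge = new_challenge()
response = authenticator_response(shared_key, challenge)
print(verify_response(shared_key, challenge, response))        # valid response
print(verify_response(shared_key, new_challenge(), response))  # stale response fails
```

A response computed for one challenge does not verify against another, which is why the nonce needs to be unique for each authentication.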

Multi-Factor Cryptographic Authentication

Multi-factor cryptographic authentication uses an authentication protocol to prove possession and control of an authentication key that requires activation through a second authentication factor. Depending on the strength of authentication needed, the authentication key may be stored in a manner that is accessible to the endpoint being authenticated or in a separate, directly connected processor or device. The authenticator output is highly dependent on the specific cryptographic protocol used but is generally some type of signed message. A multi-factor cryptographic authenticator is “something you have” and is activated by an activation factor that represents either “something you know” or “something you are.” Multi-factor cryptographic authenticators used at AAL3 SHALL use public-key cryptography to protect the authentication secrets from compromise of the verifier.

Cryptographic authentication is phishing-resistant if it meets the additional requirements in Sec. 3.2.5.

Multi-Factor Cryptographic Authenticators

Multi-factor cryptographic authenticators encapsulate one or more authentication keys that SHALL only be accessible through the presentation and verification of an activation factor (i.e., a password or a biometric characteristic). Non-exportable authentication keys, suitable for use at AAL3, SHALL be stored in an isolated execution environment that is protected by hardware or in a separate processor with a controlled interface to the central processing unit of the user endpoint. Exportable authentication keys (usable at AAL2 or below) SHOULD be stored in appropriate storage that is available to the authenticator (e.g., keychain storage). If accessible to the endpoint being authenticated, authentication keys SHALL be strongly protected against unauthorized disclosure by using access controls that limit access to the authentication keys to only those software components that require access.

External (non-embedded) cryptographic authenticators SHALL meet the requirements for connected authenticators in Sec. 3.2.11. Each authentication event SHALL require input and verification of the local activation factor. Authenticator activation secrets SHALL meet the requirements of Sec. 3.2.10. A biometric activation factor SHALL meet the requirements of Sec. 3.2.3, including limits on the number of consecutive authentication failures. The activation secret or biometric sample and any biometric data derived from the biometric sample (e.g., a fingerprint image and feature locations produced by a fingerprint feature extractor) SHALL be erased after an authentication transaction.

Multi-Factor Cryptographic Verifiers

The requirements for a multi-factor cryptographic verifier are identical to those for a single-factor cryptographic verifier, as described in Sec. 3.1.6.2. Some multi-factor authenticators include flags to indicate whether an activation factor was used. If such a flag is present and indicates that an activation factor was not used, the authentication SHALL be treated as single-factor. Otherwise, verification of the output from a multi-factor cryptographic authenticator indicates that the activation factor was used.
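The flag handling above reduces to a three-way decision, sketched here under stated assumptions. The function name `factors_established` and its inputs are hypothetical; the user verification flag in WebAuthn authenticator data is one example of such a flag, though the sketch is not tied to any particular protocol.

```python
from typing import Optional


def factors_established(output_valid: bool, activation_flag: Optional[bool]) -> int:
    """Return 0 (authentication failed), 1 (single-factor), or 2 (multi-factor).

    activation_flag is None when the authenticator output carries no such flag.
    """
    if not output_valid:
        return 0
    if activation_flag is False:
        # Flag is present and indicates that the activation factor was not used.
        return 1
    # No flag, or the flag indicates use: the verified output itself is taken
    # as evidence that the activation factor was used.
    return 2
```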

Usage With Subscriber-Controlled Wallets

A specific form of multi-factor cryptographic authentication is a subscriber-controlled wallet on the subscriber’s device, as described in Sec. 5 of [SP800-63C]. After the claimant first unlocks the wallet using an activation factor, the authentication process uses a federation protocol, as detailed in [SP800-63C]. The assertion contents and presentation requirements of the federation protocol provide the security characteristics required of cryptographic authenticators. As such, subscriber-controlled wallets on the subscriber’s device can be considered multi-factor authenticators through the activation factor and the presentation and validation of an assertion generated by the wallet.

Cloud-hosted wallets are not considered cryptographic multi-factor authenticators because access is maintained through authentication over the internet rather than locally. All authentication information from hosted wallets is treated as an assertion, as addressed in Sec. 5 of [SP800-63C].

Access to the private key SHALL require an activation factor. Authenticator activation secrets SHALL meet the requirements of Sec. 3.2.10. Biometric activation factors SHALL meet the requirements of Sec. 3.2.3, including limits on the number of consecutive authentication failures. The password or biometric sample used for activation and any biometric data derived from the biometric sample SHALL be erased immediately after an authentication transaction.

Authentication processes using subscriber-controlled wallets SHALL be used with a federation process as detailed in Sec. 5 of [SP800-63C]. Signed audience-restricted assertions that are generated by subscriber-controlled wallets are considered phishing-resistant because they prevent an assertion presented to an impostor RP from being accepted by the legitimate RP. Assertions that lack a valid signature from the wallet or an audience restriction SHALL NOT be considered phishing-resistant. Assertions SHALL also include sufficient information to determine the nature of the activation method used to activate the wallet.

Syncable Authenticators

Some cryptographic authenticators allow the subscriber to copy (i.e., clone) the authentication secret to additional devices, usually via a sync fabric. This eases the burden for subscribers who want to use additional devices to authenticate. Specific requirements for syncable authenticators and the sync fabric are given in Appendix B.

General Authenticator Requirements

The following requirements apply to all types of authentication.

Physical Authenticators

CSPs SHALL provide subscriber instructions for appropriately protecting the authenticator against theft or loss. The CSP SHALL provide a mechanism to invalidate the authenticator immediately upon notification from a subscriber that the authenticator’s loss, theft, or compromise is suspected.

Possession and control of a physical authenticator are based on proof of possession of a secret associated with the authenticator. When an embedded secret (typically a certificate and associated private key) is in the endpoint, its “device identity” can be considered a physical authenticator. However, this requires a secure authentication protocol that is appropriate for the AAL being authenticated. Browser cookies do not satisfy this requirement except as short-term secrets for session maintenance (not authentication), as described in Sec. 5.1.1.

Rate Limiting (Throttling)

When required by the authenticator type descriptions in Sec. 3.1, the verifier SHALL implement controls to protect against online guessing attacks. Unless otherwise specified in the description of a given authenticator, the verifier SHALL limit consecutive failed authentication attempts using a specific authenticator on a single subscriber account to no more than 100 by disabling that authenticator. If more than one authenticator is involved with an excessive number of authentication attempts (e.g., single-factor cryptographic authenticator and centrally verified password), both authenticators SHALL be disabled. Authenticators that have been disabled SHALL be required to rebind to the subscriber account, as described in Sec. 4.1, to be usable in the future.
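A minimal sketch of the consecutive-failure limit follows, assuming one counter per authenticator per subscriber account. The class `AuthenticatorThrottle` and its method names are hypothetical, and rebinding of a disabled authenticator is outside the sketch.

```python
MAX_CONSECUTIVE_FAILURES = 100  # upper bound from the text


class AuthenticatorThrottle:
    """Hypothetical failure counter for one authenticator on one account."""

    def __init__(self, limit: int = MAX_CONSECUTIVE_FAILURES) -> None:
        if not 1 <= limit <= MAX_CONSECUTIVE_FAILURES:
            raise ValueError("limit must be between 1 and 100")
        self.limit = limit
        self.failures = 0
        self.disabled = False

    def may_attempt(self) -> bool:
        return not self.disabled

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.limit:
            # The authenticator stays disabled until it is rebound to the account.
            self.disabled = True

    def record_success(self) -> None:
        # Only consecutive failures count, so a success starts the count over.
        if not self.disabled:
            self.failures = 0
```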

The limit of 100 attempts is an upper bound, and agencies MAY impose lower limits. The limit of 100 was chosen to balance the likelihood of a correct guess (e.g., 100 attempts against a six-digit decimal OTP authenticator output) versus the potential need for account recovery when the limit is exceeded.
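The trade-off in the six-digit example can be worked out directly. The sketch below assumes that the attacker makes distinct guesses against a single fixed, uniformly distributed code; the function name is illustrative only.

```python
from fractions import Fraction


def guess_success_probability(attempts: int, digits: int) -> Fraction:
    """Chance that one of `attempts` distinct guesses matches a fixed decimal code."""
    space = 10 ** digits
    return Fraction(min(attempts, space), space)


print(guess_success_probability(100, 6))  # 1/10000
```

Under these assumptions, an attacker who exhausts all 100 attempts against a six-digit OTP output succeeds with a probability of one in 10000.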

Additional techniques MAY be used to reduce the likelihood that an attacker will lock the legitimate claimant out due to rate limiting. These include:

- Requiring the claimant to complete a bot detection and mitigation challenge before attempting authentication

- Requiring the claimant to wait after a failed attempt for a period of time that increases as the subscriber account approaches its maximum allowance for consecutive failed attempts (e.g., 30 seconds up to an hour)

- Leveraging other risk-based or adaptive authentication techniques to identify claimant behaviors that fall within or outside of typical norms (e.g., the use of the claimant’s IP address, geolocation, timing of request patterns, or browser metadata)
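The increasing wait described in the second technique could be scheduled in many ways. The sketch below assumes a geometric ramp from 30 seconds after the first failure to one hour just before the limit is reached; the function `delay_seconds` and its parameters are hypothetical, and the text does not prescribe any particular schedule.

```python
def delay_seconds(failures: int, limit: int = 100,
                  min_delay: float = 30.0, max_delay: float = 3600.0) -> float:
    """Wait required before the next attempt, given consecutive failures so far."""
    if failures <= 0:
        return 0.0
    if limit <= 2 or failures >= limit - 1:
        return max_delay
    # Position between the first failure (0.0) and the last allowed one (1.0).
    fraction = (failures - 1) / (limit - 2)
    # Geometric interpolation: the delay grows by a constant ratio per failure.
    return min_delay * (max_delay / min_delay) ** fraction
```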

When the subscriber successfully authenticates, the verifier SHOULD disregard any previous failed attempts for the authenticators used in the successful authentication.

Following successful authentication at a given AAL, the verifier SHOULD reset the retry count of the authenticators that were used. If such a reset is provided, the maximum AAL of the authenticator being reset SHALL NOT exceed the AAL of the session from which it is being reset. If the subscriber cannot authenticate at the required AAL, the account recovery procedures in Sec. 4.2 SHALL be used.

Use of Biometrics

Biometrics is the automated recognition of individuals based on their biological and behavioral characteristics, such as fingerprints, voice patterns, facial features, keystroke patterns, angle of holding a smart phone, screen pressure, typing speed, mouse movements, or gait. Such characteristics have multiple modalities that may differ in the extent to which they establish authentication intent, as described in Sec. 3.2.8.

Biometric comparisons are based on a measurement from the biometric sensor (e.g., camera, fingerprint reader). This measurement is subject to noise and presentation variations that require setting an acceptance threshold based on the differences between the measured biometric and the reference against which it is being compared. Due to these factors, there is some probability that a comparison will not result in a match, which is referred to as a false non-match rate (FNMR). Similarly, there is a probability that an impostor comparison will result in a match, referred to as a false match rate (FMR). A high-quality biometric system has both a very low FMR and FNMR. The chosen threshold usually emphasizes a low FMR to maximize security since false non-matches can often be mitigated by repeating the measurement or using an alternative authentication method.
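The relationship between the threshold and the two error rates can be illustrated with a small calculation. The sketch assumes that higher comparison scores mean greater similarity and that a score at or above the threshold counts as a match; the function name and the sample scores are invented for illustration.

```python
from typing import Sequence, Tuple


def error_rates(genuine_scores: Sequence[float],
                impostor_scores: Sequence[float],
                threshold: float) -> Tuple[float, float]:
    """Return (FMR, FNMR) observed at the given acceptance threshold."""
    false_matches = sum(1 for s in impostor_scores if s >= threshold)
    false_non_matches = sum(1 for s in genuine_scores if s < threshold)
    return (false_matches / len(impostor_scores),
            false_non_matches / len(genuine_scores))


genuine = [0.91, 0.85, 0.40, 0.95]   # made-up scores for the true subscriber
impostor = [0.10, 0.30, 0.72, 0.05]  # made-up scores for other individuals

print(error_rates(genuine, impostor, 0.5))  # (0.25, 0.25)
print(error_rates(genuine, impostor, 0.9))  # (0.0, 0.5)
```

Raising the threshold from 0.5 to 0.9 removes the false match in this toy data at the cost of a higher FNMR.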

For a variety of reasons, this document supports only a limited use of biometrics for authentication. These reasons include:

- The biometric FMR does not provide sufficient confidence in the subscriber’s authentication by itself because it does not account for active impersonation attacks.

- Biometric comparison is probabilistic, whereas the other authentication factors are deterministic.

- Biometric template protection schemes provide a method for revoking biometric characteristics that is comparable to revocation for other authentication factors (e.g., PKI certificates, passwords). However, the availability of such solutions is limited.

- Biometric characteristics do not constitute secrets. They can often be obtained online or otherwise without consent. A facial image can be obtained by taking a picture; latent fingerprints can be obtained from objects someone touches; and iris patterns can be captured with high-resolution cameras. While presentation attack detection (PAD) technologies can mitigate the risks of these types of attacks, additional trust in the sensor or biometric processing is required to ensure that PAD is operating in accordance with the needs of the CSP and the subscriber.

- The use of biometric characteristics, especially when stored for central verification, introduces new privacy concerns, which are discussed further in Sec. 7.

Therefore, the limited use of biometrics for authentication is supported with specific requirements and guidelines.

Biometrics SHALL only be used as part of multi-factor authentication with a physical authenticator (i.e., “something you have”). The biometric characteristic SHALL be presented and compared for each authentication operation. An alternative non-biometric authentication option SHALL always be provided to the subscriber. Biometric data SHALL be treated and secured as sensitive personal information.

Biometric Accuracy

The biometric system SHALL operate with an FMR [ISO/IEC2382-37] of one in 10000 or better for all demographic groups. Demographic categories to be considered SHALL include sex and skin tone when these factors affect biometric performance. This FMR SHALL be achieved under the conditions of a conformant attack (i.e., zero-effort impostor attempt), as defined in [ISO/IEC30107-1]. The biometric system SHOULD demonstrate a false non-match rate (FNMR) of less than 5 %. Biometric performance SHALL be tested in accordance with [ISO/IEC19795-1]. The biometric system SHALL be configured with a fixed threshold; it is not feasible to change the threshold for each demographic.
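The per-group FMR requirement can be checked against test counts as sketched below. The function `groups_failing_fmr`, the group labels, and the counts are hypothetical. The sketch compares observed rates only and does not model the statistical confidence that testing under [ISO/IEC19795-1] would need to establish.

```python
from fractions import Fraction
from typing import Dict, List, Tuple

MAX_FMR = Fraction(1, 10_000)  # "one in 10000 or better"


def groups_failing_fmr(results: Dict[str, Tuple[int, int]]) -> List[str]:
    """Return groups whose observed FMR exceeds the limit.

    results maps a group label to (false_matches, impostor_comparisons),
    all measured at the same fixed threshold.
    """
    failing = []
    for group, (false_matches, impostor_comparisons) in results.items():
        if Fraction(false_matches, impostor_comparisons) > MAX_FMR:
            failing.append(group)
    return sorted(failing)


# Made-up counts: group_a is within the limit, group_b is not.
print(groups_failing_fmr({"group_a": (3, 100_000), "group_b": (25, 100_000)}))
# ['group_b']
```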

Presentation Attack Detection

The biometric system SHOULD implement PAD for iris and fingerprint modalities and SHALL implement PAD for facial recognition. Biometric comparison based on voice SHALL NOT be used. Testing the biometric system for deployment SHOULD demonstrate an impostor attack presentation accept rate (IAPAR) of less than 0.07. Presentation attack resistance SHOULD be tested in accordance with Clause 13 of [ISO/IEC30107-3] following security evaluation methodologies in [ISO/IEC19792] or [ISO/IEC19989-1] and [ISO/IEC19989-3]. The PAD decision MAY be made either locally on the claimant’s device or by a central verifier.

Injection Attack Detection

The biometric system SHALL allow no more than five consecutive failed authentication attempts or 10 consecutive failed attempts if PAD is implemented and meets the above requirements. Once that limit has been reached, the biometric authenticator SHALL impose a delay of at least 30 seconds before each subsequent attempt with an overall limit of no more than 50 consecutive failed authentication attempts or 100 if PAD is implemented due to the mitigation of presentation attacks. Once the overall limit is reached, the biometric system SHALL disable biometric authentication and offer another factor (e.g., a different biometric modality or an activation secret if it is not a required factor) if such an alternative method is already available. These limits are upper bounds, and agencies MAY make risk-based decisions to impose lower limits.
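The two-tier limits above can be expressed as a small decision function. The function name and return values are assumptions made for this sketch, and `pad_compliant` is taken to mean that PAD is implemented and meets the stated requirements.

```python
from typing import Tuple

MIN_DELAY_SECONDS = 30


def biometric_attempt_policy(consecutive_failures: int,
                             pad_compliant: bool) -> Tuple[str, int]:
    """Return (action, minimum delay in seconds) for the next biometric attempt."""
    undelayed_limit = 10 if pad_compliant else 5
    overall_limit = 100 if pad_compliant else 50
    if consecutive_failures >= overall_limit:
        # Biometric authentication is disabled; another factor may be offered.
        return ("disabled", 0)
    if consecutive_failures >= undelayed_limit:
        return ("delay", MIN_DELAY_SECONDS)
    return ("allow", 0)
```

Because the limits are upper bounds, a deployment could pass lower values without violating the requirement.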

The verifier SHOULD determine the performance and integrity of the sensor and its associated endpoint. This increases the likelihood of detecting injection attacks due to compromised endpoints, sensor emulators, and similar threats. Acceptable methods for making this determination include:

- Use of a known sensor, as determined by sensor authentication

- First- or third-party testing against biometric performance standards