|

HTGS

v2.0

The Hybrid Task Graph Scheduler

|

|

HTGS

v2.0

The Hybrid Task Graph Scheduler

|

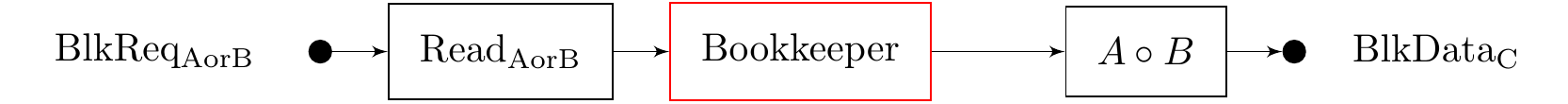

In this tutorial we expand on Tutorial 2A by adding two components to the previous task graph: (1) Reading the matrices from disk and (2) Using memory managers to stay within memory limits.

The source code for the tutorial is found here.

This tutorial uses the same algorithm and representation for the Hadamard product presented in Tutorial2A Implementation.

The primary change is altering the generate matrix task to read from disk and add support for systems with limited memory. This is particularly useful if the full matrix does not fit into system memory. To incorporate this design, we annotate the graph to include memory edges.

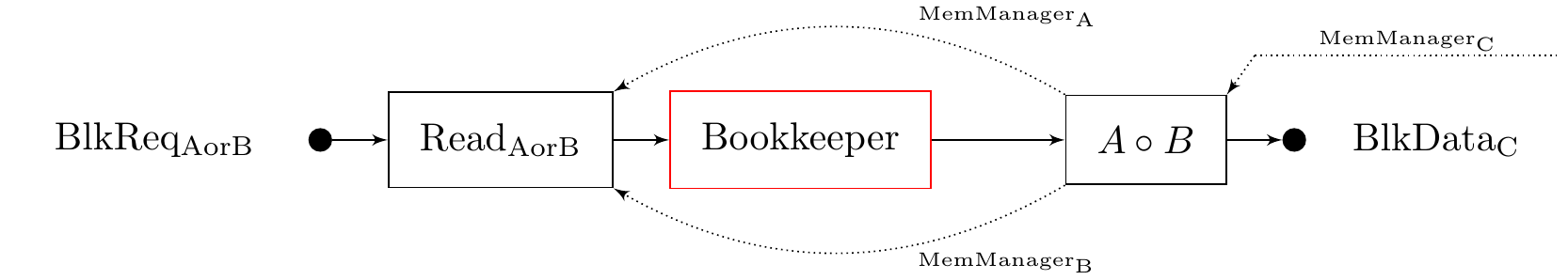

Using the memory edges, we are able to ensure that a fixed number of block-sized matrices flow through the graph, staying within memory limits. The memory is allocated using the htgs::MemoryManager. A htgs::IMemoryReleaseRule is specified to determine when the memory can be released. For the Hadamard product, this rule is trivial because the memory can be released as soon as the data has been used for computation. In more complex examples, such as matrix multiplication (Tutorial 3), data reuse must be carefully analyzed to ensure that the system does not deadlock; i.e. the memory never gets released because its release rule cannot be satisfied based on the traversal of data. The way an algorithm uses its data is important to understand to optimize these release rules, which ideally keeps data close to its processor for as long as possible.

The Hadamard product with memory management creates three memory edges for the two input matrices and the output matrix. In the pictoral representation, we mark that the Hadamard product task releases the memory for matrices A and B. Matrix C has a floating edge indicating that the memory will be released elsewhere. In actuality, the HTGS API only specifies the task that is allocating memory. This will be described in further detail later on in this tutorial.

This tutorial uses the same data as presented in Tutorial 2A Data.

This tutorial uses the same tasks as in Tutorial 2A tasks, except the Hadamard product task will now get and release memory with the htgs::ITask::getMemory and htgs::ITask::releaseMemory functions. Also, we will modify the GenMatrixTask to read the data from disk.

The ReadDiskMatrixTask is responsible for reading matrices A or B depending on the type of MatrixRequestData passed to the read task. The matrix data is loaded from disk such that each block has its own file. If a new block size is specified, then the matrix data will have to be regenerated. There are multiple techniques for reading blocks of data, such as using memory mapped files and optimized file formats for specifying sub-regions without reading the entire file. In the next tutorial, we will show how to use memory mapped files for I/O (Linux/MacOS only), which can be used to improve bandwidth.

The directory structure for the data is stored as follows: "data/tutorial2/4096x4096blksize1024/matrix<A/B>". Prior to reading data from disk, the ReadDiskMatrixTask allocates from main memory to store the data. This memory is acquired from a htgs::MemoryManager for matrix A or matrix B. To get memory from the htgs::MemoryManager, we use the htgs::ITask::getMemory function with the name of the memory edge and the release rule that is associated with the memory. The release rule is used to identify when the memory can be recycled. This allows for the htgs::ITask to express how it plans on using the data and ideally can be setup to only have to load the data once and release once all computation is done for that data. If the data were to be released prior to all computation being done, then a loop would need to be created (such as adding a new htgs::Bookkeeper with the appropriate htgs::IRule edge) to load the data a second time from disk. If a htgs::ITask calls htgs::ITask::getMemory and it had not declared the name of the htgs::MemoryManager for the edge, then the program will report an assertion failed or segmentation fault. To check if a memory edge exists use htgs::ITask::hasMemoryEdge.

The matrices can be created and saved to disk using the tutorial-utils function: checkAndValidateMatrixBlockFiles, which will check to see if all the necessary files are available on disk, and generate them if any are missing.

The HadamardProductTaskWithReleaseMem is responsible for the scalar product of two matrices. These matrices are stored in the MatrixBlockMulData object. Prior to multiplying the matrices, the task allocates memory for the resulting matrix, which will be added to the output of the htgs::TaskGraphConf.

After the Hadamard product task has completed processing matrices A and B together, the task must release the memory so that the htgs::MemoryManager can recycle that memory for the read task. Releasing memory is done using the htgs::MemoryData (or htgs::m_data_t from <htgs/types/Types.hpp>) that was received from htgs::ITask::getMemory, which is passed to the htgs::ITask::releaseMemory function. The htgs::m_data_t contains metadata, which describes where the memory was allocated, allowing the memory to return to its corresponding memory manager. The main stipulation with using this functionality is that all memory managers in a htgs::TaskGraphConf must have unique names (the names of the edges) and that the memory was allocated in the same graph that it is released.

In the ReadDiskMatrixTask we use the matrixTypeToString function to convert the matrix type enum to a string representation. This implementation is found in the enums folder in tutorial-utils.

This tutorial reuses all of the htgs::Bookkeeper and htgs::IRule implementations from Tutorial2A

The htgs::MemoryManager is a htgs::ITask that is created when adding a memory edge to a htgs::ITask. A memory edge is similar to any other edge, except it connects directly to the htgs::ITask rather than through a htgs::TaskManager. This edge acts as a mechism for the task to get htgs::MemoryData from a htgs::MemoryManager. The htgs::MemoryManager maintains a pool of htgs::MemoryData. Each htgs::MemoryData is inserted into the htgs::MemoryManager's output htgs::Connector This output htgs::Connector is shared between the htgs::ITask getting memory and the htgs::MemoryManager. If the htgs::Connector has no data, then the htgs::ITask will wait until the htgs::MemoryManager adds data along this edge.

a htgs::MemoryManager releases/recycles memory based on the htgs::IMemoryReleaseRule that is attached to the htgs::MemoryData. The htgs::IMemoryReleaseRule is added to the htgs::MemoryData using the htgs::ITask::getMemory function. This is used to update the state of the htgs::MemoryData's htgs::IMemoryReleaseRule, which determines when the htgs::MemoryData can be readded into the htgs::MemoryManager's memory pool. As soon as the htgs::MemoryData is released/recycled, it is sent to the output htgs::Connector for the htgs::MemoryManager. Returning htgs::MemoryData to its htgs::MemoryManager is done by passing the htgs::MemoryData that is received from the htgs::ITask::getMemory function to the htgs::ITask::releaseMemory function. The htgs::MemoryData contain meta data that describes the name of the htgs::MemoryManager task and its address. This ensures the htgs::MemoryData returns to the task that had allocated it.

Every memory edge contains a name associated with the edge. The name is used as an identifier for getting and releasing memory. As such, the names of the memory edges must be unique within a htgs::TaskGraphConf.

Any htgs::ITask can be specified as a task that is getting memory from a htgs::MemoryManager using the htgs::TaskGraphConf::addMemoryManagerEdge function, which creates a htgs::MemoryManager and attaches it to the htgs::ITask. The htgs::ITask that is specified must already exist within the htgs::TaskGraphConf prior to using the htgs::TaskGraphConf::addMemoryManagerEdge Allocation, freeing, and the type of memory used within the htgs::MemoryManager is defined with a htgs::IMemoryAllocator. The memory pool size is used to define the number of htgs::MemoryData that is allocated within the memory pool. The htgs::IMemoryAllocator and the memory pool size must take the data traversal behavior of the algorithm to ensure that there is enough memory data being sent from the htgs::MemoryManager and that there is not too much memory being allocated.

There are two types of MemoryManagers: Static and Dynamic. Each MemoryManager type modifies how/when memory is allocated and freed.

All MemoryManager types allocates a pool of htgs::MemoryData during the htgs::ITask::initialize phase. However, the Static MemoryManager will also allocate the memory associated with the htgs::MemoryData using the htgs::IMemoryAllocator::memAlloc function. The Static MemoryManager will release this memory once the destructor for the htgs::MemoryManager is called.

In the next sections, we will go into the details as to how each of these MemoryManager types vary and process MemoryData.

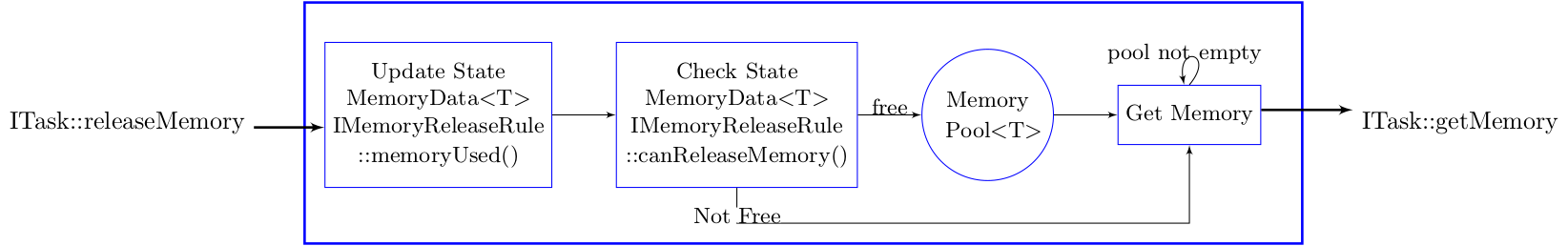

The static MemoryManager is a memory manager that recycles memory when it is released. All of the memory for the memory pool is allocated once when the MemoryManager is initialized and freed when the MemoryManager is shutdown. The diagram below shows how MemoryData is processed when an ITask releases memory. First, when MemoryData enters the MemoryManager, the MemoryData's state is updated using the htgs::IMemoryReleaseRule::memoryUsed function.

Next, the MemoryManager checks the htgs::IMemoryReleaseRule::canReleaseMemory function to determine whether the state indicates the memory can be freed or not. If the state indicates it can be freed, then the MemoryData is inserted into the MemoryPool. If it is not freed, then the MemoryData will not be recycled. Next, the MemoryPool is emptied into the MemoryManager's output Connector so the ITask getting memory can wake up and acquire MemoryData.

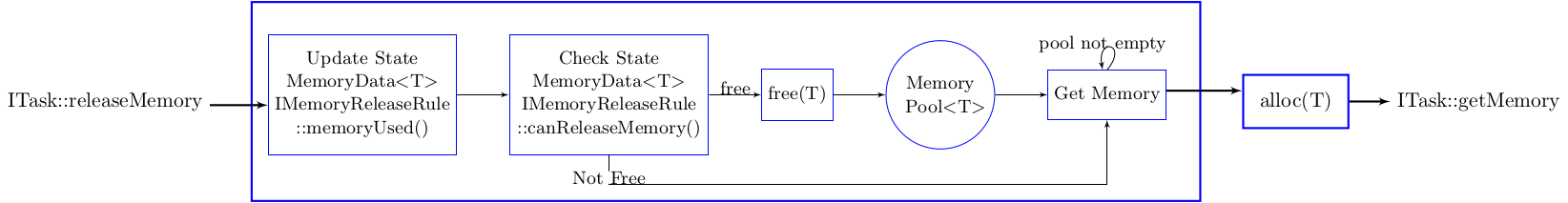

The dynamic MemoryManager contains a similar structure as the static MemoryManager with how it processes MemoryData. The primary difference is when the dynamic MemoryManager allocates and frees memory. In the static MemoryManager all memory is allocated during initialization and freed during shutdown. The dynamic MemoryManager allocates and frees memory on-the-fly. As shown in the diagram below, when MemoryData is sent from the releaseMemoryEdges, if the memory can be released, then the MemoryManager will free the memory that the MemoryData is managing. When the memoryEdges acquires memory from the MemoryManager, the ITask will allocate the memory that the MemoryData is managing prior to returning the MemoryData to the ITask. Using these mechanisms the memory is allocated and freed on demand.

Within the htgs::MemoryData there are two functions that must be defined to use a MemoryManager edge: (1) htgs::IMemoryAllocator, and (2) htgs::IMemoryReleaseRule. The Ihtgs::MemoryAllocator is specified when creating the memory edge, while the IMemoryReleaseRule is added to MemoryData when the memoryEdges gets memory. For the Hadamard product graph, we define the MatrixAllocator and MatrixMemoryRule as the htgs::IMemoryAllocator and htgs::IMemoryReleaseRule, respectively. Below is the code that defines these classes.

The MatrixAllocator creates a one-dimensional array with the specified size using the new operator and releases the memory using the delete[] operator.

The MatrixMemoryRule uses a release count to determine when the memory is ready to be released. When the memoryGetter requests memory, a new instance of the MatrixMemoryRule is defined and bound to the MemoryData that is fetched. This IMemoryReleaseRule is then used by the MemoryManager by calling the htgs::IMemoryReleaseRule::memoryUsed() function, which decrements the release count. The MemoryManager then checks if the memory can be released with the htgs::IMemoryReleaseRule::canReleaseMemory(), which returns true if the release count is zero.

Each matrix block in the HadamardProduct is only used once, so after the specified row, column has been computed, then the memory associated with that computation can be released. This aspect is represented by defining the release count for the MatrixMemoryRule to be one.

Using the implementation from [tutorial 2a](tut2a-create-and-execute-taskgraph), we augment the graph using the HTGS API to specify the memory manager edges.

Belows is the source code implementation for setup, construction of the task TaskGraph, executing the TaskGraph, and processing the output of the htgs::TaskGraphConf.

As before, the traversal order with operating on matrices A and B for the Hadamard product is defined using the htgs::TaskGraphConf::produceData function. If the structure presented below were to change the traversal to first iterate through requests for matrix A, followed by requests for matrix B in an entirely separate loop, then the requests for A would have to be fully processed prior to initiating the first computation on the Hadamard product. Using this traversal pattern would be detrimental to the memory management system as all of matrix A would have to be loaded prior to loading a single block of B, and if the system were to run out of memory prior to loading a block of B, then the graph would deadlock. Special care must be taken to how an algorithm initiates work into a graph that takes into account the behavior a traversal pattern has on memory and the computation.

The main function can be used to post-process data coming from the htgs::TaskGraphConf. If the data was allocated using a memory edge, then the main thread can use the htgs::TaskGraphConf::releaseMemory function to return the memory to its htgs::MemoryManager.

Sample execution:

In this tutorial, we looked at augmenting a graph to use disk and memory management.

I encourage you to play around with parts A and B of the tutorials and see how performance is impacted using larger matrices, different block sizes, and various thread configurations. These can be quickly modified using the command-line parameters: --width, --height, --block-size, --num-readers, and --num-workers.

In the next tutorial, we will be implementing an in-core matrix multiplication using block decomposition and (optionally) the OpenBLAS API. The tutorial will introduce how to implement a slightly more complex dependency and the impacts of data traversal. We also show how to improve the utilization of a compute kernel by using an optimized variant. In addition, we will look into debugging and profiling using the htgs::TaskGraphConf::writeDotToFile.

To demonstrate these aspects, we have split Tutorial 3 into 3 parts.