5 Full Palm (“EightInch” Data)

5.1 Segmentation Timing

All algorithms are run over a small fixed corpus of EightInch images to estimate the total runtime of the evaluation. To be evaluated under SlapSeg III, algorithms must segment the timing corpus, on average, in under 1 500 milliseconds. This maximum reference time is documented in the SlapSeg III test plan, and is subject to change. Times are measured by running a single process on an isolated compute node equipped with an Intel Gold 6254 CPU (submissions received prior to February 2022 were timed with an Intel Xeon E5-4650 CPU).
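The timing requirement above can be illustrated with a minimal sketch. It assumes per-image elapsed times are already available as a list of milliseconds; the function name and the sample values are hypothetical and are not part of the SlapSeg III tooling.

```python
from statistics import mean

# Reference limit quoted in the text; the test plan may change it.
MAX_MEAN_MS = 1500


def meets_timing_limit(elapsed_ms, limit_ms=MAX_MEAN_MS):
    """Return (mean_ms, passed) for a list of per-image elapsed times."""
    if not elapsed_ms:
        raise ValueError("timing corpus produced no measurements")
    average = mean(elapsed_ms)
    # The requirement is a strict "under" comparison on the average.
    return average, average < limit_ms


# Hypothetical measurements, not taken from this report.
sample_ms = [110, 125, 130, 400, 135]
average, passed = meets_timing_limit(sample_ms)
print(f"mean = {average:.1f} ms, within limit: {passed}")
```

Because the limit applies to the average, a single slow image (such as the 400 ms value in the sample) does not by itself disqualify a submission.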

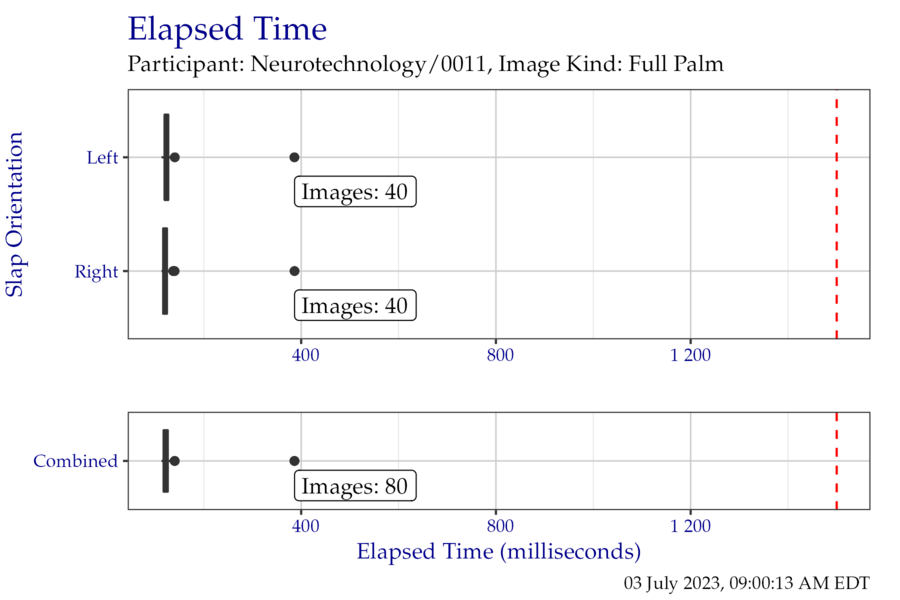

Box plots of segmentation times are separated by slap orientation in Figure 5.1. Tabular representations are enumerated in Table 5.1. Results are reported in milliseconds.

Figure 5.1: Box plots of elapsed time in milliseconds when segmenting the EightInch timing test corpus, separated by slap orientation.

| Statistic | Right | Left | Combined |
|---|---|---|---|
| Minimum | 113 | 113 | 113 |
| 25% | 117 | 120 | 117 |
| Median | 120 | 122 | 121 |
| 75% | 124 | 127 | 126 |
| Maximum | 386 | 386 | 386 |

5.2 Segmentation Centers and Dimensions

5.2.1 Segmentation Centers

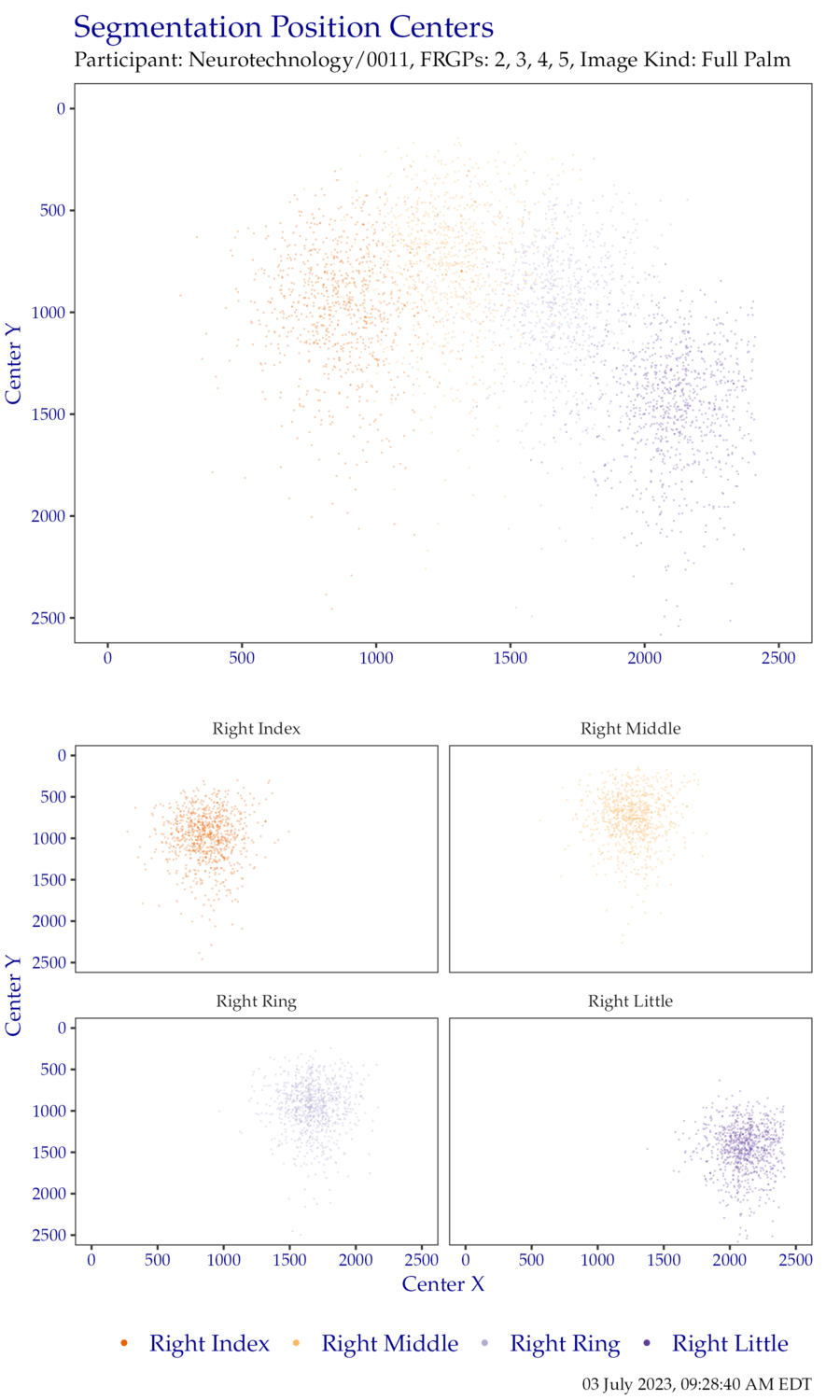

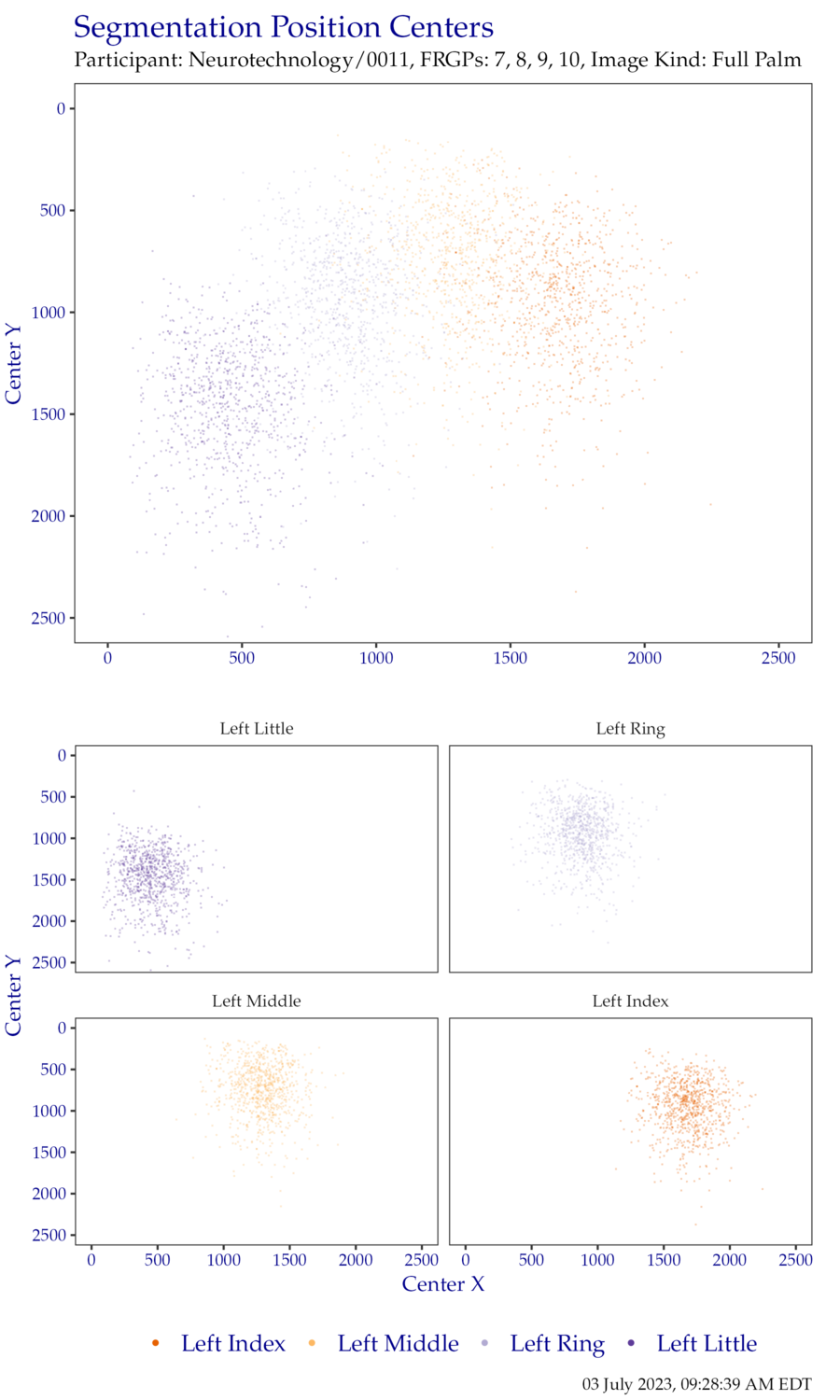

The plots in this section show the distribution of segmentation position centers (x, y) for EightInch data. At the top of each figure is a combined plot for all finger positions of a given slap orientation. The individual finger positions are isolated in faceted plots at the bottom of the figure.

Plots of segmentation centers for the right hand EightInch data are shown in Figure 5.2 and plots of segmentation centers for the left hand are shown in Figure 5.3. Blank lines that may appear in the plots are not rendering artifacts. Rather, they are indicative of image downsampling. Centers have been normalized to 500 pixels per inch.

Points in each plot are drawn with semi-transparent opacity. As a result, points of a particular color appear “darker” where the observed value occurs more frequently and “lighter” where it occurs less frequently.

Figure 5.2: Segmentation centers for right hand EightInch data.

Figure 5.3: Segmentation centers for left hand EightInch data.

5.2.2 Segmentation Dimensions

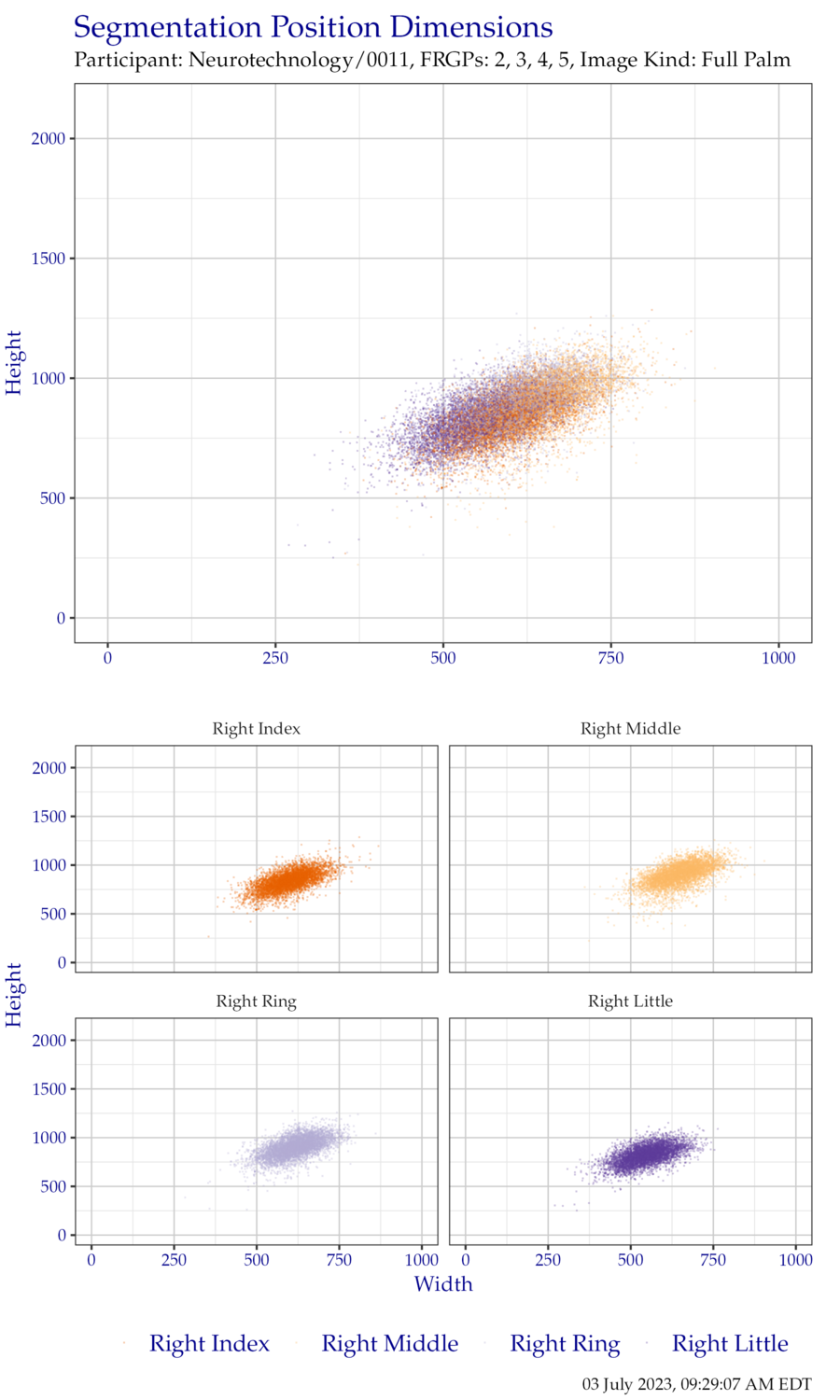

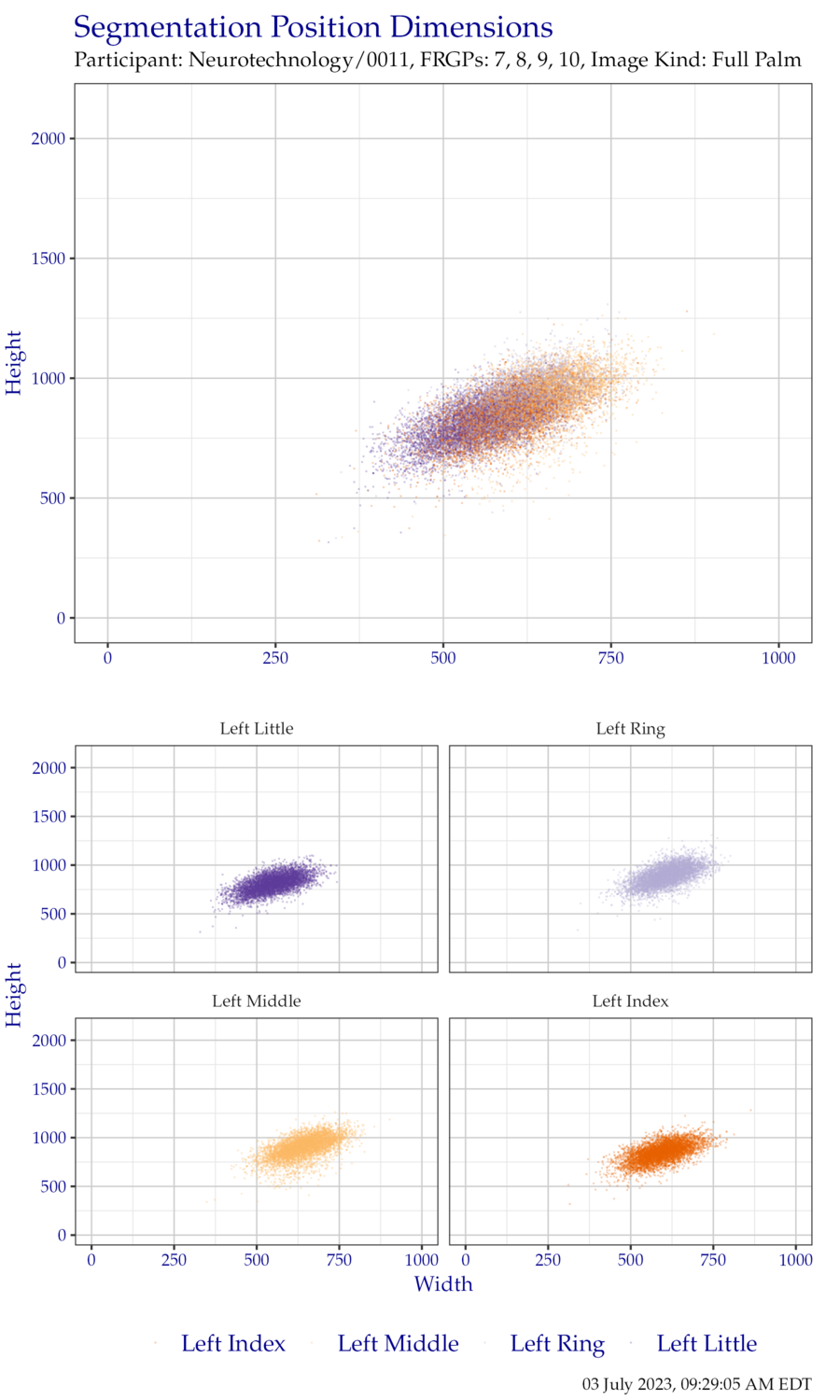

The plots in this section show the distribution of segmentation position widths and heights for EightInch data. At the top of each figure is a combined plot for all finger positions of a given slap orientation. The individual finger positions are isolated in faceted plots at the bottom of the figure.

Plots of segmentation position dimensions for the right hand EightInch data are shown in Figure 5.4 and the left hand in Figure 5.5. Blank lines that may appear in the plots are not rendering artifacts. Rather, they are indicative of image downsampling. Dimensions have been normalized to 500 pixels per inch.

Figure 5.4: Segmentation position dimensions for right hand EightInch data.

Figure 5.5: Segmentation position dimensions for left hand EightInch data.

5.3 Detailed Segmentation Statistics

NOTE: The following segmentation statistics are based on a limited subset (approximately 15%) of the anticipated Full Palm dataset. This analysis will be updated as soon as NIST can obtain the remainder of the dataset.

This section shows detailed results of segmentation of EightInch data. Each value in a table is the percentage of cases in which the item in the left-most column was correctly segmented.

Each table has three columns of percentages. The Standard Scoring column shows the percentage of correctly-segmented positions based on the scoring metrics defined in the SlapSeg III scoring document. The Ignoring Bottom Y column shows how the percentage would change if the threshold for the bottom Y coordinate of the segmentation position was ignored. Similarly, the Ignoring Bottom X and Y column shows how the percentage would change if only the top, left, and right sides of the segmentation position were considered. These two supplemental columns are included because it has traditionally been difficult to determine the exact location of the distal interphalangeal joint.
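The relationship between the three columns can be sketched as follows. This is only an illustration under stated assumptions: it assumes a segmentation position is described by four corner coordinates and that correctness means each compared coordinate lies within a single pixel tolerance of ground truth. The `Position` type, the `is_correct` function, and the tolerance value are hypothetical; the actual metrics and thresholds are those defined in the SlapSeg III scoring document.

```python
from dataclasses import dataclass

MODES = ("standard", "ignore_bottom_y", "ignore_bottom_xy")


@dataclass(frozen=True)
class Position:
    """Hypothetical segmentation position: four (x, y) corners in pixels."""
    top_left: tuple
    top_right: tuple
    bottom_left: tuple
    bottom_right: tuple


def is_correct(found, truth, tolerance, mode="standard"):
    """Compare corners under one of the three column rules.

    `tolerance` is a placeholder, not the threshold used by SlapSeg III.
    """
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode}")

    def close(a, b):
        return abs(a - b) <= tolerance

    # Top corners are always checked in both coordinates.
    for corner in ("top_left", "top_right"):
        (fx, fy), (tx, ty) = getattr(found, corner), getattr(truth, corner)
        if not (close(fx, tx) and close(fy, ty)):
            return False
    if mode == "ignore_bottom_xy":
        return True
    # Bottom corners: X is checked unless ignored; Y only under standard scoring.
    for corner in ("bottom_left", "bottom_right"):
        (fx, fy), (tx, ty) = getattr(found, corner), getattr(truth, corner)
        if not close(fx, tx):
            return False
        if mode == "standard" and not close(fy, ty):
            return False
    return True


truth = Position((100, 100), (300, 100), (100, 400), (300, 400))
too_long = Position((102, 98), (301, 101), (101, 450), (299, 452))
print([is_correct(too_long, truth, 5, mode) for mode in MODES])
```

In the example, the returned position extends too far below the joint, so it fails standard scoring but passes once the bottom Y coordinate is ignored. This is why the supplemental columns can only be equal to or higher than the Standard Scoring column.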

Table 5.2 shows how successfully Neurotechnology+0011 segmented fingers for each subject in the test corpus. Table 5.3 shows success for specific finger positions over the entire test corpus. Similarly, Table 5.4 shows success for segmenting the same finger position from both hands.

The remainder of the tables show success per subject when considering combinations of subsets of the fingers on each slap image. Table 5.5 shows success for combinations of all fingers, Table 5.6 for just the index and middle fingers, and Table 5.7 for all except the little finger.
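The "Any", "At Least Two", and "All" style of reporting used in these combination tables can be sketched as below. The sketch assumes one list of per-finger pass/fail results per slap image, ordered index, middle, ring, little. The function name, the data layout, and the sample outcomes are hypothetical, and the unit of aggregation used in the report may differ.

```python
def percent_with_at_least(per_image_correct, minimum):
    """Percentage of images with at least `minimum` correctly segmented fingers.

    per_image_correct: one list of booleans per slap image, one per finger.
    """
    if not per_image_correct:
        raise ValueError("no images to score")
    hits = sum(1 for fingers in per_image_correct if sum(fingers) >= minimum)
    return 100.0 * hits / len(per_image_correct)


# Hypothetical outcomes for four slaps (index, middle, ring, little).
outcomes = [
    [True, True, True, True],
    [True, True, True, False],
    [True, False, False, False],
    [False, False, False, False],
]

all_four = percent_with_at_least(outcomes, 4)
any_finger = percent_with_at_least(outcomes, 1)

# Restricting to a subset of fingers only requires slicing each row.
index_middle = [fingers[:2] for fingers in outcomes]
both_index_middle = percent_with_at_least(index_middle, 2)

no_little = [fingers[:3] for fingers in outcomes]
all_three = percent_with_at_least(no_little, 3)

print(all_four, any_finger, both_index_middle, all_three)
```

Dropping the little finger can only raise or preserve the "all fingers correct" percentage, since every image that passes on four fingers also passes on three.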

| Number of Fingers | Standard Scoring | Ignoring Bottom Y | Ignoring Bottom X and Y |
|---|---|---|---|
| 1 | 100.0 | 100.0 | 100.0 |
| 2 | 100.0 | 100.0 | 100.0 |
| 3 | 100.0 | 100.0 | 100.0 |
| 4 | 99.8 | 99.8 | 99.9 |
| 5 | 98.9 | 98.9 | 99.0 |
| 6 | 98.4 | 98.4 | 98.4 |
| 7 | 97.2 | 97.5 | 97.6 |
| 8 | 89.5 | 89.7 | 90.8 |

| Finger | Standard Scoring | Ignoring Bottom Y | Ignoring Bottom X and Y |
|---|---|---|---|
| Right | | | |
| Index | 97.6 | 97.7 | 97.7 |
| Middle | 97.7 | 97.7 | 98.0 |
| Ring | 98.4 | 98.5 | 98.7 |
| Little | 98.2 | 98.2 | 98.6 |
| Left | | | |
| Index | 98.6 | 98.6 | 98.7 |
| Middle | 97.9 | 97.9 | 97.9 |
| Ring | 97.9 | 97.9 | 98.3 |
| Little | 97.5 | 97.6 | 97.6 |

| Fingers | Standard Scoring | Ignoring Bottom Y | Ignoring Bottom X and Y |
|---|---|---|---|
| Index | | | |
| Either | 100.0 | 100.0 | 100.0 |
| Both | 96.2 | 96.3 | 96.4 |
| Middle | | | |
| Either | 99.7 | 99.7 | 99.7 |
| Both | 96.0 | 96.0 | 96.3 |
| Ring | | | |
| Either | 99.5 | 99.7 | 99.8 |
| Both | 96.8 | 96.8 | 97.2 |
| Little | | | |
| Either | 100.0 | 100.0 | 100.0 |
| Both | 95.6 | 95.7 | 96.2 |

| Fingers | Standard Scoring | Ignoring Bottom Y | Ignoring Bottom X and Y |
|---|---|---|---|
| Right | | | |
| Any | 99.4 | 99.4 | 99.5 |
| At Least Two | 99.2 | 99.2 | 99.2 |
| At Least Three | 98.9 | 98.9 | 99.0 |
| All Four | 94.4 | 94.6 | 95.4 |
| Left | | | |
| Any | 99.7 | 99.7 | 99.7 |
| At Least Two | 99.4 | 99.4 | 99.4 |
| At Least Three | 98.7 | 98.7 | 98.7 |
| All Four | 94.1 | 94.3 | 94.7 |

| Fingers | Standard Scoring | Ignoring Bottom Y | Ignoring Bottom X and Y |
|---|---|---|---|
| Right | | | |
| Either Index or Middle | 99.2 | 99.2 | 99.2 |
| Both Index and Middle | 96.1 | 96.2 | 96.6 |
| Left | | | |
| Either Index or Middle | 99.5 | 99.5 | 99.5 |
| Both Index and Middle | 97.0 | 97.0 | 97.1 |

| Fingers | Standard Scoring | Ignoring Bottom Y | Ignoring Bottom X and Y |
|---|---|---|---|
| Right | | | |
| Any | 99.4 | 99.4 | 99.4 |
| At Least Two | 99.0 | 99.0 | 99.0 |
| All Three | 95.3 | 95.5 | 96.1 |
| Left | | | |
| Any | 99.5 | 99.5 | 99.5 |
| At Least Two | 98.9 | 98.9 | 98.9 |
| All Three | 96.1 | 96.1 | 96.6 |

5.4 Handling Troublesome Images

5.4.1 Capture Failures

Segmentation algorithms may refuse to process an image. This may happen for a technical reason (e.g., the algorithm cannot parse the image data), or for a practical reason (e.g., the hand in the image is placed incorrectly). These failure scenarios are the result of capturing improper image data. In these types of scenarios, it is important to examine the cause of the failure. With many live scan capture setups, segmentation is performed immediately after capture. If an algorithm can detect that it won’t be able to segment an image due to a technical or practical issue, it can alert the operator to perform a recapture before the subject leaves.

The SlapSeg III API encourages algorithms to identify these failure reasons by specifying pre-defined deficiencies in the image. Whenever possible, algorithms should attempt segmentation even if an image deficiency is encountered. Note that SlapSeg III guarantees well-formed image data, so a failure to parse does not indicate a problem with the data provided.

Neurotechnology+0011 did not report any capture failures.

5.4.1.1 Recovery

When encountering a segmentation failure, SlapSeg III algorithms are encouraged to provide a best-effort segmentation when possible. In some cases, that best-effort segmentation may be correct, which reduces the number of images that need to be manually adjudicated by an operator.

Neurotechnology+0011 did not attempt any recovery segmentations.

5.4.2 Segmentation Failures

Even if an algorithm accepts an image for processing, it can still fail to process one or more fingers from the image, regardless of whether the algorithm requested a recapture and provided a best-effort segmentation.

The SlapSeg III API allows algorithms to communicate reasons for failure to process these fingers. In some cases, the distal phalanx in question might not be present in the image due to amputation or being placed outside the platen’s capture area. It is imperative that the segmentation algorithm correctly report this as failing to segment the correct friction ridge generalized position without disrupting the sequence of valid positions present in the image. This can help prompt an operator to recapture or record additional information about the subject.

In SlapSeg III, a number of images are missing fingers or otherwise have fingers that cannot be segmented.

Reasons for segmentation failures reported by Neurotechnology+0011 are enumerated in Table 5.8.

| Failure Reason | Fingers |
|---|---|
| Finger Not Found | 274 |
| Finger Found, but Can’t Segment | 0 |
| Vendor Defined | 0 |

5.4.3 Identifying Missing Fingers

A small portion of the images in the SlapSeg III test corpus are missing fingers. Table 5.9 shows how successful Neurotechnology+0011 was in correctly determining if a finger was missing. The Missed row shows when a segmentation position was returned for a missing finger. All possible failure reasons are enumerated, but the Other Failure rows are not considered Correctly Identified because the algorithm specified failure for a reason other than the finger not being found.

| Result | Percentage |
|---|---|
| Missed | 0.0 |
| Correctly Identified | 100.0 |
| Other Failure: Finger Found, but Can’t Segment | 0.0 |
| Other Failure: Vendor Defined | 0.0 |
| Other Failure: Segmentation Not Attempted | 0.0 |
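The row categories of the missing-finger table can be reproduced with a short tally such as the one below. The result codes (`"segmented"`, `"finger_not_found"`, and so on) are hypothetical labels chosen for illustration; they are not the identifiers used by the SlapSeg III API, and the sample results are not taken from this report.

```python
from collections import Counter

# Hypothetical result codes mapped to the row labels used in the table.
LABELS = {
    "segmented": "Missed",
    "finger_not_found": "Correctly Identified",
    "cannot_segment": "Other Failure: Finger Found, but Can't Segment",
    "vendor_defined": "Other Failure: Vendor Defined",
    "not_attempted": "Other Failure: Segmentation Not Attempted",
}


def missing_finger_breakdown(results):
    """Percentage per category for fingers known to be missing.

    results: one result code per missing finger in the ground truth.
    """
    if not results:
        raise ValueError("no missing fingers to evaluate")
    counts = Counter(LABELS[code] for code in results)
    total = len(results)
    # Report every category, including those that never occurred.
    return {label: 100.0 * counts[label] / total for label in LABELS.values()}


# Hypothetical results for four missing fingers.
breakdown = missing_finger_breakdown(
    ["finger_not_found", "finger_not_found", "finger_not_found", "segmented"]
)
for label, percentage in breakdown.items():
    print(f"{label}: {percentage:.1f}")
```

Only the `"finger_not_found"` code counts as Correctly Identified; any other failure code is reported in its own row, even though no segmentation position was returned.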

5.4.4 Sequence Error

Sequence error occurs when a fingerprint is segmented from an image but assigned an incorrect finger position (e.g., segmenting a right middle finger but labeling it a right index finger). Table 5.10 shows cases in which a segmentation position was returned that matched a ground truth segmentation position for a different finger in the same image.

| Hand | Standard Scoring | Ignoring Bottom Y | Ignoring Bottom X and Y |
|---|---|---|---|
| Left | 0.57 | 0.57 | 0.57 |
| Right | 0.34 | 0.34 | 0.34 |
| Combined | 0.46 | 0.46 | 0.46 |
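A sequence error check of the kind described in this section can be sketched as follows. The sketch assumes the positions for one image are stored in dictionaries keyed by finger label and that a caller-supplied predicate decides whether a returned position matches a ground truth position. The function name, the simplified center-point representation, and the tolerance are all hypothetical.

```python
def has_sequence_error(found, truth, matches):
    """Return True if any returned position matches a different finger's truth.

    found, truth: dicts mapping finger label -> position for one image.
    matches: callable(found_position, truth_position) -> bool.
    """
    for finger, position in found.items():
        for other_finger, other_truth in truth.items():
            if other_finger != finger and matches(position, other_truth):
                return True
    return False


def near(a, b, tolerance=5):
    """Hypothetical matcher: compare (x, y) centers within a pixel tolerance."""
    return abs(a[0] - b[0]) <= tolerance and abs(a[1] - b[1]) <= tolerance


truth = {"index": (100, 50), "middle": (200, 40)}
swapped = {"index": (201, 41), "middle": (99, 52)}

print(has_sequence_error(swapped, truth, near))
print(has_sequence_error(truth, truth, near))
```

Passing the matcher as a parameter lets the same check run under each scoring rule, which corresponds to the three columns of the table.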

5.5 Determining Orientation

An optional portion of the SlapSeg III API asked participants to determine the hand orientation of an image. Participants were provided the kind (e.g., full palm) and needed to determine whether the image was of the left or right hand.

Overall Full Palm accuracy: 99.2%

| | Left | Right |
|---|---|---|
| Left | 99.3 | 0.7 |
| Right | 0.9 | 99.1 |
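An orientation table of this shape can be derived from paired results, as in the sketch below. It assumes that rows are the actual hand, columns are the hand reported by the algorithm, and each row is normalized to 100%. The source does not state which axis is which, so this layout is an assumption, as are the function name and the sample data.

```python
from collections import Counter

HANDS = ("Left", "Right")


def orientation_summary(pairs):
    """Return (row-normalized percentage matrix, overall accuracy in percent).

    pairs: iterable of (actual, reported) hand labels.
    """
    pairs = list(pairs)
    if not pairs:
        raise ValueError("no orientation results to summarize")
    counts = Counter(pairs)
    matrix = {}
    for actual in HANDS:
        row_total = sum(counts[(actual, reported)] for reported in HANDS)
        matrix[actual] = {
            reported: (100.0 * counts[(actual, reported)] / row_total
                       if row_total else 0.0)
            for reported in HANDS
        }
    correct = sum(counts[(hand, hand)] for hand in HANDS)
    return matrix, 100.0 * correct / len(pairs)


# Hypothetical results: four left-hand and four right-hand images.
sample = [("Left", "Left")] * 3 + [("Left", "Right")] + [("Right", "Right")] * 4
matrix, accuracy = orientation_summary(sample)
print(matrix, accuracy)
```

Overall accuracy is computed over all images rather than by averaging the two diagonal cells, so it weights each hand by how many images of that hand are in the corpus.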