Short Guide to JARVIS-Leaderboard

Introduction

JARVIS-Leaderboard is an open-source, community-driven platform that facilitates benchmarking and enhances reproducibility in materials design. Users can set up benchmarks with custom tasks and contribute datasets, code, and metadata. The platform covers five main categories: Artificial Intelligence (AI), Electronic Structure (ES), Force-fields (FF), Quantum Computation (QC), and Experiments (EXP).

External Resources

Terminology

Categories

- AI: Input data types include atomic structures, images, spectra, and text.

- ES: Compares ES approaches, software packages, and pseudopotentials across many materials and properties, with results checked against experiments.

- FF: Compares multiple force-field approaches for predicting material properties.

- QC: Benchmarks Hamiltonian simulations using various quantum algorithms and circuits.

- EXP: Utilizes inter-laboratory approaches to establish benchmarks.

Sub-categories

- SinglePropertyPrediction

- SinglePropertyClass

- ImageClass

- textClass

- MLFF (machine learning force-field)

- Spectra

- EigenSolver

Benchmarks

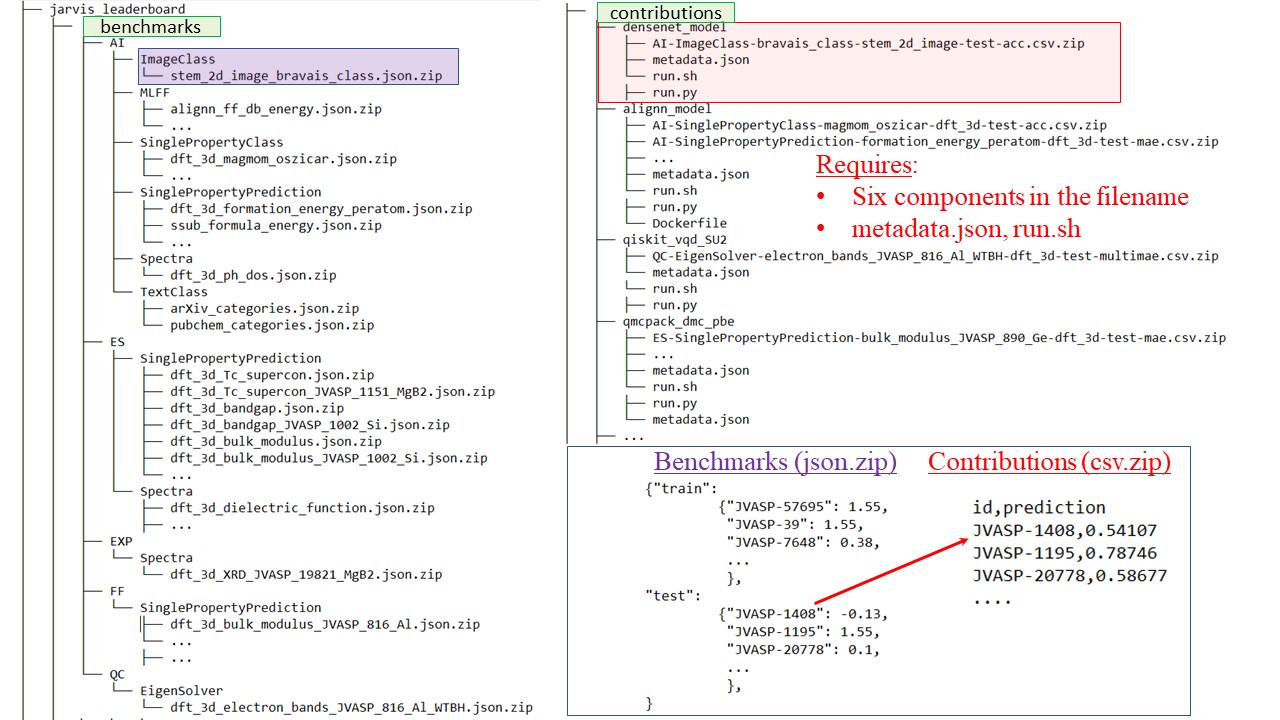

Ground truth data used to calculate metrics for specific tasks (e.g., a json.zip file).
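To make the benchmark format concrete, here is a minimal Python sketch that opens a `json.zip` and returns the parsed JSON. It assumes the archive holds exactly one `.json` file whose top-level keys are the data splits (`train`, `val`, `test`), each mapping a sample id to a target value; `load_benchmark` and the toy data are hypothetical, not part of the jarvis_leaderboard package.

```python
import io
import json
import zipfile


def load_benchmark(zip_source) -> dict:
    """Parse the single JSON file stored in a benchmark json.zip.

    zip_source may be a filesystem path or a binary file-like object.
    Assumption: the archive contains exactly one member, a JSON document.
    """
    with zipfile.ZipFile(zip_source) as zf:
        (member,) = zf.namelist()  # raises ValueError unless there is exactly one member
        return json.loads(zf.read(member))


# Toy archive built in memory (hypothetical ids and values).
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr(
        "toy.json",
        json.dumps({"train": {"a": 1.0}, "val": {"b": 2.0}, "test": {"c": 3.0}}),
    )

bench = load_benchmark(buf)
print(sorted(bench))  # ['test', 'train', 'val']
print(bench["test"])  # {'c': 3.0}
```

Passing a path such as a file from the `jarvis_leaderboard/benchmarks` folder works the same way, as long as the one-file assumption holds.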

Methods

Precise specifications for evaluation against a benchmark (e.g., DFT with VASP-GGA-PAW-PBE in the ES category).

Contributions

Individual results submitted as csv.zip files, one for each benchmark and method. Each contribution specifies:

- Category (e.g., AI)

- Sub-category (e.g., SinglePropertyPrediction)

- Property (e.g., formation energy)

- Dataset (e.g., dft_3d)

- Data split (e.g., test)

- Metric (e.g., mae)
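These fields are also encoded in the contribution's file name, joined by hyphens, as in `ES-SinglePropertyPrediction-bandgap_JVASP_1002_Si-dft_3d-test-mae.csv.zip`. A minimal Python sketch that splits such a name into its parts, assuming the field order seen in that example (category, sub-category, property, dataset, data split, metric) and assuming no field itself contains a hyphen; `ContributionName` and `parse_contribution_name` are hypothetical helpers, not part of the jarvis_leaderboard package.

```python
from typing import NamedTuple


class ContributionName(NamedTuple):
    """Fields encoded in a contribution file name (assumed layout)."""

    category: str       # e.g. "ES"
    subcategory: str    # e.g. "SinglePropertyPrediction"
    property_name: str  # e.g. "bandgap_JVASP_1002_Si"
    dataset: str        # e.g. "dft_3d"
    split: str          # e.g. "test"
    metric: str         # e.g. "mae"


def parse_contribution_name(filename: str) -> ContributionName:
    """Split '<category>-<subcategory>-<property>-<dataset>-<split>-<metric>.csv.zip'."""
    suffix = ".csv.zip"
    if not filename.endswith(suffix):
        raise ValueError(f"expected a name ending in {suffix!r}: {filename!r}")
    parts = filename[: -len(suffix)].split("-")
    if len(parts) != 6:
        raise ValueError(f"expected 6 hyphen-separated fields, got {len(parts)}")
    return ContributionName(*parts)


name = parse_contribution_name(
    "ES-SinglePropertyPrediction-bandgap_JVASP_1002_Si-dft_3d-test-mae.csv.zip"
)
print(name.category, name.property_name, name.metric)  # ES bandgap_JVASP_1002_Si mae
```

The strict six-field check makes a mistyped name fail immediately instead of silently producing a shifted field.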

Directory and File Structure

How to Contribute

Adding a Contribution (csv.zip)

- Fork the JARVIS-Leaderboard repository on GitHub.

- Clone your forked repository and change into it:

  ```
  git clone https://github.com/USERNAME/jarvis_leaderboard
  cd jarvis_leaderboard
  ```

- Create a Python environment:

  ```
  conda create --name leaderboard python=3.8
  source activate leaderboard
  ```

- Install the package:

  ```
  python setup.py develop
  ```

- Add a contribution by copying an existing csv.zip file into a new folder:

  ```
  cd jarvis_leaderboard/contributions/
  mkdir vasp_pbe_teamX
  cd vasp_pbe_teamX
  cp ../vasp_optb88vdw/ES-SinglePropertyPrediction-bandgap_JVASP_1002_Si-dft_3d-test-mae.csv.zip .
  vi ES-SinglePropertyPrediction-bandgap_JVASP_1002_Si-dft_3d-test-mae.csv.zip
  ```

- Modify the prediction value in the csv file, then add `metadata.json` and `run.sh` files to the same folder.

- Rebuild the leaderboard and preview it locally:

  ```
  cd ../../../
  python jarvis_leaderboard/rebuild.py
  mkdocs serve
  ```

- Commit and push your changes:

  ```
  git add jarvis_leaderboard/contributions/vasp_pbe_teamX
  git commit -m 'Adding my PBE Si result.'
  git push
  ```

- Create a pull request on GitHub.
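As a scripted alternative to editing the archive by hand, a minimal Python sketch using only the standard library can overwrite one prediction in place. It assumes the `csv.zip` holds exactly one CSV file with `id` and `prediction` columns; check the file you copied before relying on that. `set_prediction` and the demo values are hypothetical, not real results.

```python
import csv
import io
import os
import tempfile
import zipfile


def set_prediction(zip_path: str, entry_id: str, new_value: float) -> None:
    """Overwrite the 'prediction' of one 'id' row inside a single-file csv.zip.

    Assumption: the archive holds exactly one CSV with 'id' and 'prediction' columns.
    """
    with zipfile.ZipFile(zip_path) as zf:
        (member,) = zf.namelist()  # raises ValueError unless there is exactly one member
        text = zf.read(member).decode("utf-8")

    reader = csv.DictReader(io.StringIO(text))
    rows = list(reader)
    matches = [row for row in rows if row["id"] == entry_id]
    if not matches:
        raise KeyError(entry_id)
    for row in matches:  # these dicts are shared with `rows`
        row["prediction"] = str(new_value)

    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)

    # Rewrite the archive in place, keeping the original member name.
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr(member, out.getvalue())


# Demo on a throwaway archive (hypothetical values, not real results).
demo_zip = os.path.join(tempfile.mkdtemp(), "demo.csv.zip")
with zipfile.ZipFile(demo_zip, "w") as zf:
    zf.writestr("demo.csv", "id,prediction\nJVASP-1002,1.0\n")

set_prediction(demo_zip, "JVASP-1002", 1.1)
with zipfile.ZipFile(demo_zip) as zf:
    updated = zf.read("demo.csv").decode("utf-8")
print(updated)
```

Raising `KeyError` for an unknown id avoids writing back an unchanged archive that looks like a successful edit.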

Adding a New Benchmark (json.zip)

- Create a `json.zip` file in the `jarvis_leaderboard/benchmarks` folder.

- Add a `.json` file with `train`, `val`, and `test` keys.

- Add a corresponding `.md` file in the `jarvis_leaderboard/docs` folder.

- Follow the instructions for "Adding model benchmarks to existing dataset".
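A minimal Python sketch of writing the splits into a `json.zip` with the standard library. It assumes each split maps a sample id to its target value and that the inner `.json` file takes the archive's name without `.zip`; `write_benchmark`, `my_task`, and the toy values are hypothetical. The split-overlap check is an extra safeguard added here against train/test leakage, not a documented requirement.

```python
import json
import os
import tempfile
import zipfile


def write_benchmark(zip_path: str, train: dict, val: dict, test: dict) -> str:
    """Write {'train', 'val', 'test'} into a one-file json.zip; return the member name.

    Assumption: the inner JSON file is named after the archive minus '.zip'
    (e.g. 'my_task.json.zip' -> 'my_task.json').
    """
    if not zip_path.endswith(".json.zip"):
        raise ValueError("expected a path ending in '.json.zip'")
    # Safeguard (not a documented rule): an id must not appear in two splits.
    overlap = (
        (train.keys() & val.keys())
        | (train.keys() & test.keys())
        | (val.keys() & test.keys())
    )
    if overlap:
        raise ValueError(f"ids appear in more than one split: {sorted(overlap)}")
    member = os.path.basename(zip_path)[: -len(".zip")]
    payload = {"train": train, "val": val, "test": test}
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr(member, json.dumps(payload))
    return member


# Hypothetical toy splits keyed by sample id.
path = os.path.join(tempfile.mkdtemp(), "my_task.json.zip")
member = write_benchmark(path, {"id-1": 0.5, "id-2": 1.5}, {"id-3": 2.0}, {"id-4": 3.0})
print(member)  # my_task.json
```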

Acronyms

- MAE: Mean Absolute Error

- ACC: Classification accuracy

- MULTIMAE: MAE sum of multiple entries, Euclidean distance
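A minimal Python sketch of the two single-number metrics, assuming ground truth and predictions are both dictionaries keyed by sample id. MULTIMAE is left out because its exact definition is not spelled out here. The function names and toy values are hypothetical, not the leaderboard's own implementation.

```python
from collections.abc import Hashable
from typing import Dict


def _check_ids(truth: dict, pred: dict) -> None:
    """Require a prediction for every ground-truth id."""
    if not truth:
        raise ValueError("ground truth is empty")
    missing = truth.keys() - pred.keys()
    if missing:
        raise KeyError(f"missing predictions for: {sorted(missing)}")


def mae(truth: Dict[str, float], pred: Dict[str, float]) -> float:
    """Mean absolute error: average of |prediction - truth| over ground-truth ids."""
    _check_ids(truth, pred)
    return sum(abs(pred[k] - truth[k]) for k in truth) / len(truth)


def acc(truth: Dict[str, Hashable], pred: Dict[str, Hashable]) -> float:
    """Classification accuracy: fraction of ids whose predicted label equals the true label."""
    _check_ids(truth, pred)
    return sum(pred[k] == truth[k] for k in truth) / len(truth)


# Hypothetical toy values.
print(mae({"a": 1.0, "b": 2.0, "c": 4.0}, {"a": 1.5, "b": 2.0, "c": 3.0}))  # 0.5
print(acc({"x": "metal", "y": "insulator"}, {"x": "metal", "y": "metal"}))  # 0.5
```

Both functions iterate over the ground-truth ids, so extra predictions are ignored while a missing one raises an error instead of skewing the average.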

Help

For questions or concerns, raise an issue on GitHub or email Kamal Choudhary.

Citation

JARVIS-Leaderboard: a large scale benchmark of materials design methods

License

This template is distributed under the NIST license. See the LICENSE file for more information.