|

| | ICudaTask (int *cudaIds, size_t numGpus, bool autoEnablePeerAccess=true) |

| | Creates an ICudaTask. More...

|

| |

|

virtual void | initializeCudaGPU () |

| | Virtual function that is called when the ICudaTask has been initialized and is bound to a CUDA GPU.

|

| |

| virtual void | executeTask (std::shared_ptr< T > data)=0 |

| | Executes the ICudaTask on some data. More...

|

| |

|

virtual void | shutdownCuda () |

| | Virtual function that is called when the ICudaTask is shutting down.

|

| |

| virtual std::string | getName () override |

| | Virtual function that gets the name of this ICudaTask. More...

|

| |

| std::string | getDotFillColor () override |

| | Gets the color for filling the shape for graphviz dot. More...

|

| |

| virtual ITask< T, U > * | copy ()=0 |

| | Pure virtual function that copies this ICudaTask. More...

|

| |

|

virtual void | debug () override |

| | Virtual function that can be used to provide debug information.

|

| |

| int | getCudaId () |

| | Gets the Cuda Id for this cudaTask. More...

|

| |

| bool | requiresCopy (size_t pipelineId) |

| | Checks if the requested pipelineId requires GPU-to-GPU copy. More...

|

| |

| template<class V > |

| bool | requiresCopy (std::shared_ptr< MemoryData< V >> data) |

| | Checks if the requested pipelineId requires GPU-to-GPU copy. More...

|

| |

| bool | hasPeerToPeerCopy (size_t pipelineId) |

| | Checks if the requested pipelineId allows peer to peer GPU copy. More...

|

| |

| template<class V > |

| bool | autoCopy (V *destination, std::shared_ptr< MemoryData< V >> data, long numElems) |

| | Will automatically copy from one GPU to another (if it is required). More...

|

| |

| void | initialize () override final |

| | Initializes the CudaTask to be bound to a particular GPU. More...

|

| |

| void | shutdown () override final |

| | Shutsdown the ICudaTask. More...

|

| |

| const cudaStream_t & | getStream () const |

| | Gets the CUDA stream for this CUDA task. More...

|

| |

| int * | getCudaIds () |

| | Gets the cudaIds specified during ICudaTask construction. More...

|

| |

| size_t | getNumGPUs () |

| | Gets the number of GPUs specified during ICudaTask construction. More...

|

| |

| void | syncStream () |

| | Synchronizes the Cuda stream associated with this task. More...

|

| |

|

| ITask () |

| | Creates an ITask with number of threads equal to 1.

|

| |

| | ITask (size_t numThreads) |

| | Constructs an ITask with a specified number of threads. More...

|

| |

| | ITask (size_t numThreads, bool isStartTask, bool poll, size_t microTimeoutTime) |

| | Constructs an ITask with a specified number of threads as well as additional scheduling options. More...

|

| |

| virtual bool | canTerminate (std::shared_ptr< AnyConnector > inputConnector) override |

| | Virtual function that is called when an ITask is checking if it can be terminated. More...

|

| |

| virtual void | executeTaskFinal () override |

| | Virtual function that is called just before the task has shutdown. More...

|

| |

| virtual std::string | getDotLabelName () override |

| | Virtual function to get the label name used for dot graph viz. More...

|

| |

| virtual std::string | getDotShapeColor () override |

| | Gets the color of the shape for graphviz dot. More...

|

| |

| virtual std::string | getDotShape () override |

| | Gets the shape for graphviz dot. More...

|

| |

| virtual std::string | getDotCustomProfile () override |

| | Adds the string text to the profiling of this task in the graphviz dot visualization. More...

|

| |

| virtual void | printProfile () override |

| | Prints the profile data to std::out. More...

|

| |

| virtual size_t | getNumGraphsSpawned () |

| | Gets the number of graphs spawned by this ITask. More...

|

| |

|

virtual std::string | genDotProducerEdgeToTask (std::map< std::shared_ptr< AnyConnector >, AnyITask *> &inputConnectorDotMap, int dotFlags) override |

| |

|

virtual std::string | genDotConsumerEdgeFromConnector (std::shared_ptr< AnyConnector > connector, int flags) override |

| |

|

virtual std::string | genDotProducerEdgeFromConnector (std::shared_ptr< AnyConnector > connector, int flags) |

| |

| ITask< T, U > * | copyITask (bool deep) override |

| | Copies the ITask (including a copy of all memory edges) More...

|

| |

| void | addResult (std::shared_ptr< U > result) |

| | Adds results to the output list to be sent to the next connected ITask in a TaskGraph. More...

|

| |

| void | addResult (U *result) |

| | Adds results to the output list to be sent to the next connected ITask in a TaskGraph. More...

|

| |

| void | initialize (size_t pipelineId, size_t numPipeline, TaskManager< T, U > *ownerTask) |

| | Function that is called when an ITask is being initialized by it's owner thread. More...

|

| |

| template<class V > |

| m_data_t< V > | getMemory (std::string name, IMemoryReleaseRule *releaseRule) |

| | Retrieves memory from a memory edge. More...

|

| |

| template<class V > |

| m_data_t< V > | getDynamicMemory (std::string name, IMemoryReleaseRule *releaseRule, size_t numElems) |

| | Retrieves memory from a memory edge. More...

|

| |

| template<class V > |

| void | releaseMemory (m_data_t< V > memory) |

| | Releases memory onto a memory edge, which is transferred by the graph communicator. More...

|

| |

|

void | resetProfile () |

| | Resets profile data.

|

| |

| size_t | getThreadID () |

| | Gets the thread ID associated with this task. More...

|

| |

| unsigned long long int | getTaskComputeTime () const |

| | Gets the task's compute time. More...

|

| |

| std::string | inTypeName () override final |

| | Gets the demangled input type name of the connector. More...

|

| |

| std::string | outTypeName () override final |

| | Gets the demangled output type name of the connector. More...

|

| |

| std::string | getAddress () override final |

| | Gets the address from the owner task, which is the address of the task graph. More...

|

| |

| void | setTaskManager (TaskManager< T, U > *ownerTask) |

| | Sets the owner task manager for this ITask. More...

|

| |

| TaskManager< T, U > * | getOwnerTaskManager () |

| | Gets the owner task manager for this ITask. More...

|

| |

| virtual void | gatherProfileData (std::map< AnyTaskManager *, TaskManagerProfile *> *taskManagerProfiles) |

| | Gathers profile data. More...

|

| |

|

| AnyITask () |

| | Creates an ITask with number of threads equal to 1.

|

| |

| | AnyITask (size_t numThreads) |

| | Constructs an ITask with a specified number of threads. More...

|

| |

| | AnyITask (size_t numThreads, bool isStartTask, bool poll, size_t microTimeoutTime) |

| | Constructs an ITask with a specified number of threads as well as additional scheduling options. More...

|

| |

|

virtual | ~AnyITask () |

| | Destructor.

|

| |

| virtual std::string | genDot (int flags, std::string dotId, std::shared_ptr< htgs::AnyConnector > input, std::shared_ptr< htgs::AnyConnector > output) |

| | Virtual function that generates the input/output and per-task dot notation. More...

|

| |

|

virtual std::string | getConsumerDotIds () |

| |

|

virtual std::string | getProducerDotIds () |

| |

| virtual std::string | genDot (int flags, std::string dotId) |

| | Virtual function that adds additional dot attributes to this node. More...

|

| |

| virtual std::string | genCustomDot (ProfileUtils *profileUtils, int colorFlag) |

| | Virtual function to generate customized dot file. More...

|

| |

| virtual std::string | debugDotNode () |

| | Provides debug output for a node in the dot graph. More...

|

| |

| virtual void | profile () |

| | Virtual function that is called to provide profile output for the ITask. More...

|

| |

| virtual std::string | profileStr () |

| | Virtual function that is called after executionTask is called. More...

|

| |

| void | initialize (size_t pipelineId, size_t numPipeline) |

| | Virtual function that is called when an ITask is being initialized by it's owner thread. More...

|

| |

| void | setPipelineId (size_t pipelineId) |

| | Sets the pipeline Id for this ITask. More...

|

| |

| size_t | getPipelineId () |

| | Gets the pipeline ID. More...

|

| |

| void | setNumPipelines (size_t numPipelines) |

| | Sets the number of pipelines that this ITask belongs too. More...

|

| |

| size_t | getNumPipelines () const |

| | Sets the task graph communicator. More...

|

| |

| size_t | getNumThreads () const |

| | Gets the number of threads associated with this ITask. More...

|

| |

| bool | isStartTask () const |

| | Gets whether this ITask is a starting task. More...

|

| |

| bool | isPoll () const |

| | Gets whether this ITask is polling for data or not. More...

|

| |

| size_t | getMicroTimeoutTime () const |

| | Gets the timeout time for polling. More...

|

| |

| void | copyMemoryEdges (AnyITask *iTaskCopy) |

| | Copies the memory edges from this AnyITask to another AnyITask. More...

|

| |

| std::string | genDot (int flags, std::shared_ptr< AnyConnector > input, std::shared_ptr< AnyConnector > output) |

| | Creates a dot notation representation for this task. More...

|

| |

| void | profileITask () |

| | Provides profile output for the ITask,. More...

|

| |

| std::string | getDotId () |

| | Gets the id used for dot nodes. More...

|

| |

| std::string | getNameWithPipelineId () |

| | Gets the name of the ITask with it's pipeline ID. More...

|

| |

| const std::shared_ptr< ConnectorMap > & | getMemoryEdges () const |

| | Gets the memory edges for the task. More...

|

| |

| const std::shared_ptr< ConnectorMap > & | getReleaseMemoryEdges () const |

| | Gets the memory edges for releasing memory for the memory manager, used to shutdown the memory manager. More...

|

| |

| bool | hasMemoryEdge (std::string name) |

| | Checks whether this ITask contains a memory edge for a specified name. More...

|

| |

| void | attachMemoryEdge (std::string name, std::shared_ptr< AnyConnector > getMemoryConnector, std::shared_ptr< AnyConnector > releaseMemoryConnector, MMType type) |

| | Attaches a memory edge to this ITask to get memory. More...

|

| |

| unsigned long long int | getMemoryWaitTime () const |

| | Gets the amount of time the task was waiting for memory. More...

|

| |

| void | incMemoryWaitTime (unsigned long long int val) |

| | Increments memory wait time. More...

|

| |

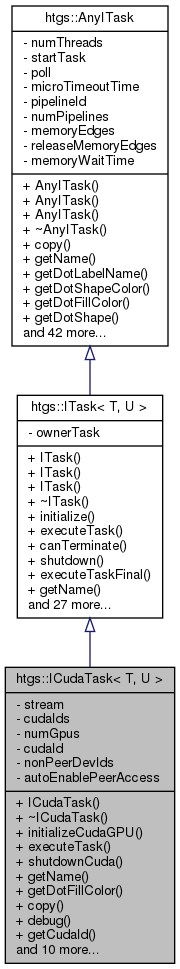

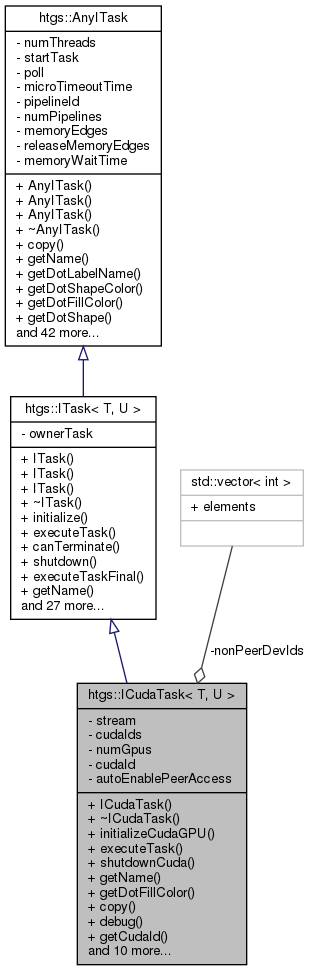

template<class T, class U>

class htgs::ICudaTask< T, U >

An ICudaTask is used to attach a task to an NVIDIA Cuda GPU.

The task that inherits from this class will automatically be attached to the GPU when launched by the TaskGraphRunTime from within a TaskGraphConf.

An ICudaTask may be bound to one or more GPUs if the task is added into an ExecutionPipeline. The number of CUContexts must match the number of pipelines specified for the ExecutionPipeline.

Mechanisms to handle automatic data motion for GPU-to-GPU memories is provided to simplify peer to peer device memory copies. In order to use peer to peer copy, both GPUs must reside on the same I/O Hub (IOH) and be the same GPU model.

It may be necessary to copy data that resides on two different GPUs. This can be achieved by using the autoCopy(V destination, std::shared_ptr<MemoryData<V>> data, long numElems) function. This occurs when there are ghost regions between data domains. If peer to peer copying is allowed between the multiple GPUs, then the autocopy function is not needed. See below for an example of using autocopy.

At this time it is necessary for the ICudaTask to copy data from CPU memories to GPU memories.

Functions are available for getting the CUDA stream, context, pipeline ID, and number of pipelines.

- Note

- It is ideal to configure a separate copy ICudaTask to copy data asynchronously from a computation ICudaTask for CPU->GPU or GPU->CPU copies.

Example implementation:

#define SIZE 100

public:

~SimpleCudaTask() {}

{

cudaMalloc(&localMemory, sizeof(double) * SIZE);

}

virtual void executeTask(std::shared_ptr<MatrixData> data) {

...

double * memory;

if (this->

autoCopy(localMemory, data->getCudaMemoryData(), SIZE))

{

memory = localMemory;

}

else

{

memory = data->getMemoryData()->get();

}

...

}

virtual void debug() { ... }

virtual std::string

getName() {

return "SimpleCudaTask"; }

private:

double *localMemory;

};

Example usage:

SimpleCudaTask *cudaTask = new SimpleCudaTask(...);

- Template Parameters

-

Public Member Functions inherited from htgs::ITask< T, U >

Public Member Functions inherited from htgs::ITask< T, U > Public Member Functions inherited from htgs::AnyITask

Public Member Functions inherited from htgs::AnyITask