Digital Identity Risk Management

This section is normative.

This section describes the methodology for assessing digital identity risks associated with online services, including residual risks to users of the online service, the service provider organization, and its mission and business partners. It offers guidance on selecting usable, privacy-enhancing security and anti-fraud controls that mitigate those risks. Additionally, it emphasizes the importance of continuously evaluating the performance of the selected controls.

The Digital Identity Risk Management (DIRM) process focuses on the identification and management of risks according to two dimensions: (1) risks that result from operating the online service that might be addressed by an identity system and (2) additional risks that are introduced as a result of implementing the identity system.

The first dimension of risk informs initial assurance level selections and seeks to identify risks associated with a compromise of the online service that might be addressed by an identity system. For example:

- Identity proofing: Negative impacts that could reasonably be expected if an imposter were to gain access to a service or receive a credential using the identity of a legitimate user (e.g., an attacker successfully impersonates someone)

- Authentication: Negative impacts that could reasonably be expected if a false claimant accessed an account that was not rightfully theirs (e.g., an attacker who compromises or steals an authenticator), often referred to as an account takeover attack

- Federation: Negative impacts that could reasonably be expected if the wrong subject successfully accessed an online service, system, or data (e.g., compromising or replaying an assertion)

If there are risks associated with a compromise of the online service that could be addressed by an identity system, an initial assurance level is selected and the second dimension of risk is then considered.

The second dimension of risk seeks to identify the risks posed by the identity system itself and informs the tailoring process. Tailoring provides a process to modify an initially assessed assurance level, implement compensating or supplemental controls, or modify selected controls based on ongoing detailed risk assessments in areas such as privacy, usability, and resilience to real-world threats.

Examples of the types of impact that can result from risks introduced by the identity system itself include:

- Identity proofing: Impacts of not successfully identity proofing and enrolling a legitimate subject due to barriers faced by the subject throughout the process of identity proofing, falling victim to a breach of information that was collected and retained to support identity proofing processes, or the initial IAL failing to completely address specific threats, threat actors, and fraud

- Authentication: Impacts of failing to authenticate the correct subject due to barriers faced by the subject in presenting their authenticator, including barriers due to usability issues, the initial AAL failing to completely address targeted account takeover models, or specific authenticator types failing to mitigate anticipated attacks

- Federation: Impacts of releasing real subscriber attributes to the wrong online service or system or releasing incorrect or fake attributes to a legitimate RP

The outcomes of the DIRM process depend on the role that an entity plays within the digital identity model.

- For relying parties, the intent of this process is to determine the assurance levels and any tailoring required to protect online services and the applications, transactions, and systems that comprise or are impacted by those services. This directly contributes to the selection, development, and procurement of CSP services. Federal RPs SHALL implement the DIRM process for all online services.

- For credential service providers and identity providers, the intent of this process is to design service offerings that meet the requirements of the defined assurance levels, continuously guard against compromises to the identity system, and meet the needs of RPs. Whenever a service offering deviates from normative guidance, those deviations SHALL be clearly communicated to the RPs that utilize the service.

CSPs and IdPs are expected to offer services at assurance levels that are requested by the RPs they serve. However, CSPs and IdPs that choose to deviate from this guideline or augment their services are expected to conduct an abbreviated digital identity risk assessment and document their modifications in a Digital Identity Acceptance Statement that is provided to RPs (see Sec. 3.4.4).

This process augments the risk management processes required by [FISMA]. The results of the DIRM impact assessment for the online service may be different from the FISMA impact level for the underlying application or system. Identity process failures can result in different levels of impact for various user groups. For example, the overall assessed FISMA impact level for a payment system may result in a ‘FISMA Moderate’ impact category because sensitive financial data is being processed by the system. However, for individuals who are making guest payments where no persistent account is established, the authentication and proofing impact levels may be lower. Agency authorizing officials SHOULD require documentation that demonstrates adherence to the DIRM process as a part of the authority to operate (ATO) for the underlying information system that supports an online service. Agency authorizing officials SHOULD require documentation from CSPs that demonstrates adherence to the DIRM process as part of procurement or ATO processes for integration with CSPs.

These guidelines use the term FISMA impact level; other NIST RMF publications also use the term system impact level to refer to such impact categorization.

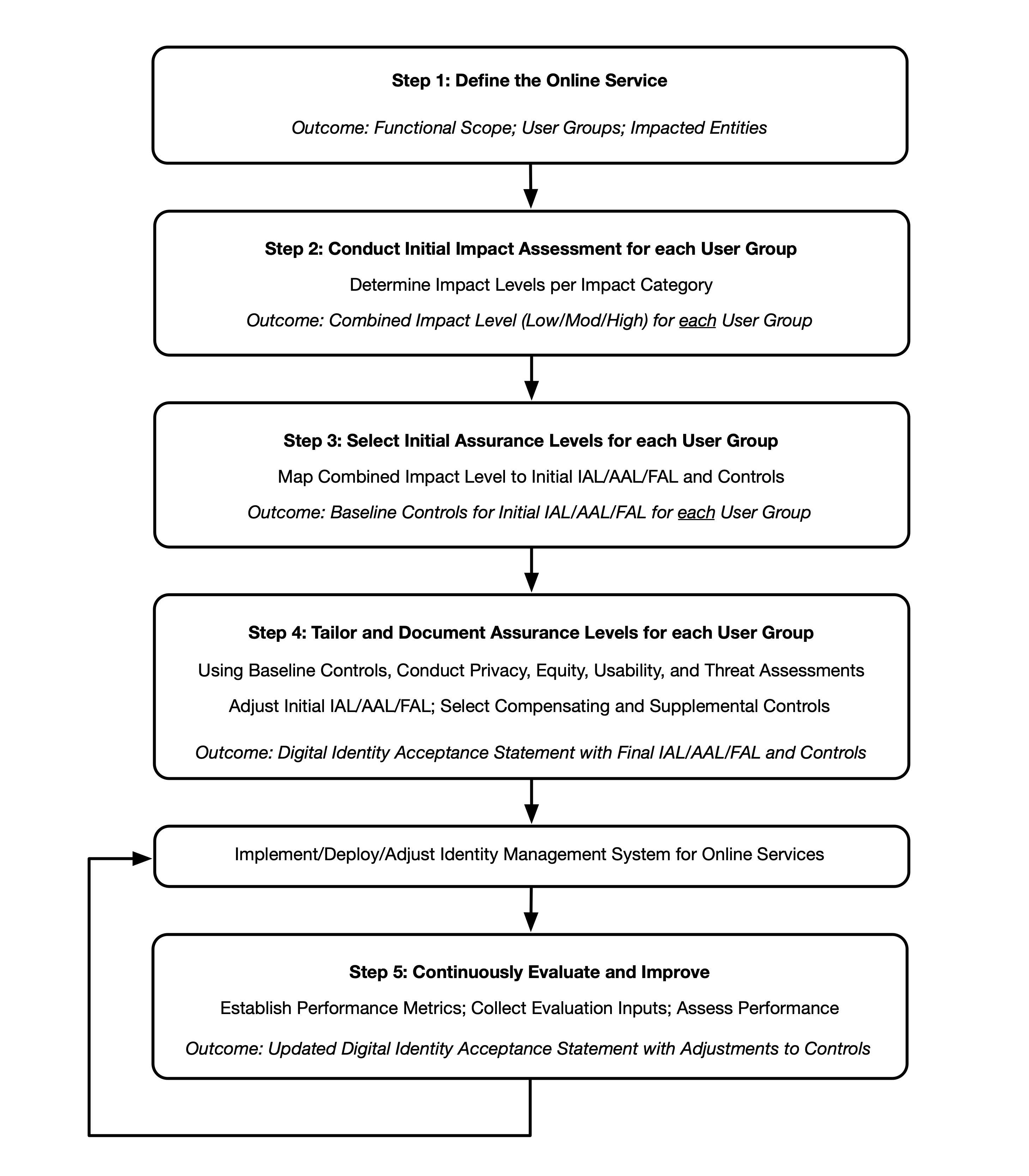

There are 5 steps in the DIRM process:

- Define the online service: As a starting point, the organization documents a description of the online service in terms of its functional scope, the user groups it is intended to serve, the types of online transactions available to each user group, and the underlying data that the online service processes through its interfaces. If the online service is one element of a broader business process, its role is documented, as are the uses of any data collected and processed by the online service. Additionally, an organization needs to determine the entities that will be impacted by the online service and the broader business process of which it is a part. The outcome is a description of the online service, its users, and the entities that may be impacted by its functionality.

- Conduct initial impact assessment: In this step, organizations assess the impacts of a compromise of the online service that might be addressed by an identity system (i.e., identity proofing, authentication, or federation). Each function of the online service is assessed against a defined set of harms and impact categories. Each user group of the online service is considered separately based on the transactions available to that user group (i.e., the permissions that the group is granted relative to the data and functions of the online service). The outcome of this step is a documented set of impact categories and associated impact levels that are determined by considering the transactions available to each user group of the online service.

- Select initial assurance levels: In this step, the impact categories and impact levels are evaluated to determine the initial assurance levels to protect the online service from unauthorized access and fraud. Using the assurance levels, the organization identifies the baseline controls for the IAL, AAL, and FAL for each user group based on the requirements in companion volumes [SP800-63A], [SP800-63B], and [SP800-63C], respectively. The outcome of this step is an identified initial IAL, AAL, and FAL, as applicable, for each user group.

- Tailor and document assurance level determinations: In this step, detailed assessments are conducted or leveraged to determine the potential impact of the initially selected assurance levels and their associated controls on privacy, customer experience, and resistance to the current threat environment. Tailoring may result in a modification of the initially assessed assurance level, the identification of compensating or supplemental controls (see Sec. 3.4.2 and Sec. 3.4.3), or both. All assessments and final decisions are documented and justified. The outcome is a Digital Identity Acceptance Statement (see Sec. 3.4.4) with a defined and implementable set of assurance levels and a final set of controls for the online service.

- Continuously evaluate and improve: In this step, information on the performance of the identity management approach is gathered and evaluated. This evaluation considers a diverse set of factors, including business impacts, effects on fraud rates, and impacts on user communities. This information is crucial for determining whether the selected assurance levels and controls meet mission, business, security, and — where applicable — program integrity needs. It also helps monitor for unintended harms that impact privacy and access. Opportunities for improvement should also be considered by closely monitoring the evolving threat landscape and investigating new technologies and methodologies that can counter those threats, improve customer experience, or enhance privacy. The outcomes of this step are performance metrics, documented and transparent processes for evaluation and redress, and ongoing improvements to the identity management approach.

Fig. 6. High-level diagram of the DIRM Process Flow

Figure 6 illustrates the major actions and outcomes for each step of the DIRM process flow. While presented as a stepwise approach, there can be many points in the process that require divergence from the sequential order, including the need for iterative cycles between initial task execution and revisiting tasks. For example, the introduction of new regulations or requirements while an assessment is in progress may require organizations to revisit a step in the process. Additionally, new functionalities, changes in data usage, and changes to the threat environment may require an organization to revisit steps in the DIRM process at any point, including potentially modifying the assurance levels and/or the related controls of the online service.

Organizations SHOULD adapt and modify this overall approach to meet organizational processes, governance, and enterprise risk management practices. At a minimum, organizations SHALL execute and document each step and complete and document the normative mandates and outcomes of each step, regardless of any organization-specific processes or tools used in the overall DIRM process. Additionally, organizations SHOULD consult with a representative sample of the online service’s user population to inform the design and performance evaluation of the identity management system.

Define the Online Service

The purpose of defining the online service is to understand its functionality and establish a common understanding of its context, which will inform subsequent steps of the DIRM process. The role of the online service is contextualized as part of the broader business environment and associated processes, resulting in a documented description of the scope of the online service, user groups and their expectations, data processed, impacted entities, and other pertinent details.

RPs SHALL develop a description of the online service that includes, at minimum:

- The organizational mission and business objectives supported by the online service

- The mission and business partner dependencies associated with the online service

- Legal, regulatory, and contractual requirements, including privacy obligations that apply to the online service

- The functionality of the online service and the data that it is expected to process

- User groups that need to have access to the online service as well as the types of online transactions and access privileges available to each user group

- The set of entities (to include users of the online service, organizations, and populations served) that will be impacted by the online service and the broader business process of which it is a part

- The results of any preexisting DIRM assessments (as an input) and the current state of any preexisting identity technologies (i.e., proofing, authentication, or federation)

- The estimated availability of the types of identity evidence required for identity proofing across all user groups served

It is imperative to consider unexpected and undesirable impacts, as well as the scale of impact, on different entities that result from an unauthorized user gaining access to the online service due to a failure of the digital identity system. For example, if an attacker obtained unauthorized access to an online service that controls a power plant, the actions taken by the bad actor could have devastating environmental impacts on the local populations that live near the facility and cause power outages for the localities served by the plant.

It is important to differentiate between user groups and impacted entities, as described in this document. The online service will allow access to a set of users who may be partitioned into a few user groups based on the kind of functionality that is offered to that user group. For example, an online income tax filing and review service may have the following user groups: (1) citizens who need to check the status of their personal tax returns, (2) tax preparers who file tax returns on behalf of their clients, and (3) system administrators who assign privileges to different groups of users or create new user groups as needed. Impacted entities include all those who could face negative consequences in the event of a digital identity system failure. This will likely include members of the user groups but may also include those who never directly use the system.

Accordingly, the scope of impact assessments SHALL include individuals who use the online application as well as the organization itself. Additionally, organizations SHALL identify other entities (e.g., mission partners, communities, and those identified in [SP800-30]) that need to be specifically included based on mission and business needs. At a minimum, organizations SHALL document all impacted entities (both internal and external to the organization) when conducting their impact assessments.

The output of this step is a documented description of the online service, including a list of the user groups and other entities that are impacted by the functionality provided by the online service. This information will serve as a basis and establish the context for effectively applying the impact assessments detailed in the following sections.
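
As an illustration only, the documented description could be captured in a structured record. The following Python sketch mirrors the minimum elements listed above and reports which ones have not been documented yet. The class and field names (`OnlineServiceDescription`, `UserGroup`, `missing_elements`, and so on) are hypothetical and are not defined by these guidelines.

```python
from dataclasses import dataclass, field, fields


@dataclass
class UserGroup:
    """A user group and the transactions available to it (hypothetical model)."""
    name: str
    transactions: list[str] = field(default_factory=list)


@dataclass
class OnlineServiceDescription:
    """Hypothetical record mirroring the minimum description elements."""
    mission_objectives: list[str] = field(default_factory=list)
    partner_dependencies: list[str] = field(default_factory=list)
    legal_requirements: list[str] = field(default_factory=list)
    functionality_and_data: list[str] = field(default_factory=list)
    user_groups: list[UserGroup] = field(default_factory=list)
    impacted_entities: list[str] = field(default_factory=list)
    prior_assessments: list[str] = field(default_factory=list)
    evidence_availability: list[str] = field(default_factory=list)

    def missing_elements(self) -> list[str]:
        """Return the names of elements that are still empty.

        Assumption of this sketch: an empty list means "not yet documented",
        so a finding such as "no prior assessments exist" is recorded
        explicitly as an entry rather than left empty.
        """
        return [f.name for f in fields(self) if not getattr(self, f.name)]
```

A partially completed description of the income tax example below might then be checked with `OnlineServiceDescription(user_groups=[UserGroup("tax preparers", ["file returns for clients"])]).missing_elements()`, which lists the seven elements that still need to be documented.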

Conduct Initial Impact Assessment

This step of the DIRM process addresses the first dimension of risk by identifying the risks to the online service that might be addressed by an identity system.

The purpose of the initial impact assessment is to identify the potential adverse impacts of failures in identity proofing, authentication, and federation that are specific to an online service, yielding an initial set of assurance levels. RPs SHOULD consider historical data and results from user focus groups when performing this step. The impact assessment SHALL include:

- Identifying a set of impact categories and the potential harms for each impact category,

- Identifying the levels of impact, and

- Assessing the level of impact for each user group.

The level of impact for each user group identified in Sec. 3.1 SHALL be considered separately based on the transactions available to that user group. This gives organizations maximum flexibility in selecting and implementing assurance levels that are appropriate for each user group. While impacts to user groups, the organization, and other entities are primary considerations for impact assessments, organizations SHOULD also consider scale (e.g., number of persons impacted by transactions).

The output of this assessment is a defined impact level (i.e., Low, Moderate, or High) for each user group. This serves as the primary input to the initial assurance level selection.

Identify Impact Categories and Potential Harms

While an online service has a discrete set of users and user groups that authenticate to access the functionality provided by the service, there may be a much larger set of entities that are impacted when imposters and attackers obtain unauthorized access to the online service due to errors in identity proofing, authentication, or federation. In Sec. 3.1, such impacted entities are identified and documented as a part of defining the online service.

In this step, organizations identify the categories of impact that are applicable to the impacted entities for a given online service. At a minimum, organizations SHALL include the following impact categories in their impact assessments:

- Degradation of mission delivery

- Damage to trust, standing, or reputation

- Unauthorized access to information

- Financial loss or liability

- Loss of life or danger to human safety, human health, or environmental health

Organizations SHOULD include additional impact categories, as appropriate, based on their mission and business objectives. Each impact category SHALL be documented and consistently applied when implementing the DIRM process across different online services offered by the organization.

Harms refer to any adverse effects that would be experienced by an impacted entity. They provide a means to effectively understand the impact categories and how they may apply to specific entities impacted by the online service. For each impact category, organizations SHALL consider potential harms for each of the impacted entities identified in Sec. 3.1.

Examples of harms associated with each category include:

- Degradation of mission delivery:

  - Harms to individuals may include the inability to access government services or benefits for which they are eligible.

  - Harms to the organization (including the organization offering the online service and organizations supported by the online service) may include the inability to perform current mission/business functions in a sufficiently timely manner, with sufficient confidence and/or correctness, or within planned resource constraints, or the inability or limited ability to perform mission/business functions in the future.

- Damage to trust, standing, or reputation:

  - Harms to individuals may include damage to image or reputation as a result of impersonation.

  - Harms to the organization may include damage to reputation resulting in the fostering of a negative image, the deterioration of existing trust relationships, or the inability to forge potential new trust relationships in the future.

- Unauthorized access to information:

  - Harms to individuals may include the breach of personal information or other sensitive information that may result in secondary harms, such as financial loss, loss of life, physical or psychological injury, impersonation, identity theft, or persistent inconvenience.

  - Harms to the organization may include the exfiltration, deletion, degradation, or exposure of intellectual property or the unauthorized disclosure of other information assets, such as classified materials or controlled unclassified information (CUI).

- Financial loss or liability:

  - Harms to individuals may include debts incurred or assets lost as a result of fraud or other harm, damage to or loss of credit, actual or potential loss of employment or sources of income, loss of housing, and/or other financial loss.

  - Harms to the organization may include costs related to fraud or other criminal activity, loss of assets, devaluation, or loss of business.

- Loss of life or danger to human safety, human health, or environmental health:

  - Harms to individuals may include death or damage to physical well-being that may result in secondary harms, such as damage to mental or emotional well-being, or impact on environmental health that could result in the uninhabitability of the local environment and require intervention to address potential or actual damage.

  - Harms to the organization may include damage to or loss of the organization’s workforce or damage to the surrounding environment and the subsequent impact of unsafe conditions.

The outcome of this activity is a list of impact categories and harms that will be used to assess adverse consequences for impacted entities.

Identify Potential Impact Levels

In this step, the organization assesses the potential level of impact caused by an unauthorized user gaining access to the online service for each of the impact categories selected by the organization (from Sec. 3.2.1). Impact levels are assigned using one of the following potential impact values:

- Low: Expected to have a limited adverse effect

- Moderate: Expected to have a serious adverse effect

- High: Expected to have a severe or catastrophic adverse effect

Each user group can have a distinct set of privileges and functionalities through the online service. Hence, it is necessary to consider the adverse consequences for each set of impacted entities in each of the impact categories, as a result of an intruder obtaining unauthorized access as a member of a particular user group. To provide a more objective basis for impact level assignments, organizations SHOULD develop thresholds and examples for the impact levels for each impact category. Where this is done, particularly with specifically defined quantifiable values, these thresholds SHALL be documented and used consistently in the DIRM assessments across an organization to allow for a common understanding of risks.
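
As a minimal sketch of what a documented, quantifiable threshold could look like, the following Python example maps an estimated financial loss to an impact level. The dollar values, the names `FINANCIAL_LOSS_THRESHOLDS` and `financial_impact_level`, and the choice of the financial loss category are all illustrative assumptions; these guidelines do not prescribe any specific figures.

```python
from bisect import bisect_right

# Hypothetical, organization-defined thresholds in dollars. A loss below the
# first value is Low, a loss from the first value up to (but not including)
# the second is Moderate, and anything at or above the second is High.
FINANCIAL_LOSS_THRESHOLDS = [10_000, 1_000_000]
LEVELS = ["Low", "Moderate", "High"]


def financial_impact_level(estimated_loss: float) -> str:
    """Map an estimated loss to an impact level using the thresholds above."""
    if estimated_loss < 0:
        raise ValueError("estimated_loss must be non-negative")
    if estimated_loss == 0:
        # No harm in this category, so no impact level applies.
        return "None"
    return LEVELS[bisect_right(FINANCIAL_LOSS_THRESHOLDS, estimated_loss)]
```

Keeping the thresholds in one documented table, rather than scattered across individual assessments, is one way to support consistent use across an organization.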

Examples of potential impacts in each of the impact categories include:

- Degradation of mission delivery:

  - Low: Expected to result in limited mission capability degradation such that the organization is still able to perform its primary functions but with some reduced effectiveness

  - Moderate: Expected to result in serious mission capability degradation such that the organization is still able to perform its primary functions but with significantly reduced effectiveness

  - High: Expected to result in severe or catastrophic mission capability degradation or loss over a duration such that the organization is unable to perform one or more of its primary functions

- Damage to trust, standing, or reputation:

  - Low: Expected to result in limited, short-term inconvenience, distress, or embarrassment to any party

  - Moderate: Expected to result in serious short-term or limited long-term inconvenience, distress, or damage to the standing or reputation of any party

  - High: Expected to result in severe or serious long-term inconvenience, distress, or damage to the standing or reputation of any party or many individuals

- Unauthorized access to information:

  - Low: Expected to have a limited adverse effect on organizational operations, organizational assets, or individuals, as defined in [FIPS199]

  - Moderate: Expected to have a serious adverse effect on organizational operations, organizational assets, or individuals, as defined in [FIPS199]

  - High: Expected to have a severe or catastrophic adverse effect on organizational operations, organizational assets, or individuals, as defined in [FIPS199]

- Financial loss or liability:

  - Low: Expected to result in limited financial loss or liability to any party

  - Moderate: Expected to result in a serious financial loss or liability to any party

  - High: Expected to result in severe or catastrophic financial loss or liability to any party

- Loss of life or danger to human safety, human health, or environmental health:

  - Low: Expected to result in a limited impact on human safety or health that resolves on its own or with a minor amount of medical attention or a limited impact on environmental health that requires at most minor intervention to prevent further damage or reverse existing damage

  - Moderate: Expected to result in a serious impact on human safety or health that requires significant medical attention, serious impact on environmental health that results in a period of uninhabitability and requires significant intervention to prevent further damage or reverse existing damage, or the compounding impacts of multiple low-impact events

  - High: Expected to result in a severe or catastrophic impact on human safety or health, such as severe injury, trauma, or death, a severe or catastrophic impact on environmental health that results in long-term or permanent uninhabitability and requires extensive intervention to prevent further damage or reverse existing damage, or the compounding impacts of multiple moderate-impact events

This guideline provides three impact levels. However, organizations MAY define more granular impact levels and develop their own methodologies for their initial impact assessment activities.

Impact Analysis

The impact analysis considers the level of impact (i.e., Low, Moderate, or High) of compromises of any of the identity system functions (i.e., identity proofing, authentication, and federation) that result in an intruder obtaining unauthorized access to the online service as a member of a particular user group and initiating transactions that cause negative effects on impacted entities. The impact analysis considers the following dimensions:

- User groups (see Sec. 3.1)

- Impacted entities (see Sec. 3.1)

- Impact categories (see Sec. 3.2.1)

- Impact levels (see Sec. 3.2.2)

The impact analysis SHALL consider the level of impact for each impact category for each type of impacted entity if an intruder obtains unauthorized access as a member of each user group. Because different sets of transactions are available to each user group, it is important to consider each user group separately for this analysis.

For example, for an online service that allows for the control, operation, and monitoring of a water treatment facility, each group of users (e.g., technicians who control and operate the facility, auditors and monitoring officials, system administrators) is considered separately based on the transactions available to that user group through the online service. The impact analysis assesses the level of impact (i.e., Low, Moderate, or High) on various impacted entities (e.g., citizens who drink the water, the organization that owns the facility, auditors, monitoring officials) for each of the impact categories being considered if a bad actor obtains unauthorized access to the online service as a member of a user group.

The impact analysis SHALL be performed for each user group that has access to the online service. For each impact category, the impact level is estimated for each impacted entity as a result of a compromise of the online service caused by failures in the identity management functions.

If there is no harm or impact for a given impact category for any entity, the impact level can be marked as None.

The output of this impact analysis is a set of impact levels for each user group that SHALL be documented in a suitable format for further analysis in accordance with Sec. 3.4.
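
One possible "suitable format" is a table per user group, keyed by impact category and impacted entity. The Python sketch below assumes that representation and checks that every combination has been assigned a level. The function name `unassessed_cells` and the dictionary layout are hypothetical; the category names are the minimum set from Sec. 3.2.1.

```python
from itertools import product

MINIMUM_CATEGORIES = [
    "Degradation of mission delivery",
    "Damage to trust, standing, or reputation",
    "Unauthorized access to information",
    "Financial loss or liability",
    "Loss of life or danger to human safety, human health, or environmental health",
]
VALID_LEVELS = {"None", "Low", "Moderate", "High"}


def unassessed_cells(assessment, categories, entities):
    """Return (category, entity) pairs that lack a valid impact level.

    `assessment` is the table for ONE user group: a dict that maps
    (impact category, impacted entity) to an impact level.
    """
    return [
        (category, entity)
        for category, entity in product(categories, entities)
        if assessment.get((category, entity)) not in VALID_LEVELS
    ]
```

For the water treatment example, an organization would build one such table for technicians, one for auditors and monitoring officials, and one for system administrators, and would treat an assessment as complete only when `unassessed_cells` returns an empty list for each table.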

Determine the Combined Impact Level for Each User Group

The impact assessment levels for each user group are combined to establish a single impact level to represent the risks to impacted entities from a compromise of identity proofing, authentication, and/or federation functions for that user group.

Organizations can apply a variety of methods for this combinatorial analysis, such as:

- Using a high-water mark approach across the various impact categories and impacted entities to derive the effective impact level

- Assigning different weights to different impact categories and/or impacted entities and taking an average to derive the effective impact level

- Some other combinatorial logic that aligns with the organization’s mission and priorities

Organizations SHALL document the approach they use to combine their impact assessments into an overall impact level for each of their defined user groups and SHALL apply it consistently across all of their online services. At the conclusion of the combinatorial analysis, organizations SHALL document the impact level for each user group.

The outcome of this step is an effective impact level for each user group due to a compromise of the identity management system functions (i.e., identity proofing, authentication, federation).
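
The first two combination methods listed above can be sketched in Python as follows. This is a minimal illustration, not a prescribed algorithm: the numeric scores assigned to each level, the round-half-up rule, and the function names are assumptions that an organization would replace with its own documented choices.

```python
# Assumed ordinal scores for each impact level (illustrative only).
LEVEL_SCORES = {"None": 0, "Low": 1, "Moderate": 2, "High": 3}
SCORE_LEVELS = {score: level for level, score in LEVEL_SCORES.items()}


def high_water_mark(levels):
    """Return the highest impact level among the assessed levels."""
    return max(levels, key=LEVEL_SCORES.__getitem__, default="None")


def weighted_average(levels_with_weights):
    """Combine (level, weight) pairs into one level using a weighted mean.

    Rounding half up to the nearest level is an assumption of this sketch.
    """
    pairs = list(levels_with_weights)
    if any(weight < 0 for _, weight in pairs):
        raise ValueError("weights must be non-negative")
    total_weight = sum(weight for _, weight in pairs)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    mean = sum(LEVEL_SCORES[level] * weight for level, weight in pairs) / total_weight
    return SCORE_LEVELS[int(mean + 0.5)]
```

The two methods can disagree. For a user group assessed as High in one category and Low in three equally weighted categories, `high_water_mark` yields High while `weighted_average` yields Moderate, which is one reason the chosen approach needs to be documented and applied consistently.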

Select Initial Assurance Levels and Baseline Controls

The effective impact level (i.e., Low, Moderate, or High) serves as a primary input to the process of selecting the initial assurance level for each user group (see Sec. 3.3.1) to identify the corresponding set of baseline digital identity controls from the requirements and guidelines in the companion volumes [SP800-63A], [SP800-63B], and [SP800-63C]. The resulting initial assurance level for each user group applies to all three digital identity system functions (i.e., identity proofing, authentication, and federation).

The initial set of selected digital identity controls and processes will be assessed and tailored in Step 4 (Tailor and document assurance level determinations) based on potential risks generated by the identity management system.
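
The selection step can be pictured as a simple lookup from the effective impact level to an initial assurance level for each applicable identity function. The Python sketch below assumes a one-to-one mapping (Low to level 1, Moderate to level 2, High to level 3); that mapping is not spelled out in the text above and is used here only for illustration, as are the names `IMPACT_TO_XAL` and `initial_assurance_levels`.

```python
# ASSUMPTION: a direct Low/Moderate/High -> 1/2/3 mapping, for illustration.
IMPACT_TO_XAL = {"Low": 1, "Moderate": 2, "High": 3}
FUNCTION_PREFIX = {
    "identity proofing": "IAL",
    "authentication": "AAL",
    "federation": "FAL",
}


def initial_assurance_levels(impact_level, functions):
    """Return the initial assurance level for each applicable function.

    `functions` lists only the identity functions that the online service
    actually uses for the user group (e.g., no "federation" entry when the
    service is not federated).
    """
    number = IMPACT_TO_XAL[impact_level]
    return {FUNCTION_PREFIX[f]: f"{FUNCTION_PREFIX[f]}{number}" for f in functions}
```

The result is only a starting point: the initial levels and their baseline controls remain subject to tailoring before they are finalized.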

Assurance Levels

Depending on the functionality and deployed architecture of the online service, the support of one or more of the identity management functions (i.e., identity proofing, authentication, and federation) may be required. The strength of these functions is described in terms of assurance levels. The RP SHALL identify the types of assurance levels that apply to their online service from the following:

- IAL: The robustness of the identity proofing process to determine the identity of an individual. The IAL is selected to mitigate risks that result from potential identity proofing failures.

- AAL: The robustness of the authentication process itself and the binding between an authenticator and a specific individual’s identifier. The AAL is selected to mitigate risks that result from potential authentication failures.

- FAL: The robustness of the federation process used to communicate authentication and attribute information to an RP from an IdP. The FAL is selected to mitigate risks that result from potential federation failures.

Assurance Level Descriptions

A summary of each of the xALs is provided below. While high-level descriptions of the assurance levels are provided in this subsection, readers of this guideline are encouraged to refer to companion volumes [SP800-63A], [SP800-63B], and [SP800-63C] for normative guidelines and requirements for each assurance level.

Identity Assurance Level

- IAL1: IAL1 supports the real-world existence of the claimed identity and provides some assurance that the applicant is associated with that identity. Core attributes are obtained from identity evidence or self-asserted by the applicant. All core attributes are validated against authoritative or credible sources, and steps are taken to link the attributes to the person undergoing the identity proofing process.

- IAL2: IAL2 requires collecting additional evidence and a more rigorous process for validating the evidence and verifying the identity.

- IAL3: IAL3 adds the requirement for a trained CSP representative (i.e., proofing agent) to interact directly with the applicant, as part of an on-site attended identity proofing session, and the collection of at least one biometric.

Table 1 describes the control objectives (i.e., attack protections) for each identity assurance level.

| IAL | Control Objectives | User Profile |
|---|---|---|
| IAL1 | Limit highly scalable attacks. Protect against synthetic identity. Protect against attacks that use compromised personal information. | Access to personal information is required but limited. User actions are limited (e.g., viewing and making modifications to individual personal information). Fraud cannot be directly perpetrated through available user functions. Users cannot receive payments until an offline or manual process is conducted. |
| IAL2 | Limit scaled and targeted attacks. Protect against basic evidence falsification and theft. Protect against basic social engineering. | Users can view and change financial information (e.g., a direct deposit location). Individuals can directly perpetrate financial fraud through the available application functionality. A user can view or modify other users’ personal information. Users have visibility into or access to proprietary information. |
| IAL3 | Limit sophisticated attacks. Protect against advanced evidence falsification, theft, and repudiation. Protect against advanced social engineering attacks. | Users have direct access to multiple highly sensitive records; administrator access to servers, systems, or security data; the ability to access large sets of data that may reveal sensitive information about one or many users; or access that could result in a breach that would constitute a major incident under OMB guidance. |

Authentication Assurance Level

- AAL1: AAL1 provides basic confidence that the claimant controls an authenticator that is bound to the subscriber account. AAL1 requires either single-factor or multi-factor authentication using a wide range of available authentication technologies. Verifiers are expected to make multi-factor authentication options available at AAL1 and encourage their use. Successful authentication requires the claimant to prove possession and control of the authenticator through a secure authentication protocol.

- AAL2: AAL2 provides high confidence that the claimant controls one or more authenticators that are bound to the subscriber account. Proof of the possession and control of two distinct authentication factors is required through the use of secure authentication protocols. Approved cryptographic techniques are required.

- AAL3: AAL3 provides very high confidence that the claimant controls authenticators that are bound to the subscriber account. Authentication at AAL3 is based on the proof of possession of a key through the use of a cryptographic protocol and either an activation factor or a password. AAL3 authentication requires the use of a public-key cryptographic authenticator with a non-exportable private key that provides phishing resistance. Approved cryptographic techniques are required.

Table 2 describes the control objectives (i.e., attack protections) for each authentication assurance level.

| AAL | Control Objectives | User Profile |
|---|---|---|
| AAL1 | Provide minimal protections against attacks. Deter password-focused attacks. | No personal information is available to any users, but some profile or preference data may be retained to support usability and the customization of applications. |
| AAL2 | Require multifactor authentication. Offer phishing-resistant options. | Individual personal information can be viewed or modified by users. Limited proprietary information can be viewed by users. |
| AAL3 | Require phishing resistance and verifier compromise protections. | Highly sensitive information can be viewed or modified. Multiple proprietary records can be viewed or modified by users. Privileged user access could result in a breach that would constitute a major incident under OMB guidance. |

Federation Assurance Level

- FAL1: FAL1 provides a basic level of protection for federation transactions and supports a wide range of use cases and deployment decisions.

- FAL2: FAL2 provides a high level of protection for federation transactions and additional protection against a variety of attacks against federated systems, including attempts to inject assertions into a federated transaction.

- FAL3: FAL3 provides a very high level of protection for federation transactions and establishes very high confidence that the information communicated in the federation transaction matches what was established by the CSP and IdP.

Table 3 describes the control objectives (i.e., attack protections) for each federation assurance level.

| FAL | Control Objectives | User Profile |
|---|---|---|
| FAL1 | Protect against forged assertions. | No sensitive personal information is available to any users, but some profile or preference data may be retained to support usability or the customization of applications. |
| FAL2 | Protect against forged assertions and injection attacks. | Users can access personal information and other sensitive data with appropriate authentication assurance levels (e.g., AAL2 or above). |
| FAL3 | Protect against IdP compromise. | Federation primarily supports attribute exchange. Users have access to classified or highly sensitive information or services that could result in a breach that would constitute a major incident under OMB guidance. |

Initial Assurance Level Selection

Organizations SHALL develop and document a process and governance model for selecting initial assurance levels and controls based on the potential impacts of failures in the digital identity system. The following subsections provide guidance on the major elements to consider in the process for selecting initial assurance levels.

The overall impact level for each user group is used as the basis for selecting the initial assurance level and related technical and process controls for the online service under assessment based on the impacts of failures within the digital identity functions. The initial assurance levels and controls can be further assessed and tailored in the next step of the DIRM process.

Although the initial impact assessment (see Sec. 3.2) and the combined impact level determination for each user group (see Sec. 3.2.4) do not differentiate between identity proofing, authentication, and federation risks, the selected initial xALs may still be different. For example, the initial impact assessment may be low impact and indicate IAL1 and FAL1 but may also determine that personal information is accessible and therefore requires AAL2. Similarly, the impact assessment may determine that no proofing is required, resulting in no IAL regardless of the baselines for authentication and federation. Further changes may result from the tailoring process as discussed in Step 4: Tailoring.

The output of this step is a set of initial xALs that are applicable to the online service for each user group.

Selecting Initial IAL

Before selecting an initial assurance level, RPs must determine whether identity proofing is needed for the users of their online services. Identity proofing is not required if the online service does not need any personal information to execute digital transactions. If personal information is needed, the RP must determine whether validated attributes are required or if self-asserted attributes are acceptable. The system may also be able to operate without identity proofing if the potential harms from accepting self-asserted attributes are insignificant. In such cases, the identity proofing processes described in [SP800-63A] are not applicable to the system.

If the online service does require identity proofing, an initial IAL is selected through a simple mapping process:

- Low impact: IAL1

- Moderate impact: IAL2

- High impact: IAL3

The organization SHALL document whether identity proofing is required for their application and, if it is, SHALL select an initial IAL for each user group based on the effective impact level determination from Sec. 3.2.4.

The IAL reflects the level of assurance that an applicant holds the claimed real-life identity. The initial selection assumes that higher potential impacts of failures in the identity proofing process should be mitigated by higher assurance processes.
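The mapping above can be sketched in a few lines of Python. This is an illustrative sketch only, not part of the guideline: the names `Impact`, `INITIAL_IAL`, and `select_initial_ial`, and the `proofing_required` flag, are assumptions introduced here to represent the organization's documented decision about whether identity proofing is needed.

```python
from enum import Enum
from typing import Optional


class Impact(Enum):
    """Effective impact level for one user group (hypothetical type)."""
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"


# Mapping stated in this subsection: low -> IAL1, moderate -> IAL2, high -> IAL3.
INITIAL_IAL = {
    Impact.LOW: "IAL1",
    Impact.MODERATE: "IAL2",
    Impact.HIGH: "IAL3",
}


def select_initial_ial(impact: Impact, proofing_required: bool) -> Optional[str]:
    """Return the initial IAL for a user group, or None when no proofing is needed."""
    if not proofing_required:
        # No identity proofing means no IAL applies, regardless of impact level.
        return None
    return INITIAL_IAL[impact]
```

The result is only a starting point. The tailoring step may still raise or lower the level, so a real implementation would also record the decision and its rationale.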

Selecting Initial AAL

Authentication is needed for online services that offer access to personal information, protected information, or subscriber accounts. Organizations should consider the legal, regulatory, or policy requirements that govern online services when making decisions regarding the application of authentication assurance levels and authentication mechanisms. For example, [EO13681] states that “all organizations making personal data accessible to citizens through digital applications require the use of multiple factors of authentication,” which requires a minimum selection of AAL2 for applications that meet those criteria.

If the online service requires authentication to be implemented, an initial AAL is selected through a simple mapping process:

- Low impact: AAL1

- Moderate impact: AAL2

- High impact: AAL3

The organization SHALL document whether authentication is needed for their online service and, if it is, SHALL select an initial AAL for each user group based on the effective impact level determination from Sec. 3.2.4.

The AAL reflects the level of assurance that the claimant is the same individual to whom the authenticator was registered. The initial selection assumes that higher potential impacts of failures in the authentication process should be mitigated by higher assurance processes.
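As a rough illustration, the AAL mapping can be combined with a minimum level imposed by law, regulation, or policy, such as the multi-factor requirement cited above. The function name and the `policy_minimum` parameter are hypothetical; how an organization determines that minimum is outside the scope of this sketch.

```python
# Ordered from lowest to highest assurance so levels can be compared.
AAL_ORDER = ["AAL1", "AAL2", "AAL3"]

# Mapping stated in this subsection.
INITIAL_AAL = {"low": "AAL1", "moderate": "AAL2", "high": "AAL3"}


def select_initial_aal(impact, authentication_required=True, policy_minimum=None):
    """Return the initial AAL for a user group, or None if no authentication is needed.

    policy_minimum is an assumed input: the lowest AAL permitted by applicable
    legal, regulatory, or policy requirements (for example, "AAL2").
    """
    if not authentication_required:
        return None
    selected = INITIAL_AAL[impact]
    if policy_minimum is not None:
        # Keep whichever level is higher in AAL_ORDER.
        selected = max(selected, policy_minimum, key=AAL_ORDER.index)
    return selected
```

For example, a low-impact service that exposes personal data and is subject to a multi-factor requirement would be called as `select_initial_aal("low", policy_minimum="AAL2")`, which yields AAL2 rather than AAL1.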

Selecting Initial FAL

Identity federation brings many benefits, including a convenient customer experience that avoids redundant, costly, and often time-consuming identity processes. The benefits of federation through a general-purpose IdP model or a subscriber-controlled wallet model are covered in Sec. 5 of [SP800-63C]. However, not all online services will be able to make use of federation, whether for risk-based reasons or due to legal or regulatory requirements. Consistent with [M-19-17], federal agencies that operate online services SHOULD implement federation as an option for user access.

If the online service implements identity federation, an initial FAL is selected through a simple mapping process:

- Low impact: FAL1

- Moderate impact: FAL2

- High impact: FAL2 or FAL3

The organization SHALL document whether federation will be used for their online service and, if it is, SHALL select an initial FAL for each user group based on the effective impact level determination from Sec. 3.2.4.

For online services that are assessed to be high impact, organizations SHALL conduct a further assessment to evaluate the risk of a compromised IdP to determine whether FAL2 or FAL3 is more appropriate for their use case. Considerations SHOULD include the type of data being accessed, the location of the IdP (e.g., whether the IdP is internal or external to their enterprise boundary), and the availability of bound authenticators or holder-of-key capabilities.

The FAL reflects the level of assurance in identity assertions that convey the results of authentication processes and relevant identity information to RP online services. The initial selection assumes that higher potential impacts of failures in federated identity architectures should be mitigated by higher assurance processes.
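Unlike the IAL and AAL mappings, the high-impact case for FAL has two candidates and depends on a further assessment. The sketch below is one possible way to model that branch. The `idp_compromise_risk_acceptable` flag is a hypothetical stand-in for the outcome of the organization's own assessment of IdP compromise risk, which this code does not perform.

```python
# Candidate FALs per impact level, as listed in this subsection.
INITIAL_FAL_CANDIDATES = {
    "low": ("FAL1",),
    "moderate": ("FAL2",),
    "high": ("FAL2", "FAL3"),
}


def select_initial_fal(impact, uses_federation, idp_compromise_risk_acceptable=None):
    """Return the initial FAL for a user group, or None if federation is not used."""
    if not uses_federation:
        return None
    candidates = INITIAL_FAL_CANDIDATES[impact]
    if len(candidates) == 1:
        return candidates[0]
    # High impact: the further assessment must be completed before choosing.
    if idp_compromise_risk_acceptable is None:
        raise ValueError("high impact requires an assessment of IdP compromise risk")
    # Assumption: FAL3 is chosen when the risk of a compromised IdP is not acceptable.
    return "FAL2" if idp_compromise_risk_acceptable else "FAL3"
```

Raising an error when the assessment is missing is a design choice in this sketch. It keeps a high-impact service from silently defaulting to either level.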

Identify Baseline Controls

The selection of the initial assurance levels for each user group and each of the applicable identity functions (i.e., IAL, AAL, and FAL) serves as the basis for selecting the baseline digital identity controls from the guidelines in companion volumes [SP800-63A], [SP800-63B], and [SP800-63C]. As described in Sec. 3.4, the baseline controls include technical and process controls that will be assessed against additional potential impacts.

Using the initial xALs selected in Sec. 3.3.3, the organization SHALL identify the applicable baseline controls for each user group as follows:

- Initial IAL and related technical and process controls from [SP800-63A]

- Initial AAL and related technical and process controls from [SP800-63B]

- Initial FAL and related technical and process controls from [SP800-63C]

While online service providers must assess and determine the xALs that are appropriate for protecting their applications, the selection of these assurance levels does not mean that the online service provider must implement the related technical and process controls independently. Based on the identity model that the online service provider implements, some or all of the assurance levels and related controls may be implemented by an external entity, such as a third-party CSP or IdP.

The output of this step is a set of assigned xALs and baseline controls for each user group.
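A minimal sketch of this step's output, assuming the initial xALs for one user group are held in a dictionary keyed by identity function. The function and variable names are illustrative; the volume labels are the citation keys used in this document.

```python
# Companion volume that defines the baseline controls for each identity function.
VOLUME_FOR_FUNCTION = {
    "IAL": "SP800-63A",
    "AAL": "SP800-63B",
    "FAL": "SP800-63C",
}


def baseline_control_sources(initial_xals):
    """Map each selected xAL to the volume that holds its baseline controls.

    initial_xals is an assumed structure such as
    {"IAL": "IAL2", "AAL": "AAL2", "FAL": None}, where None means the
    function (e.g., federation) does not apply to this user group.
    """
    sources = {}
    for function, level in initial_xals.items():
        if level is None:
            continue  # No assurance level selected, so no baseline controls apply.
        sources[level] = VOLUME_FOR_FUNCTION[function]
    return sources
```

Running this once per user group gives the set of assigned xALs together with a pointer to where each level's baseline controls are defined.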

Tailor and Document Assurance Levels

The second dimension of risk addressed by the DIRM process focuses on risks from the identity management system that represent the unintended negative consequences of the initial selection of xALs and related technical and process controls in Sec. 3.3.4.

Tailoring provides a process to modify an initially assessed assurance level and implement compensating or supplemental controls based on ongoing detailed risk assessments. It provides a pathway for flexibility and enables organizations to achieve risk management objectives that align with their specific context, users, and threat environments. This process focuses on assessing the risks posed by the identity system itself, specific environmental threats, and privacy and customer experience impacts. It does not prioritize any specific risk area or outcomes for organizations. Making decisions that balance different types of risks to meet organizational outcomes remains the responsibility of organizations.

While organizations are required to implement and document a tailoring process, this guideline does not require the initial assurance levels or control sets to be modified as a result. However, organizations are expected to complete the assessments in the tailoring section to fully account for the outcomes of their selected initial assurance levels.

Within the tailoring step, organizations SHALL focus on impacts to mission delivery due to the implementation of identity management controls, including the possibility that legitimate users are unable to access desired online services or experience enough friction or frustration with the identity system (and technology selection) that they abandon their attempts to access the online service.

As a part of the tailoring process, organizations SHALL review the Digital Identity Acceptance Statements and practice statements from CSPs and IdPs that they use or intend to use. However, organizations SHALL also conduct their own analysis to ensure that the organization’s specific mission and the communities being served by the online service are given due consideration for tailoring purposes. As a result, the organization MAY require their chosen CSP to strengthen or provide optionality in the implementation of certain controls to address risks and unintended impacts to the organization’s mission and the communities served.

To promote interoperability and consistency, organizations SHOULD implement their assessed or tailored xALs consistent with the normative guidance in this document. However, these guidelines provide flexibility to allow organizations to tailor the initial xALs and related controls to meet specific mission needs, address unique risk appetites, and provide secure and accessible online services. In doing so, CSPs MAY offer and organizations MAY utilize tailored sets of controls that differ from the normative statements in this guideline.

Organizations SHALL establish and document their xAL tailoring process. At a minimum, this process:

- SHALL follow a documented governance approach to allow for decision-making

- SHALL document all decisions in the tailoring process, including the assessed xALs, modified xALs, and supplemental and compensating controls in the Digital Identity Acceptance Statement (see Sec. 3.4.4)

- SHALL justify and document all risk-based decisions or modifications to the initially assessed xALs in the Digital Identity Acceptance Statement (see Sec. 3.4.4)

- SHOULD establish a cross-functional capability to support subject-matter analysis of xAL selection impacts in the tailoring process (e.g., subject-matter experts who can speak about risks and considerations related to privacy, customer experience, fraud and impersonation impacts, and other germane areas)

The tailoring process promotes a structured means of balancing risks and impacts while protecting online services, systems, and data in a manner that enables mission success and supports security, customer experience, and privacy for individuals.

Assess Privacy, Customer Experience, and Threat Resistance

When selecting and tailoring assurance levels for specific online services, considerations extend beyond the initial impact assessment in Sec. 3.2. When progressing from the initial assurance level selection in Sec. 3.3.4 to the final xAL selection and implementation, organizations SHALL conduct detailed assessments of the controls defined for the initially selected xALs to identify potential impacts in the operational environment.

At a minimum, organizations SHALL assess the impacts and potential unintended consequences related to the following areas:

- Privacy – Identify unintended consequences to the privacy of individuals who will be subject to the controls at an assessed xAL and of individuals affected by organizational or third-party practices related to the establishment, management, or federation of a digital identity. A privacy assessment SHOULD leverage an existing Privacy Threshold Analysis (PTA) or Privacy Impact Assessment (PIA) as an input during the privacy assessment process. However, as the goal of the privacy assessment is to identify privacy risks that arise from the initial assurance level selection, additional assessments and evaluations that are specific to the baseline controls for the assurance levels may be required for the underlying information system.

- Customer Experience – Determine whether implementation of the initial assurance levels may create substantial or unacceptable barriers to individuals seeking to access services. Customer experience assessments SHALL consider impacts that result from the identity management controls to ensure that they do not cause undue burdens, frustrations, or frictions for individuals and that there are pathways to provide service to users of all capabilities, resources, technology access, and economic statuses.

- Threat Resistance – Determine whether the defined assurance level and related controls will address specific threats to the online service based on the operational environment, its threat actors, and known tactics, techniques, and procedures (TTPs). Threat assessments SHALL consider specific known and potential threats, threat actors, and TTPs within the implementation environment for the identity management functions. For example, certain benefits programs may be more subject to familial threats or collusion. Based on their assessments, organizations MAY implement supplemental controls specific to the communities served by their online service. Conversely, organizations MAY tailor their assessed xAL down or modify their baseline controls if their threat assessment indicates that a reduced threat posture is appropriate based on their environment.

Organizations SHOULD leverage consultation and feedback from the entities and communities served to ensure that the tailoring process addresses their known constraints.

Organizations SHOULD also conduct additional business-specific assessments as appropriate to fully represent mission- and domain-specific considerations that have not been captured here. All assessments applied during the tailoring phase SHALL be extended to any compensating or supplemental controls, as defined in Sec. 3.4.2 and Sec. 3.4.3. While identity system costs are not specifically included as an input for DIRM processes or as a metric for continuous evaluation, the costs and cost effectiveness of implementation and long-term operation are inherent considerations for responsible program and risk management. Based on their available funding and resources, organizations will likely need to make trade-offs that can be more effectively informed by the DIRM process and its outputs. Any cost-based decisions that result in modifications to assessed xALs or baseline controls SHALL be documented in the Digital Identity Acceptance Statement (see Sec. 3.4.4).

The outcome of this step is a set of risk assessments for privacy, customer experience, threat resistance, and other dimensions that inform the tailoring of the initial assurance levels and the selection of compensating and supplemental controls.

Identify Compensating Controls

A compensating control is a management, operational, or technical control employed by an organization in lieu of a normative control (i.e., SHALL statements) in the defined xALs. To the greatest degree practicable, a compensating control is intended to address the same risks as the baseline control it is replacing. Organizations MAY choose to implement a compensating control if they are unable to implement a baseline control or when a risk assessment indicates that a compensating control sufficiently mitigates risk in alignment with organizational risk tolerance. This control MAY be a modification to the normative statements defined in these guidelines or MAY be applied elsewhere in an online service, digital transaction, or service life cycle. For example:

- A federal agency could choose to use a federal background investigation and checks [FIPS201] to compensate for the identity evidence validation with authoritative sources requirement under these guidelines.

- An organization could choose to implement stricter auditing and transactional review processes on a payment application where verification processes using weaker forms of identity evidence were accepted due to the lack of required evidence in the end-user population.

Where compensating controls are implemented, organizations SHALL document the compensating control, the rationale for the deviation, comparability of the chosen alternative, and any resulting residual risks. CSPs and IdPs that implement compensating controls SHALL communicate this information to all potential RPs prior to integration to allow the RP to assess and determine the acceptability of the compensating controls for their use cases.

The process of tailoring allows organizations and service providers to make risk-based decisions regarding how they implement their xALs and related controls. It also provides a mechanism for documenting and communicating decisions through the Digital Identity Acceptance Statement described in Sec. 3.4.4.

Identify Supplemental Controls

Supplemental controls may be added to further strengthen the baseline controls specified for the organization’s selected assurance levels. Organizations SHOULD identify and implement supplemental controls to address specific threats in the operational environment that may not be addressed by the baseline controls. For example:

- To complete the proofing process, an organization could choose to verify an individual against additional pieces of identity evidence beyond what is required by the assurance level due to a high prevalence of fraudulent attempts.

- An organization could restrict users to only phishing-resistant authentication at AAL2.

- An organization could choose to implement risk-scoring analytics and re-proofing mechanisms to confirm a user’s identity when their access attempts exhibit certain risk factors.

Any supplemental controls SHALL be assessed for impacts based on the same factors used to tailor the organization’s assurance level and SHALL be documented.

Digital Identity Acceptance Statement

Organizations SHALL develop a Digital Identity Acceptance Statement (DIAS) to document the results of the DIRM process for (i) each online service managed by the organization, and (ii) each external online service used to support the mission of the organization, including software-as-a-service offerings (e.g., social media platforms, email services, online marketing services). RPs who intend to use a particular CSP/IdP SHALL review the latter’s DIAS and incorporate relevant information into the organization’s DIAS for each online service.

Organizations SHALL prepare a DIAS for their online service that includes, at a minimum:

- Initial impact assessment results,

- Initially assessed xALs,

- Tailored xAL and rationale if the tailored xAL differs from the initially assessed xAL,

- All compensating controls with their comparability or residual risks, and

- All supplemental controls.

Federal agencies SHOULD include this information in the information system authorization package described in [NISTRMF].
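The minimum contents listed above lend themselves to a simple completeness check. The sketch below is an assumption-laden illustration, not a prescribed format: the class names, field names, and the `missing_items` method are all hypothetical, and a real DIAS would carry far more detail.

```python
from dataclasses import dataclass, field


@dataclass
class CompensatingControl:
    description: str
    comparability_or_residual_risk: str  # Required alongside each compensating control.


@dataclass
class AcceptanceStatement:
    """Hypothetical record of the minimum DIAS contents for one online service."""
    impact_assessment_results: str
    initial_xals: dict
    tailored_xals: dict
    tailoring_rationale: str = ""
    compensating_controls: list = field(default_factory=list)
    supplemental_controls: list = field(default_factory=list)

    def missing_items(self):
        """Return a list of minimum items that are absent or incomplete."""
        problems = []
        if not self.impact_assessment_results:
            problems.append("initial impact assessment results")
        if not self.initial_xals:
            problems.append("initially assessed xALs")
        # A rationale is needed only when tailoring changed the assessed levels.
        if self.tailored_xals != self.initial_xals and not self.tailoring_rationale:
            problems.append("rationale for tailored xALs")
        for control in self.compensating_controls:
            if not control.comparability_or_residual_risk:
                problems.append(
                    f"comparability or residual risk for: {control.description}"
                )
        return problems
```

A review workflow could call `missing_items()` before the statement is accepted and reject any statement for which the list is not empty.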

CSPs/IdPs SHALL implement the DIRM process and develop a DIAS for the services they offer if they deviate from the normative guidance in these guidelines, including when supplemental or compensating controls are added. To complete a DIRM of their offered assurance levels and controls, CSPs/IdPs MAY base their assessment on anticipated or representative digital identity services that they wish to support. In creating this risk assessment, they SHOULD seek input from real-world RPs on their user populations and anticipated context. The DIAS prepared by a CSP SHALL include, at a minimum:

- Claimed xAL, related controls, and rationale for any deviations from normative guidance;

- All compensating controls with their comparability or residual risks; and

- All supplemental controls.

The DIRM process for external online services used by the organization SHALL consider relevant inputs from the provider of the service and document the results in a DIAS. The DIAS prepared by the organization for external online services SHALL include, at a minimum:

- Assessed xAL, related controls, and rationale for any deviations from normative guidance;

- All compensating controls with their comparability or residual risks; and

- All supplemental controls.

The final implemented xALs do not all need to be at the same level. There may be variance based on the functions of the online service, the impact assessment, and the tailoring process.

Continuously Evaluate and Improve

Continuous improvement is a critical tool for keeping pace with the threat and technology environment and identifying programmatic gaps that need to be addressed to balance risk management objectives. For instance, an organization may determine that a portion of the target population intended to be served by the online service does not have access to affordable high-speed internet services, which are needed to support remote identity proofing. The organization could bridge this gap by establishing a program that offers local, in-person proofing services within the community. This could involve providing appointments with proofing agents who can meet individuals at more accessible locations, such as their local community center, the nearest post office, a partner business facility, or even at the individual’s home.

To address the shifting environment in which they operate and more rapidly address service capability gaps, organizations SHALL implement a continuous evaluation and improvement program that leverages input from end users who have interacted with the identity management system as well as performance metrics for the online service. This program SHALL be documented, including the metrics that are collected, the sources of data required to enable performance evaluation, and the processes in place for taking timely actions based on the continuous improvement process. This program and its effectiveness SHOULD be assessed on a regular basis to ensure that outcomes are being achieved and that programs are addressing issues in a timely manner.

Additionally, organizations SHALL monitor the evolving threat landscape to stay informed of the latest threats and fraud tactics. Organizations SHALL regularly assess the effectiveness of current security measures and fraud detection capabilities against the latest threats and fraud tactics.

Evaluation Inputs

To fully understand the performance of their identity system, organizations will need to identify critical inputs to their continuous evaluation process. At a minimum, these inputs SHALL include:

- Integrated CSP, IdP, and authentication functions as well as validation, verification, and fraud management systems, as appropriate

- Customer feedback mechanisms, such as complaint processes, helpdesk statistics, and other user feedback (e.g., surveys, interviews, or focus groups)

- Threat analysis, threat reporting, and threat intelligence feeds as available

- Fraud trends, fraud investigation results, and fraud metrics as available

- The results of ongoing customer experience assessments and privacy assessments

RPs SHALL document their metrics, reporting requirements, and data inputs for any CSP, IdP, or other integrated identity service to ensure that expectations are appropriately communicated to partners and vendors.

Performance Metrics

The exact metrics available to organizations will vary based on the technologies, architectures, and deployment methods they use. Additionally, the availability and usefulness of certain metrics will vary over time. Therefore, these guidelines do not attempt to define a comprehensive set of metrics for all scenarios. Table 4 provides a set of recommended metrics that organizations SHOULD track as part of their continuous evaluation program. However, organizations are not constrained by this table and SHOULD implement metrics based on their specific systems, technology, and program needs. See [SP800-55V2] for more information on identifying additional performance metrics. In Table 4, all references to unique users include both legitimate users and imposters.

| Title | Description | Type |

|---|---|---|

| Pass Rate (Overall) | Percentage of unique users who successfully complete identity proofing | Proofing |

| Pass Rate (Per Proofing Type) | Percentage of unique users who are successfully proofed for each offered type (i.e., remote unattended, remote attended, on-site attended, on-site unattended) | Proofing |

| Fail Rate (Overall) | Percentage of unique users who start the identity proofing process but are unable to successfully complete all of the steps | Proofing |

| Estimated Adjusted Fail Rate | Percentage of failures adjusted to account for identity proofing attempts that are suspected to be fraudulent | Proofing |

| Fail Rate (Per Proofing Type) | Percentage of unique users who do not complete proofing due to a process failure for each offered type (i.e., remote unattended, remote attended, on-site attended, on-site unattended) | Proofing |

| Abandonment Rate (Overall) | Percentage of unique users who start the identity proofing process but do not complete it without failing a process | Proofing |

| Abandonment Rate (Per Proofing Type) | Percentage of unique users who start a specific type of identity proofing process but do not complete it without failing a process | Proofing |

| Failure Rates (Per Proofing Process Step) | Percentage of unique users who are unsuccessful at completing each identity proofing step in a CSP process | Proofing |

| Completion Times (Per Proofing Type) | Average time that it takes a user to complete each defined proofing type offered as part of an identity service | Proofing |

| Authenticator Type Usage | Percentage of subscribers who have an active authenticator by each type available | Authentication |

| Authentication Failures | Percentage of authentication events that fail (not to include authentication attempts that are successful after re-entry of an authenticator output) | Authentication |

| Account Recovery Attempts | The number of account or authenticator recovery processes initiated by subscribers | Authentication |

| Confirmed Unauthorized Access or Fraud | Percentage of total transaction events (i.e., identity proofing + authentication events) that the organization determines to be unauthorized or fraudulent through analysis or self-reporting | Fraud |

| Suspected Unauthorized Access or Fraud | Percentage of total transaction events (i.e., identity proofing + authentication events) that are suspected to be unauthorized or fraudulent | Fraud |

| Reported Unauthorized Access or Fraud | Percentage of total transaction events (i.e., identity proofing + authentication events) reported to be unauthorized or fraudulent by users | Fraud |

| Unauthorized Access or Fraud (Per Proofing Type) | Number of identity proofing events that are suspected or reported as being fraudulent for each available type of proofing | Fraud |

| Unauthorized Access or Fraud (Per Authentication Type) | Number of authentication events that are suspected or reported to be unauthorized or fraudulent by each available type of authentication | Fraud |

| Help Desk Calls | Number of calls received by the CSP or identity service | Customer Experience |

| Help Desk Calls (Per Type) | Number of calls received related to each offered service (e.g., proofing failures, authenticator resets, complaints) | Customer Experience |

| Help Desk Resolution Times | Average length of time that it takes to resolve a complaint or help desk ticket | Customer Experience |

| Customer Satisfaction Surveys | The results of customer feedback surveys conducted by CSPs, RPs, or both | Customer Experience |

| Redress Requests | The number of redress requests received related to the identity management system | Customer Experience |

| Redress Resolution Times | The average time it takes to resolve redress requests related to the identity management system | Customer Experience |

The data used to generate continuous evaluation metrics may not always reside with the identity program or the organizational entity responsible for identity management systems. The intent of these metrics is to integrate with existing data sources whenever possible to collect information that is critical to identity program evaluation. For example, customer service representative (CSR) teams may already have substantial information on customer requests, complaints, or concerns. Organizations that implement and maintain identity management systems are expected to coordinate with these teams to acquire the information needed to discern identity management system-related complaints or issues.
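
As an illustration of how some of the proofing metrics in the table above might be derived from existing records, the following is a minimal sketch. The record layout, outcome labels, and the `suspected_fraud` flag are assumptions made for this example. These guidelines do not prescribe a formula for the Estimated Adjusted Fail Rate, so the adjustment shown (dropping suspected-fraud failures from both the numerator and the denominator) is only one possible interpretation.

```python
from collections import Counter

# Hypothetical per-user proofing outcomes. Field names and outcome labels
# are assumptions for this sketch, not terms defined by these guidelines.
attempts = [
    {"user": "u1", "proofing_type": "remote unattended", "outcome": "completed"},
    {"user": "u2", "proofing_type": "remote unattended", "outcome": "failed"},
    {"user": "u3", "proofing_type": "remote attended", "outcome": "abandoned"},
    {"user": "u4", "proofing_type": "remote attended", "outcome": "completed"},
    {"user": "u5", "proofing_type": "remote unattended", "outcome": "failed",
     "suspected_fraud": True},
]


def rate(count, total):
    """Return count/total as a percentage, or 0.0 when there is no data."""
    return 100.0 * count / total if total else 0.0


def proofing_metrics(records):
    """Compute overall fail, abandonment, and adjusted fail rates."""
    total = len(records)
    outcomes = Counter(r["outcome"] for r in records)
    fraud_failures = sum(
        1 for r in records
        if r["outcome"] == "failed" and r.get("suspected_fraud", False)
    )
    # Assumed adjustment: exclude suspected-fraud failures entirely.
    return {
        "fail_rate": rate(outcomes["failed"], total),
        "abandonment_rate": rate(outcomes["abandoned"], total),
        "estimated_adjusted_fail_rate": rate(
            outcomes["failed"] - fraud_failures, total - fraud_failures
        ),
    }


def fail_rate_by_type(records):
    """Compute the fail rate separately for each proofing type."""
    totals = Counter(r["proofing_type"] for r in records)
    failures = Counter(
        r["proofing_type"] for r in records if r["outcome"] == "failed"
    )
    return {ptype: rate(failures[ptype], totals[ptype]) for ptype in totals}


metrics = proofing_metrics(attempts)
by_type = fail_rate_by_type(attempts)
print(metrics)
print(by_type)
```

In practice, the input records would likely come from several systems (e.g., proofing logs and fraud case management), which is why coordination with the teams that own those data sources matters.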

Measurement in Support of Customer Experience Outcomes

A primary goal of continuous improvement is to enhance customer experience, usability, and accessibility outcomes for different user populations. As a result, the metrics collected by organizations SHOULD be further evaluated to provide insights into the performance of their identity management systems for their supported communities. Where possible, these efforts SHOULD avoid the collection of additional personal information and instead use informed analyses of proxy data to identify potential performance issues. This can include comparing and filtering the metrics to understand deviations in performance across different user populations based on other available data, such as zip code, geographic region, age, or sex.
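
The comparison described above can be sketched as a simple grouping of an existing metric by a proxy attribute. The record layout, the `region` key, and the 10-percentage-point threshold are assumptions chosen for illustration. An organization would need to select its own proxy data and thresholds and account for small sample sizes.

```python
from collections import defaultdict


def fail_rates_by_group(records, key):
    """Return the proofing fail rate (in percent) for each value of `key`."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for record in records:
        group = record[key]
        totals[group] += 1
        if record["failed"]:
            failures[group] += 1
    return {g: 100.0 * failures[g] / totals[g] for g in totals}


def flag_deviations(rates, overall, threshold_points=10.0):
    """List groups whose fail rate exceeds the overall rate by the threshold."""
    return sorted(g for g, r in rates.items() if r - overall > threshold_points)


# Hypothetical data: 10 users per region, keyed by coarse region only so that
# no additional personal information is collected for the analysis.
records = (
    [{"region": "north", "failed": i < 1} for i in range(10)]
    + [{"region": "south", "failed": i < 4} for i in range(10)]
)

rates = fail_rates_by_group(records, "region")
overall = 100.0 * sum(r["failed"] for r in records) / len(records)
flagged = flag_deviations(rates, overall)
print(rates, overall, flagged)
```

A flagged group is only a prompt for further investigation. It does not by itself establish the cause of the deviation.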

Redress

Designing services that support a wide range of populations requires processes to adjudicate issues and provide redress³ as warranted. Service failures, disputes, and other issues tend to arise as part of normal operations, and their impacts can vary broadly, from minor inconveniences to major disruptions or damage. Furthermore, the same issue experienced by one person or community as an inconvenience can have disproportionately damaging impacts on other individuals and communities.

To enable access to critical online services while deterring identity-related fraud and cybersecurity threats, it is essential for organizations to plan for potential issues and to design redress approaches that aim to be fair, transparent, easy for legitimate claimants to navigate, and resistant to exploitation attempts.

Understanding when and how harms might be occurring is a critical first step for organizations to take informed action. Continuous evaluation and improvement programs can play a key role in identifying instances and patterns of potential harm. Moreover, there may be business processes in place outside of those established to support identity management that can be leveraged as part of a comprehensive approach to issue adjudication and redress. Beyond these activities, additional practices can be implemented to ensure that users of identity management systems are able to voice their concerns and have a path to redress. Requirements for these practices include:

- RPs and CSPs SHALL enable individuals to convey grievances and seek redress through an issue handling process that is documented, accessible, trackable, and usable by all individuals and whose instructions are easy to find on a public-facing website.

- RPs and CSPs SHALL institute a governance model for implementing this issue handling process, including documented roles and responsibilities.

- The issue handling process SHALL be implemented as a dedicated function that includes procedures for:

  - Impartially reviewing pertinent evidence,

  - Requesting and collecting additional evidence that informs the issues, and

  - Expeditiously resolving issues and determining corrective action.

- RPs and CSPs SHALL make human support personnel available to intervene and override issue adjudication outputs generated by algorithmic support mechanisms.

- RPs and CSPs SHALL educate support personnel on issue handling procedures for the digital identity management system, the avenues for redress, and the alternatives available to gain access to services.

- RPs and CSPs SHALL implement a process for personnel and technologies that provide support functions to report and address major barriers that end users face and grievances they may have.

- RPs and CSPs SHALL incorporate findings derived from the issue handling process into continuous evaluation and improvement activities.

Organizations are encouraged to consider these and other emerging redress practices. Prior to adopting any new redress practice, including supporting technology, organizations SHOULD test the practice with users to avoid the introduction of unintended consequences, particularly those that may counteract or contradict the goals associated with redress. In addition, organizations SHALL assess the integrity and performance of their redress mechanisms and implement controls to prevent, detect, and remediate attempted identity fraud involving those mechanisms.
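
As a rough illustration of how an issue handling process could be made trackable while allowing human personnel to override an algorithmic outcome, consider the sketch below. The `Issue` class, its fields, and the decision labels are hypothetical and are not defined by these guidelines. A real implementation would also need access control, durable storage, and protections against fraudulent redress attempts.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional, Tuple


@dataclass
class Issue:
    """A hypothetical redress issue record with an audit trail."""
    issue_id: str
    description: str
    evidence: List[str] = field(default_factory=list)
    automated_decision: Optional[str] = None
    final_decision: Optional[str] = None
    decided_by: Optional[str] = None
    history: List[Tuple[datetime, str]] = field(default_factory=list)

    def _log(self, event: str) -> None:
        # Every state change is timestamped so the issue stays trackable.
        self.history.append((datetime.now(timezone.utc), event))

    def add_evidence(self, item: str) -> None:
        self.evidence.append(item)
        self._log(f"evidence added: {item}")

    def record_automated_decision(self, decision: str) -> None:
        # The automated output is kept separately so an override never erases it.
        self.automated_decision = decision
        self.final_decision = decision
        self.decided_by = "automated"
        self._log(f"automated decision: {decision}")

    def human_override(self, reviewer: str, decision: str, reason: str) -> None:
        # A human reviewer replaces the final decision and records why.
        self.final_decision = decision
        self.decided_by = reviewer
        self._log(f"override by {reviewer}: {decision} ({reason})")


issue = Issue("R-0001", "Proofing rejected after document capture")
issue.add_evidence("applicant statement")
issue.record_automated_decision("deny")
issue.human_override("reviewer-7", "approve", "document verified manually")
print(issue.final_decision, issue.decided_by, len(issue.history))
```

Keeping the automated decision alongside the final decision lets findings from overrides feed back into continuous evaluation and improvement activities.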

Cybersecurity, Fraud, and Identity Program Integrity

The close coordination of identity functions with teams that are responsible for cybersecurity, privacy, threat intelligence, fraud detection, and program integrity enables more complete protection of business capabilities and constant improvement. For example, payment fraud data collected by program integrity teams could provide indicators of compromised subscriber accounts and potential weaknesses in identity proofing implementations. Similarly, threat intelligence teams may learn of new TTPs that could impact identity proofing, authentication, and federation processes. Organizations SHALL establish consistent mechanisms for the exchange of information between stakeholders that are responsible for critical internal security and fraud prevention. Organizations SHOULD do the same for external stakeholders and identity services that comprise their online services.

When organizations are supported by external identity providers (e.g., CSPs), the exchange of data related to security, fraud, and other RP functions may be complicated by regulations or policy. However, establishing the necessary mechanisms and guidelines to enable effective information sharing SHOULD be considered in contractual and legal mechanisms. All data collected, transmitted, or shared by the identity service provider SHALL be subject to a detailed privacy and legal assessment by either the entity generating the data (e.g., a CSP) or the related RP for whom the service is provided.

Coordination and integration with various organizational functional teams can help to achieve better outcomes for the identity functions. Ideally, such coordination is performed throughout the risk management process and operations life cycle. Companion volumes [SP800-63A], [SP800-63B], and [SP800-63C] provide specific fraud mitigation requirements related to each of the identity functions.

Artificial Intelligence and Machine Learning in Identity Systems

Identity solutions use artificial intelligence (AI) and machine learning (ML) in various ways, such as improving the performance of biometric matching systems, automating evidence or attribute validation, detecting fraud, and even assisting users (e.g., chatbots). While the potential applications of AI and ML are extensive, these technologies may also introduce new risks or produce unintended negative outcomes.

The following requirements apply to all uses of AI and ML in the identity system, regardless of how they are used:

- All uses of AI/ML SHALL be documented and communicated to organizations that rely on these systems. The use of integrated technologies that leverage AI/ML by CSPs, IdPs, or verifiers SHALL be disclosed to all RPs that make access decisions based on information from these systems.

- All organizations that use AI/ML SHALL provide information to any entities that use their technology on the methods and techniques used for training their models, a description of the data sets used in training, information on the frequency of model updates, and the results of all testing completed on their algorithms.

- All organizations that use AI/ML systems or rely on services that use these systems SHOULD implement the NIST AI Risk Management Framework ([NISTAIRMF]) to evaluate the risks that may be introduced by such systems.

- All organizations that use AI/ML systems or rely on services that use these systems SHALL perform and document privacy risk assessments for personal information and data processed by such systems.
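
The information that the requirements above call for could be captured in a structured disclosure record so that gaps are easy to spot. The sketch below is one hypothetical way to do this. The `AIMLDisclosure` class and its field names are assumptions for illustration and do not represent a format defined by these guidelines.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class AIMLDisclosure:
    """Hypothetical record of what is disclosed about one AI/ML component."""
    component: str                   # e.g., a biometric matcher or fraud model
    purpose: str                     # how the component is used
    training_methods: str            # methods and techniques used for training
    training_data_description: str   # description of the training data sets
    model_update_frequency: str      # how often the model is updated
    test_results: str                # summary of or pointer to test results


def missing_fields(disclosure: AIMLDisclosure) -> List[str]:
    """Return the names of fields that were left empty or blank."""
    return [
        f.name for f in fields(disclosure)
        if not str(getattr(disclosure, f.name)).strip()
    ]


disclosure = AIMLDisclosure(
    component="document authenticity classifier",
    purpose="automated evidence validation",
    training_methods="supervised learning",
    training_data_description="",
    model_update_frequency="quarterly",
    test_results="internal evaluation report",
)
print(missing_fields(disclosure))
```

A completeness check of this kind only confirms that each item has been filled in. It does not assess whether the disclosed information is adequate.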

1. Further information on practice statements and their contents can be found in Sec. 3.1 of SP 800-63A.

2. For more information about privacy risk assessments, refer to the NIST Privacy Framework: A Tool for Improving Privacy through Enterprise Risk Management at https://nvlpubs.nist.gov/nistpubs/CSWP/NIST.CSWP.01162020.pdf.

3. Redress generally refers to a remedy that is made after harm occurs.